Supercomputer Frontier sets new record with 9.95 quintillion calculations per second

- In May 2022, the supercomputer Frontier broke the exascale barrier by performing 1.1 quintillion calculations per second, making it the world’s fastest computer.

- Using machine learning, Frontier recently broke its own record by performing a blisteringly fast 9.95 quintillion calculations per second.

- The Oak Ridge National Laboratory aims to use this supercomputing power to help scientists and researchers answer bigger, bolder questions.

Give people a barrier, and at some point they are bound to smash through. Chuck Yeager broke the sound barrier in 1947. Yuri Gagarin burst into orbit for the first manned spaceflight in 1961. The Human Genome Project finished cracking the genetic code in 2003. And we can add one more barrier to humanity’s trophy case: the exascale barrier.

The exascale barrier is the threshold of exascale-level computing, which has long been considered the benchmark for high performance. To reach that level, a computer needs to perform at least a quintillion calculations per second. You can think of a quintillion as a million trillion, a billion billion, or a million million millions. Whichever you choose, it’s an incomprehensibly large number of calculations.
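As a quick sanity check on those equivalences, the short Python sketch below confirms that all three phrasings describe the same number, 10^18 (using the short-scale naming common in American English). The constant and variable names are illustrative only.

```python
# A quintillion (short scale) is 10**18.
MILLION = 10**6
BILLION = 10**9
TRILLION = 10**12
QUINTILLION = 10**18

# The three phrasings used to describe a quintillion.
equivalents = {
    "a million trillion": MILLION * TRILLION,
    "a billion billion": BILLION * BILLION,
    "a million million millions": MILLION * MILLION * MILLION,
}

for phrase, value in equivalents.items():
    print(f"{phrase}: {value:,} (matches a quintillion: {value == QUINTILLION})")
```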

On May 27, 2022, Frontier, a supercomputer built by the Department of Energy’s Oak Ridge National Laboratory, managed the feat. It performed 1.1 quintillion calculations per second to become the fastest computer in the world.

But the engineers at Oak Ridge weren’t done yet. Frontier still had a few tricks up its sleeve (or, rather, in its chipsets). The supercomputer recently used machine learning to gain the super boost necessary to set yet another speed record.

Breaking the exascale barrier

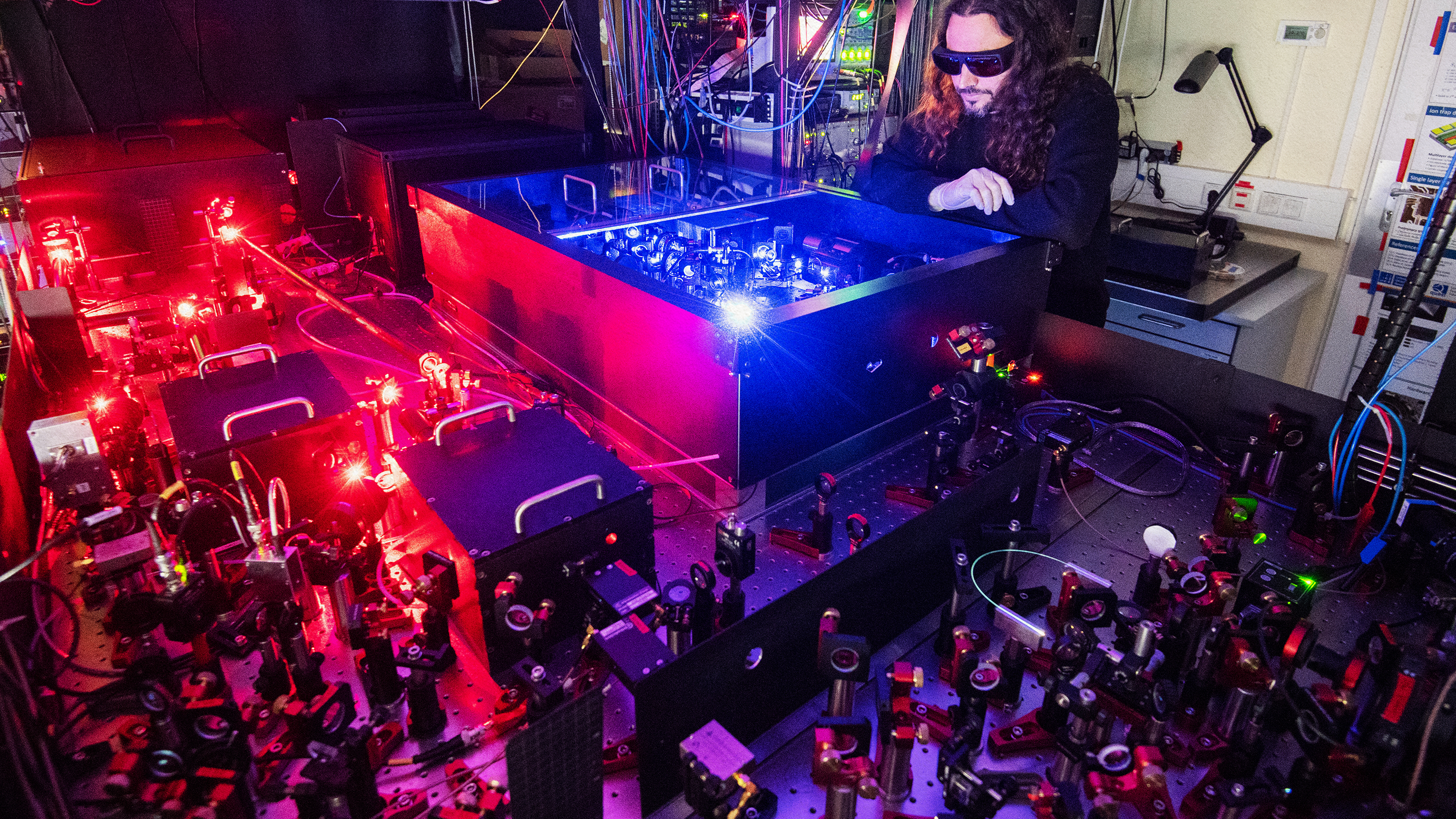

The first thing to know about Frontier is that it’s massive. It contains more than 9,400 nodes, and each node is essentially a self-contained, 150-teraflop supercomputer. These are spread through 74 interconnected cabinets. According to Bronson Messer, the director of science at Oak Ridge, each cabinet is the size of a commercial refrigerator and weighs around 8,000 pounds (or, he jokes, two Ford F-150s if we’re measuring in East Tennessee Units).
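Those node figures invite a back-of-the-envelope check. The sketch below multiplies the rounded numbers quoted here (9,400 nodes at roughly 150 teraflops each) and compares the total with the 1.1 quintillion calculations per second measured in May 2022. It is only a rough estimate built on rounded inputs, not an official peak-performance figure, and the variable names are hypothetical.

```python
NODES = 9_400              # "more than 9,400 nodes" (rounded figure from the article)
TERAFLOPS_PER_NODE = 150   # "150-teraflop" per node (rounded figure from the article)
MEASURED_EXAFLOPS = 1.1    # May 2022 double-precision benchmark result

TERA = 10**12
EXA = 10**18

# Aggregate of all nodes, expressed in exaflops (quintillions of calculations per second).
aggregate_exaflops = NODES * TERAFLOPS_PER_NODE * TERA / EXA

# Share of that rough aggregate that the measured benchmark represents.
benchmark_fraction = MEASURED_EXAFLOPS / aggregate_exaflops

print(f"Rough aggregate: {aggregate_exaflops:.2f} exaflops")
print(f"Measured run as a share of that: {benchmark_fraction:.0%}")
```

With these rounded inputs the aggregate comes to about 1.41 exaflops, so the measured run lands at a bit under 80% of the simple sum. A gap of that kind is expected, since a real benchmark also has to move data between nodes.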

The second thing to know is that Frontier’s initial speed record was set using a format called double precision. Double precision uses 64 bits to represent each number, which lets calculations cover a wider range of values, at finer resolution, than single precision (which uses 32 bits).

“Think of the difference between measuring a circle by calculating pi with two decimal places versus 10, 20, or more decimals,” said Feiyi Wang, an Oak Ridge National Laboratory computer scientist.
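Wang's circle analogy can be made concrete with a few lines of Python. The sketch below measures how far off a circumference would be if pi were rounded to two decimals or stored in 32 bits, compared with Python's standard 64-bit value. The Earth-sized radius and the helper names are assumptions chosen for illustration; nothing here comes from Frontier's software.

```python
import math
import struct


def to_float32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


EARTH_RADIUS_M = 6_371_000  # illustrative radius in meters, not from the article


def circumference_error_m(pi_approx: float, radius_m: float = EARTH_RADIUS_M) -> float:
    """Error in 2*pi*r, in meters, from using pi_approx instead of 64-bit pi."""
    return abs(2 * radius_m * (pi_approx - math.pi))


pi_two_decimals = 3.14
pi_single = to_float32(math.pi)

print(f"pi to 2 decimals: off by {circumference_error_m(pi_two_decimals):,.0f} m")
print(f"pi in 32 bits:    off by {circumference_error_m(pi_single):.2f} m")
```

Under these assumptions, two decimals of pi miss an Earth-sized circumference by roughly 20 kilometers, while 32-bit pi misses it by about a meter. Whether a meter matters depends entirely on the problem, which is the trade-off the rest of this section turns on.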

However, while double precision can perform calculations with a high level of numerical accuracy, those extra bits come at the cost of computational resources such as processing power. To calculate faster, Frontier would need to lower its precision to free up those resources. This is where machine learning comes into play.

“It’s not so much that we went to plant another flag out further,” Messer told Freethink. “[This] benchmark was exercising hardware specifically designed to do AI and machine learning. We wanted to make sure that it was working at the very limits of its capability as well.”

Importantly, this hardware wasn’t used in Frontier’s initial record-breaking run.

Frontier goes hypersonic

During benchmark tests, Frontier is asked to solve a bunch of linear algebra equations all at once. These equations contain patterns — certain steps that recur over and over — and Frontier’s machine-learning algorithm can learn to recognize these patterns. Once recognized, it can then determine which equations require what level of precision.

If the equation requires a high level of numerical accuracy, Frontier uses double precision. But if the equation does not require such heightened accuracy, the supercomputer can throttle the precision back to 32, 24, or even 16 bits. This strategy is known as mixed precision.
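To give a feel for the idea of matching precision to need, here is a toy Python sketch that picks the narrowest standard floating-point width that stores a value within a requested tolerance. This is an illustration only: the article does not describe how Frontier's benchmark actually selects precision, the function names are hypothetical, and Python's `struct` module offers only 16-, 32-, and 64-bit formats, so the 24-bit case is left out.

```python
import math
import struct

# struct format codes for IEEE 754 half, single, and double precision.
FORMATS = [(16, "e"), (32, "f"), (64, "d")]


def round_to_format(x: float, code: str) -> float:
    """Round x to the given format and return it as a Python float."""
    return struct.unpack(code, struct.pack(code, x))[0]


def smallest_sufficient_bits(x: float, rel_tol: float) -> int:
    """Return the narrowest width (16, 32, or 64) that stores x within rel_tol."""
    for bits, code in FORMATS:
        try:
            stored = round_to_format(x, code)
        except OverflowError:
            continue  # x is too large for this format; try a wider one
        if math.isclose(stored, x, rel_tol=rel_tol, abs_tol=0.0):
            return bits
    return 64  # fall back to double precision


for value, tol in [(0.5, 1e-3), (math.pi, 1e-3), (math.pi, 1e-6), (math.pi, 1e-12)]:
    bits = smallest_sufficient_bits(value, tol)
    print(f"value={value!r}, tolerance={tol}: {bits} bits")
```

The looser the tolerance, the fewer bits are needed, and fewer bits mean less data to move and process per calculation.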

Messer compares this strategy to shopping at the grocery store. Double precision is the equivalent of checking every aisle to make sure you get everything on your list. It’s thorough, but time- and energy-consuming. However, once you learn to see patterns in the grocery store’s layout, you can make your trips more efficient by only visiting certain aisles and grabbing specific items.

“Traditionally, high-performance computing has been all about doing everything in double precision, and there are good reasons for that,” Messer said. “A lot of problems need to calculate something that’s happening because of the influence of two countervailing forces. Climate is a good example of that. You’ve got input from the sun, atmospheric chemistry, all kinds of stuff.”

But, Messer adds, in other cases that extra accuracy doesn’t matter because the calculations don’t require that many digits of precision. When a supercomputer learns to throttle back for those calculations, it saves a huge amount of computational resources.

And Frontier did just that. Using mixed precision, the supercomputer clocked a mind-boggling speed of 9.95 quintillion calculations per second. That’s roughly eight times faster than the calculation speeds that broke the exascale barrier, the equivalent of going from Mach 1 to hypersonic speeds in about a year.
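The comparison between the two records is simple arithmetic, sketched below in Python with hypothetical variable names. Keep in mind that the two runs used different numerical precision, so this compares headline figures and is not a like-for-like measurement.

```python
EXASCALE_RUN = 1.1e18          # calculations per second, May 2022 (double precision)
MIXED_PRECISION_RUN = 9.95e18  # calculations per second (mixed precision)

# How many times as fast the newer run was.
speed_ratio = MIXED_PRECISION_RUN / EXASCALE_RUN

# The increase, measured in multiples of the original speed.
times_faster = speed_ratio - 1

print(f"{speed_ratio:.2f} times as fast, or about {times_faster:.0f} times faster")
```

The newer figure is about nine times the older one, which is the same as saying it is roughly eight times faster.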

Expanding the frontier of science and research

But can it run Crysis? Yes, it can, Messer laughs.

Frontier could run Crysis — or any other video game for that matter — at full frame rate 10,000 times over. However, since the team isn’t allowed to play games, mine cryptocurrency, or check their social media feeds on Frontier, they are instead using its amazing processing powers to advance science and research.

The supercomputer is currently helping GE engineers study turbulence and design rotor blades for an open-fan jet engine. Such engines could theoretically improve fuel efficiency by 20%, making air travel cheaper and cleaner.

Frontier is also being used to model worldwide cloud formations. The models are part of the Energy Exascale Earth System Model, a project that aims to combine Frontier’s speedy calculations with new software to create climate models that accurately predict decades of change. For their efforts, the team working on these simulations won the 2023 Gordon Bell Special Prize for Climate Modeling.

“We finally have climate models that are at a high enough resolution to resolve clouds for climate simulations at a rate of one simulated year per day. That means you can do a 40-year climate run in about a month, which is a remarkable achievement,” Messer said. “[After all], you want to make sure that you can run your simulations faster than the climate actually changes.”

Frontier also has the potential to help researchers arrange new protein structures for medical therapies, simulate natural disasters to aid emergency planning and forewarning, or make nuclear reactor designs safer by generating high-fidelity models of reactor phenomena.

“There’s no scientific discipline that doesn’t get touched by supercomputing in some way,” Messer said. “We’re going to have projects ranging from the biggest to the smallest scales — from simulations of the universe to quarks being held in nuclei. It’s this ability to attack scientific problems across the spectrum of human inquiry that gets me up and jazzed every morning.”

He added: “That’s our mission: To enable those questions that can’t be answered anywhere else. If they didn’t have that much computing power, they wouldn’t even be able to ask the question.”

And by combining those questions with Frontier’s calculating speed, who knows what other barriers we might one day break through.

This article was originally published by our sister site, Freethink.