The Rory Sutherland interview: Bees, magic, and the folly of “Laplace’s demon”

Credit: WWF Global Photo Network / WWF-Canon / Richard Stonehouse / CC BY-NC-ND 2.0

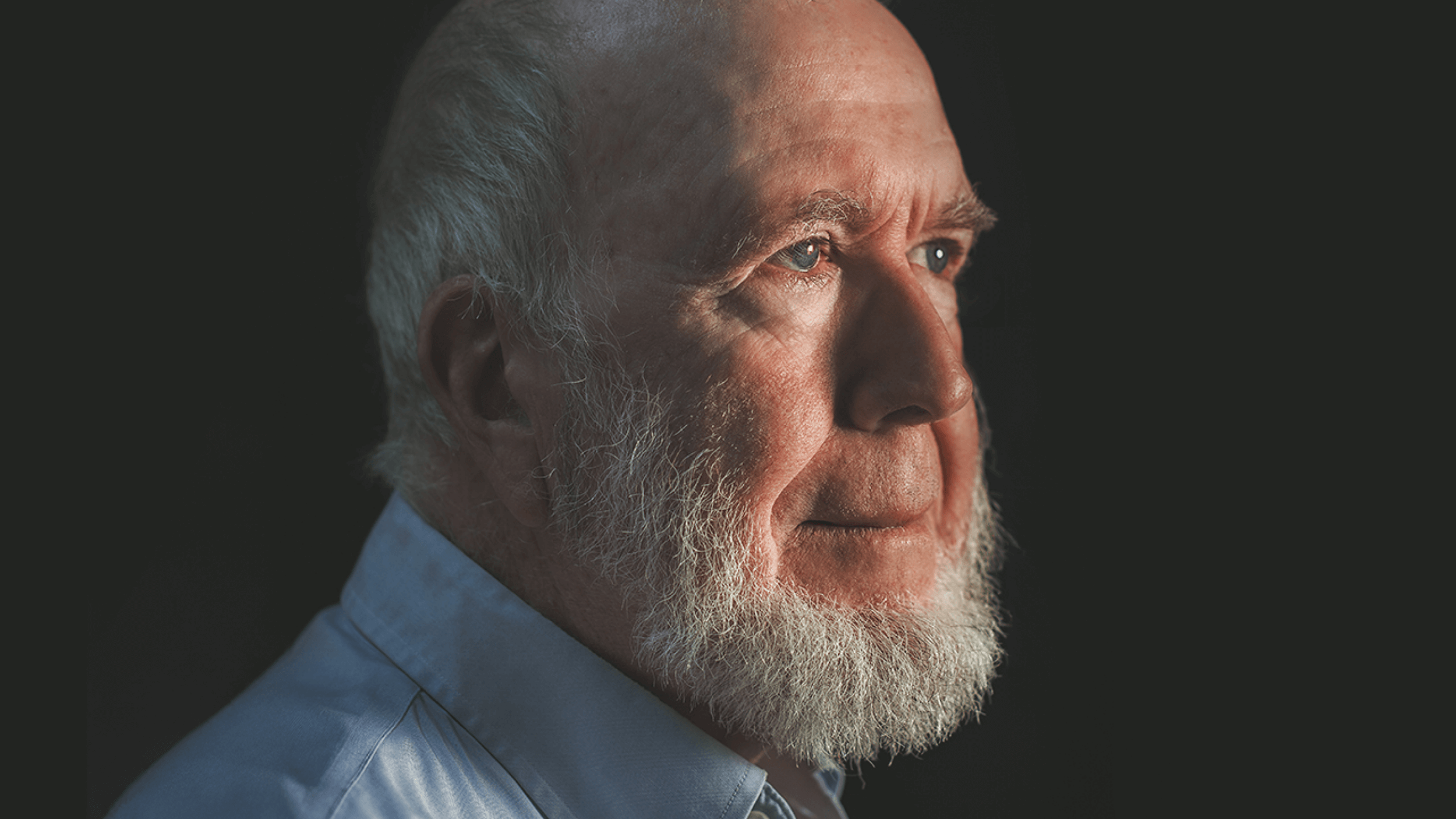

- Rory Sutherland, UK Vice Chairman at advertising agency Ogilvy, is a thought leader at the intersection of business and behavioral psychology.

- Sutherland connects the resilience of bees to longevity and success in innovation.

- Also covered in this Q&A: How Uber created magic from psychological messiness; our over-reliance on reason; and why friction can be golden.

Rory Sutherland, as he often does, wants to talk about bees. As Vice Chairman (UK) of advertising agency Ogilvy — and one of the most provocative voices in business and behavioral psychology — Sutherland has spent years making a compelling, counter-intuitive case for inefficiency as a driver of innovation, longevity, and success.

In a world obsessed with optimization, Sutherland suggests, inefficiency that is designed with care can lead to extraordinary, exponential outcomes. In one of his latest pieces, “Are We Too Impatient to Be Intelligent?”, he argues that true breakthroughs often come not from speeding things up, but from deliberately slowing them down.

Bees, he thinks, can help explain much of this.

Recently, I joined Sutherland for a chat about long-term thinking, innovation, psychological “magic,” and much more, starting with his apicultural analogy of choice.

Eric Markowitz: So, Rory, what can we learn about business strategy from bees?

Rory Sutherland: There are “explore bees” and “exploit bees,” and the ratio between them varies. But here’s the key: you need a certain percentage of bees focused on exploring. These bees serve a dual purpose. Without them, you can’t get lucky. You can only become marginally better at what you’re already doing, but you’ll never discover a new source of pollen or nectar. In other words, you miss out on positive, serendipitous opportunities.

Beyond that, these explore bees provide resilience in a changing environment. Without a balance of exploration alongside exploitation, two major problems emerge: first, you never really grow. Second, you risk going extinct very quickly. This idea is incredibly relevant to businesses. Many aren’t growing well because they’re too focused on operational efficiency — doing the same thing they did last year slightly better — rather than asking tougher questions like, “What should we be doing differently?”

Take the car industry, for example. We must sympathize with volume manufacturers like Toyota or Volkswagen, but they arguably should have seen electrification coming. Almost every rotating device in our homes has steadily become electrified. It was only a matter of time before this shift reached automobiles. Yet many car-makers clung to improving existing operations rather than exploring bold new directions. That lack of exploration hinders both growth and long-term survival.

Eric Markowitz: You’ve written about how economics tries to model human behavior using physical laws. What’s the issue with this approach?

Rory Sutherland: Economics often tries to model and predict human behavior using Newtonian-style physical laws. But the great thing about psychology is that it’s completely messy. And consequently you can produce “magic” through psychology, which is not possible if you’re hanging onto what’s possible.

Take Uber, for example. A Newtonian economist would say, “To make customers happier, you need to reduce waiting time.” But a psychologist might say, “If you show customers their taxi on a map and let them watch it approach, they’ll feel less uncertain and less annoyed by the wait.” Under 95% of conditions, it’s the equivalent of reducing waiting times, because the degree of uncertainty and pain they experience is less. They’re less bothered by the wait. And that’s exactly what Uber did. The map didn’t reduce wait times, but it reduced the psychological discomfort of waiting, and that was enough to make people happier. It’s a brilliant example of psychological magic.

By clinging to Newtonian certainty, economics often destroys the opportunity to do something magically creative.

Eric Markowitz: Why do you think businesses tend to avoid this “messiness” in favor of quantification?

Rory Sutherland: I think there’s a problem when you live in a world obsessed with quantification. There are two main issues. First, all big data comes from the past. Big data might be very useful for predicting the immediate future, but it becomes increasingly useless when trying to predict even the intermediate future, let alone the far future.

Second, slow processes are very hard to measure, not necessarily because they can’t be measured, but because we lack the patience to do so. In advertising, I’ve noticed that brands often overinvest in, and consequently overestimate the importance of, what you might call short-term, fast-feedback activities. These provide immediate efficiency gains but come at the expense of longer-term, compounding investments.

Eric Markowitz: Can you give an example of this distinction between short-term and long-term investments?

Rory Sutherland: Of course. Investment in a brand or in fame is a compounding investment. Short-term activities offer linear, immediate growth, while long-term activities deliver deferred but exponential growth. Jeff Bezos highlighted this perfectly: there’s no point in focusing solely on short-term optimization because all your competitors are doing the same. By doing so, you’re simply benchmarking yourself against them, becoming more similar, and ultimately losing your opportunities for differentiation or distinctiveness.

Eric Markowitz: In the past, you’ve referenced Voltaire. What does his critique of reason have to do with all this?

Rory Sutherland: Voltaire’s Bastards is a brilliant book about our over-reliance on reason. It’s seductive because reason helps us win arguments. But the best-reasoned argument doesn’t always lead to the best decision. Evolution has taught humans to embrace complexity and ambiguity. Sometimes it’s better to act on intuition or take a wild bet, even if it seems irrational. This is especially true in business. Institutional decision-making often rewards safe, logical choices because they’re easy to justify. But true innovation requires messiness and experimentation, which can’t always be reasoned out in advance.

Eric Markowitz: You’ve also talked about the dangers of trying to over-optimize for the future. How does that play out?

Rory Sutherland: I think there’s a fundamental question we have to address, which is that we’re fighting this battle against a kind of weird conspiracy. I think it involves the tech-consulting-financial complex, which has this fantasy of perfect quantification leading to perfect prediction, and then to perfect decision-making. It’s equivalent to a scientific idea that was later rejected — once chaos and complexity theory emerged — the idea of “Laplace’s demon.” The notion was that if you knew enough, everything would become predictable and knowable. But Edward Lorenz’s work in climate prediction, for example, shows that beyond a certain point, things are to some degree unknowable.

Faced with that, people tend to do one of two things. They either pretend, through extrapolation, that the future is knowable. Or they just neglect to consider it altogether. The consequence of this mindset is that if you only focus on short-term, incremental improvement, you end up trapped in a “local maximum.” Worse, you might actually end up dying because you become over-optimized for the past or the near future. My argument is that when it comes to the intermediate future — never mind the far future, which is too mysterious for all of us — we don’t need to predict it exactly. We simply have to accept that it’s probabilistic.

Eric Markowitz: You have also written about the value of inefficiency — what’s the problem with efficiency?

Rory Sutherland: There are things in life where the value is precisely in the time spent, the effort endured, or the friction involved. Deliberate friction can actually be a good idea. Take recruitment at Goldman Sachs. Candidates can go through eight interviews, and if they don’t hear back, they’re expected to follow up by themselves. That added friction filters out people who aren’t truly committed.

Or consider the IKEA effect. We value IKEA furniture partly because the experience of buying it is painful — and we have to put it together ourselves. The effort we invest creates emotional attachment. Even email, which is fast and free, suffers because it lacks friction. Its instant nature means people send too many emails, often copying unnecessary recipients. There’s no cost to hitting “Reply All,” so email replication grows exponentially, leading to inboxes overflowing with noise. Imagine if sending an email to multiple recipients added a delay. It would force us to think before we send.

Ironically, by slowing things down, we’d become more efficient.

Eric Markowitz: Does this obsession with efficiency affect how we think about technology, especially AI?

Rory Sutherland: Absolutely. Most AI is designed to give you instant answers. But in real life, we often refine our preferences iteratively. Take dating, for example. The person you end up marrying probably doesn’t meet all your initial criteria. Through the process of meeting people, you discover what truly matters to you — your revealed preferences. If AI could mimic that iterative process, it would be much more useful. Instead of instantly suggesting five hotels in Greece, it might say, “Here’s one option. What do you think? By the way, have you considered Turkey?” This back-and-forth helps refine your preferences in a way that feels more human.

Eric Markowitz: So slowing down and adding friction makes us more intelligent?

Rory Sutherland: Exactly. By slowing down and adding deliberate inefficiencies, we create opportunities for “magic.”