MIT’s robotic nose can detect first sign of disease

- Our smartphones know a lot about us: they can hear us, see us, and feel our touch. But they can’t smell us.

- MIT researchers are working toward a future where we can have a mini olfactory system built into our smartphones.

- MIT’s robotic nose learned what cancer smells like.

This article was originally published on our sister site, Freethink, and is an installment of The Future Explored, a weekly guide to world-changing technology.

Our smartphones know a lot about us: they can hear us, see us, and feel our touch.

What they can’t do is smell us — at least, not yet. But MIT researchers are working toward a future where we can have a mini olfactory system right inside our pockets.

Sniffing out disease: Diseases often change human body odor — and being able to pick up on this odor could lead to earlier and more accurate medical diagnoses.

According to Business Insider, modern medical articles have described yellow fever as smelling like raw meat, typhoid as baked bread, and diabetic ketosis as rotten apples. Two years ago, a woman swore she could smell her husband’s Parkinson’s disease.

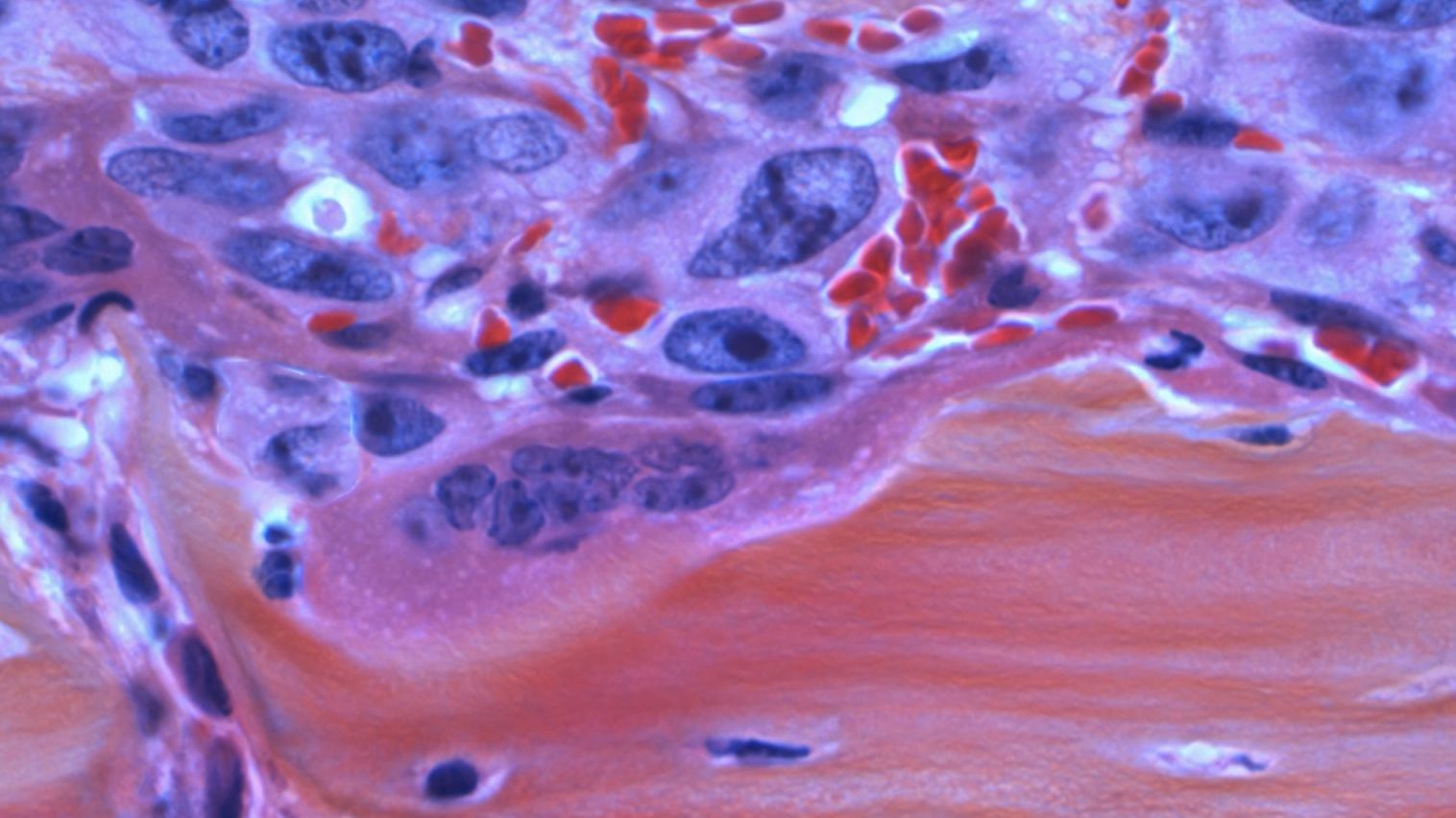

And scientifically, this checks out: when a healthy cell is attacked by a virus, a toxic byproduct is produced. This byproduct can be emitted from the body via breath, sweat, or urine.

“In theory, this should be the earliest possible detection of any possible infection event,” Josh Silverman, CEO of Aromyx, a biotech startup that focuses on digitally replicating our sense of smell, told BI.

“You’re measuring the output of an infected cell. That can happen far before you get any viral replication.”

The problem is that a person’s sense of smell is weak and subjective, so we can’t rely on a human nose to diagnose a patient. A dog’s nose is much more sensitive, up to 100,000 times better than ours. (Sniffer dogs have even been trained to screen travelers for coronavirus.)

“Dogs, for now 15 years or so, have been shown to be the earliest, most accurate disease detectors for anything that we’ve ever tried,” said Andreas Mershin, a scientist at MIT. “So far, many different types of cancer have been detected earlier by dogs than by any other technology.”

The problem with dogs is that they have to be trained to sniff out each specific disease — and training them is expensive and time-consuming. Plus, it’s not super convenient to bring a dog into every doctor’s office or airport, for example.

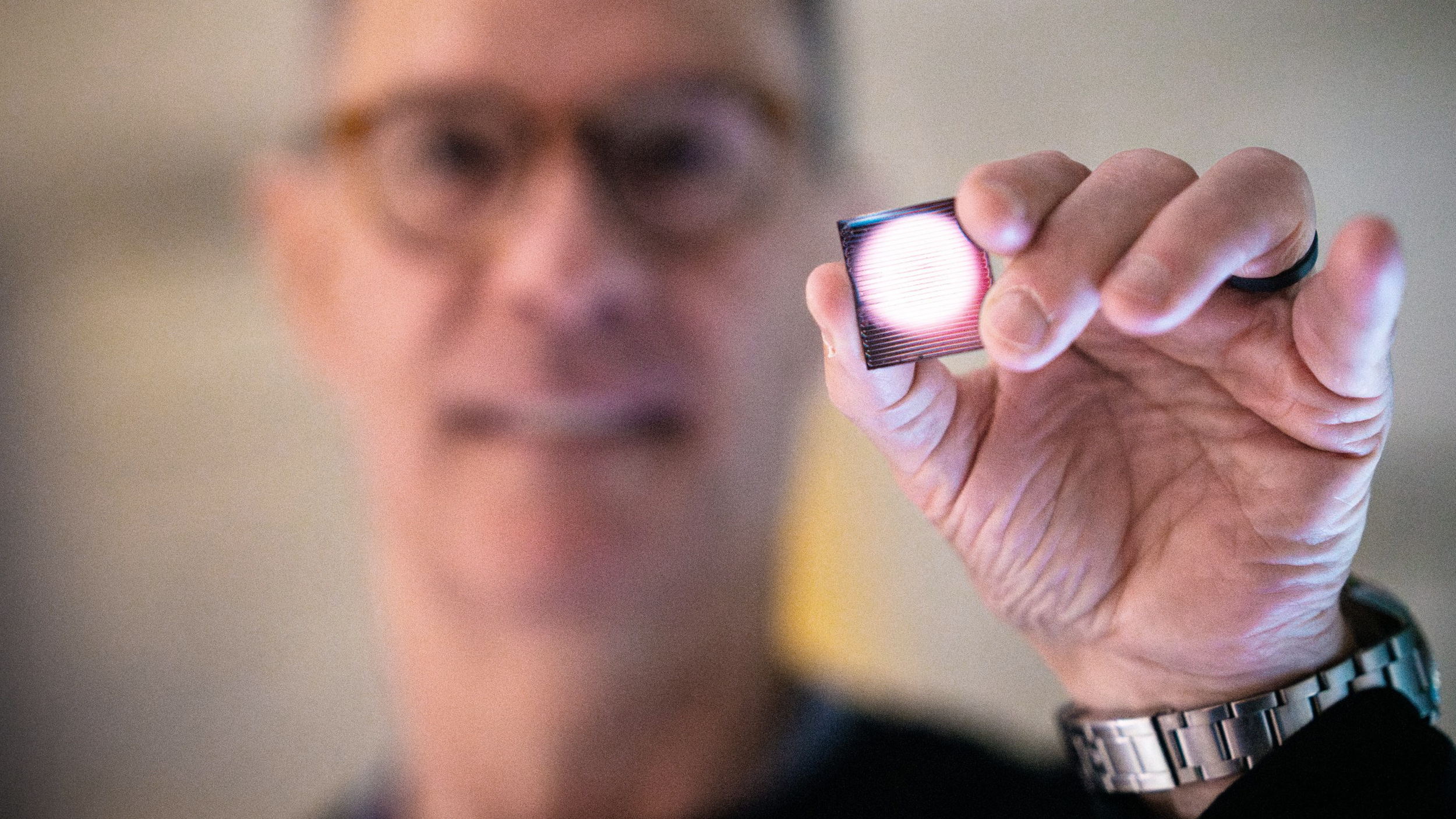

So Mershin and his team are creating a digital dog nose that could eventually be built into every smartphone.

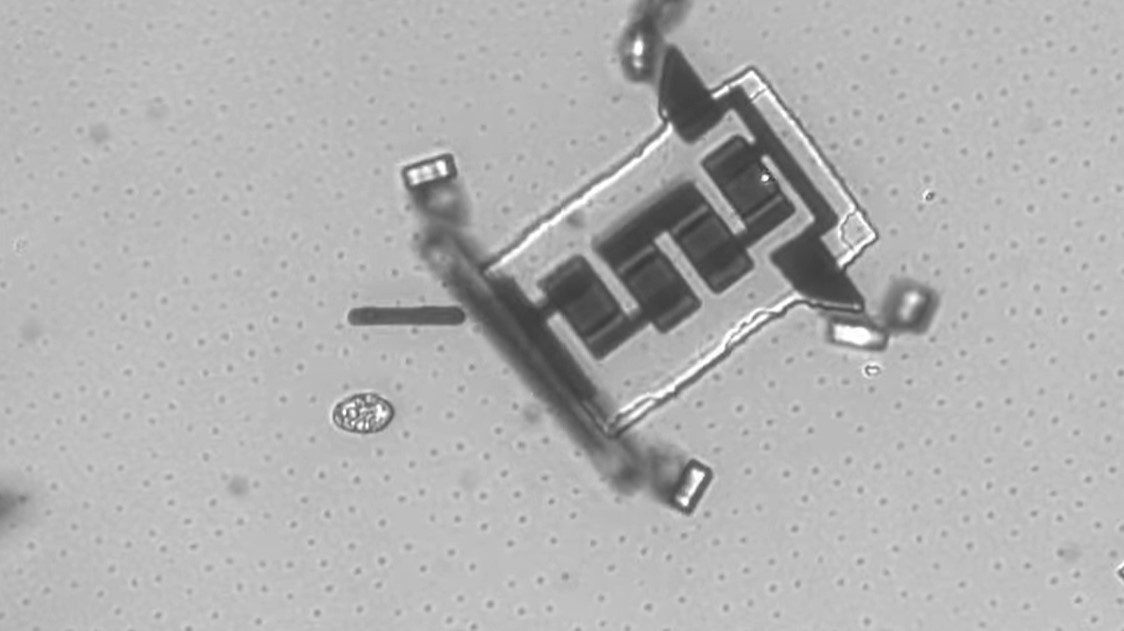

Nano-Nose: Earlier this year, Mershin and his team announced they had created a Nano-Nose — a robotic nose powered by AI — that could identify cases of prostate cancer from urine samples with 70% accuracy. The study claims that the robotic nose performed just as well as trained dogs in detecting the disease.

“Once we have built the machine nose for prostate cancer, it will be completely scalable to other diseases,” Mershin told the BBC.

According to Mershin, the Nano-Nose is “200 times more sensitive than a dog’s nose” when it comes to detecting and identifying tiny traces of different molecules emitted from a human body.

But the device is “100% dumber” when it comes to interpreting those molecules. That’s where AI comes into play — the data collected by the Nano-Nose sensors is run through a machine learning algorithm to interpret complex patterns of molecules.

Interpreting patterns is key to making an accurate diagnosis. The presence of a molecule or even a group of molecules doesn’t necessarily signify cancer — but a complex pattern can. Scientists are still trying to figure out these patterns, but dogs pick up on them naturally.

“The dogs don’t know any chemistry,” Mershin said. “They don’t see a list of molecules appear in their head. When you smell a cup of coffee, you don’t see a list of names and concentrations, you feel an integrated sensation. That sensation of scent character is what the dogs can mine.”

To train the AI to mine this sensation as well as the dogs can, the team had to carry out a detailed analysis of the individual molecules in the urine samples and also characterize the genetic composition of the urine. They trained the AI on this data, as well as on the data the dogs produced in their own analysis, to see whether the machine could recognize a pattern across the different data sets.

And it did: the machine learned what cancer smells like.

“What we haven’t shown before is that we can train an artificial intelligence to mimic the dogs,” Mershin said. “And now we’ve shown that we can do this.”

Outside the lab: Next, Mershin and his team need to replicate these results in a bigger study — he’s hoping to conduct another study with at least 5,000 samples. He also needs to ensure the system works outside the pristine lab environment; to be useful in the real world, the system is going to have to work in environments where multiple smells are present.

Still, this proof of concept is exciting because it suggests that a powerful way to diagnose diseases could eventually be found in everyone’s pocket.

Smartphones that smell are nearing reality, Mershin told Vox.

“I think we’re maybe five years away, maybe a little bit less,” he says, “to get it from where it is now to fully inside of a phone. And I’m talking [about deploying it] into a hundred million phones.”