Why ChatGPT feels more “intelligent” than Google Search

- Artificial intelligence caught the public’s imagination when OpenAI released ChatGPT in November 2022.

- For many users, ChatGPT feels like a true AI compared to other tools such as Google Search.

- In this op-ed, Philip L, the creator of the AI Explained YouTube channel, explains why he thinks new video interfaces will give people a sense of AI as a “thing” rather than a “tool.”

The Google Search bar doesn’t feel like an artificial intelligence. No one speculates that it might soon become an artificial general intelligence (AGI), an entity that is competitive with a human being across many domains.

But do you know many “generally intelligent” humans who can muster a decent translation into and out of 133 languages?

Or a co-worker who can perform mathematical operations instantly, know a good route between pretty much any two locations on the planet, and proffer a plausible answer to every question you might ask in less than a second?

Sure, these answers aren’t original, nor are they especially great. And they are increasingly smothered in ads that benefit the search bar’s parent company. But again, compare a top Google search result to your own knowledge on a random subject.

So why doesn’t Google Search feel like an artificial intelligence, while language models like ChatGPT, and especially the new GPT-4o, often do?

Here are three reasons — if they are accurate, then we can glimpse a wave that will be landing in the coming 12 months.

First, Google Search clearly derives its answers from human sources (i.e., webpages). That doesn’t count as “knowing,” we chortle, even if the algorithms behind finding and delivering relevant results are fantastically sophisticated.

And second, even if it did “know things,” intelligence is about spotting patterns in what we know, drawing inferences and acting on them, not just “recalling” something.

I’ll come back to reasoning and taking actions in the world, but there is a final, crucial reason why no one considers the search bar to be intelligent — a reason that matters just as much, if not more, to our growing sense of language models being entities, rivals even, true AIs, and not just handy tools.

There has been no existential angst, and no widespread job-replacement fear, about the Google Search bar because it is a portal rather than a personality. No one believes the Search bar is the thing providing the answer to your question; instead, it is speedily taking you to places that give you answers.

But chatbots don’t need to break the illusion by bringing you to different sites or citing sources, nor can they or anyone pinpoint the training data that drove the output they just gave you. The simple, singular interface of ChatGPT makes you feel like the thing you are chatting to actually knows things. For many, it now feels appropriate to say “ChatGPT believes that …,” while no one would say “Google Search believes …”

The extent to which large language models like ChatGPT actually go beyond memorization of training data into basic reasoning is an article for another day, but even if they are just imitating the reasoning steps that they have “seen” in the training data, it sure feels like they are “thinking” about what you are saying.

Language models answer with personality, too. The ChatGPT that was unveiled in November 2022 thanks you for your question, admits mistakes, and can hold a back-and-forth conversation. OK, we know that it is not actually grateful for your question, and would admit to 2+2=5 if you push it hard enough, but the “Chat” part of ChatGPT was enough to transfix millions of people who had been uninterested in language models.

Responses don’t come back as a block, like Google Search results, but as a stream of text, as if they are being composed by someone typing with over-eager speed.

Yes, the models have, in the intervening 18 months, also gotten “more intelligent,” with GPT-4, Google Gemini, and Claude 3 Opus all vying for the title of “smartest model.” But the recent AI craze didn’t wait for this intelligence growth: ChatGPT got to 100 million users before the GPT-4 upgrade.

Of course, the professionals in my GenAI networking community focus mostly on how models can now process documents, analyze image inputs, generate catchy music, and absorb hundreds of thousands of words at once, at ever greater speeds.

But just as important for the public, in my opinion, has been that growing sense of “humanness,” with speech input and output added.

So I want you to pay attention to this more visceral shift, this coalescence of web-scale training data, tools, and abilities into an increasingly human-like conversational interface — the humanifestation, if you will, of deep learning-derived artificial intelligence.

If I am right, it is the human-like, all-in-one nature of chatbots that is driving most of the fear and excitement around AI and fueling the idea that we are birthing a new digital species.

Hence the unprecedented interest in guessing timelines for the creation of AGI (most predictions falling between 2025 and 2030, if you’re wondering), as well as frustrations over that term’s growing popularity.

This would also explain the popularity of Character.AI, an app with tens of millions of users who spend hours interacting with roleplaying bots. Users are suspending disbelief, at scale, and some are even falling in love.

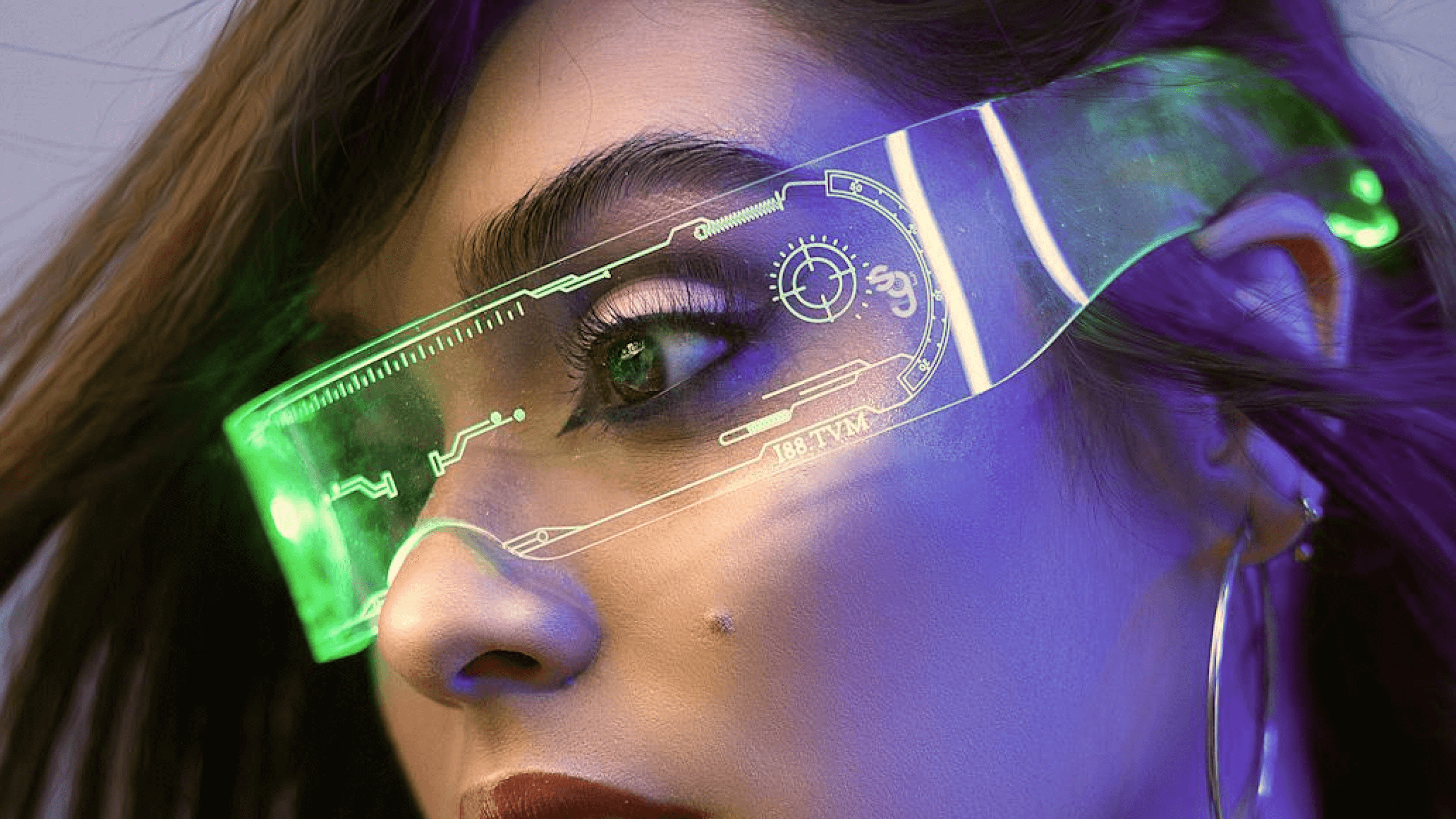

We already have the technology for AI models to have video avatars.

If it is the ersatz humanity of models that is their superpower, rather than their benchmark scores, then we may be able to anticipate a second wave of AI-mania, due in the coming months.

Models will soon have video avatars. The technology is there, with lip-syncing, facial expressions, and text-to-speech getting more life-like and generated in real time. Models can even analyze the emotions of your voice and adapt their speech to your mood.

Imagine video-calling GPT-5, as soon as 2024 or early 2025. Your favorite avatar answers (of course, you speak on a first-name basis), picking up the conversation exactly where you left off.

It won’t hurt your perception of “general intelligence” that you would be chatting to a model that likely knows more about medicine than many doctors, more about the law than junior lawyers, and doubles as an effective counselor and decent financial advisor.

The condensed insights from trillions of words — public or otherwise — and billions of frames from video sources like YouTube, all presented in a simple, human form.

And unlike a perfectly passive search bar, these new models will also be trained from the ground up to take basic actions on your behalf, asking questions when they are not sure what to do. Not yet trusted to act on their own, but worthy assistants nonetheless.

I know, I know: this is mostly just putting a pretty, chatty face on the same technology, plus a bit of fine-tuning and finessing. But that’s what ChatGPT was, in friendly text form, to the GPT-3 base model that was released in 2020.

In November 2022, it was billed as a low-key research preview. And we all know the frenzy that came after.

If I am wrong, this new video interface will be a passing fad and cause little new interest or demand. AGI will be a term that slips into obscurity, and growing debates over its technical definition will fizzle out. Instead, people will simply await smarter models — ones that can, for example, do their dishes or solve any real-world software engineering challenges they may face (for real this time).

But if I am right, billions may soon feel the AGI. The sense of AI being a “thing” rather than a “tool” will grow, with all the concomitant angst and addiction.

Sure, older hands will complain about its inaccuracies, and how the young spend all day on something else that will do them no good. Concerns about AI persuasion will only grow. Many will feel possessive about their models, and protective of the relationships they build with them. More debates will rage, without end, over AI consciousness. And then will come the AI adverts. Delivered by the model you have come to rely on, slipped into conversations in 2025.

Who knows, it may well be a Google model that first claims the mantle of an artificial general intelligence. Not only did Google invent the Transformer architecture behind ChatGPT, it pioneered the implementation of neural networks in Search … and that, handily enough, brings us back to the humble search bar, and what you might soon want to ask it.

“Hey Search, it’s me. You know how we’ve never before created something that resembles a new species — how do you think that’s gonna go for us?”

Philip is the creator of the AI Explained YouTube channel. He also runs AI Insiders, a community of more than 1,000 professionals working in generative AI across 30 industries, and authors the newsletter Signal to Noise.

This article was originally published by our sister site, Freethink.