superintelligence

A sobering thought for anyone laughing off the idea of robot overlords.

Elon Musk’s new company will use “neural lace” technology to link human brains with machines.

This AI hates racism, retorts wittily when sexually harassed, dreams of being superintelligent, and finds Siri’s conversational skills to be decidedly below her own.

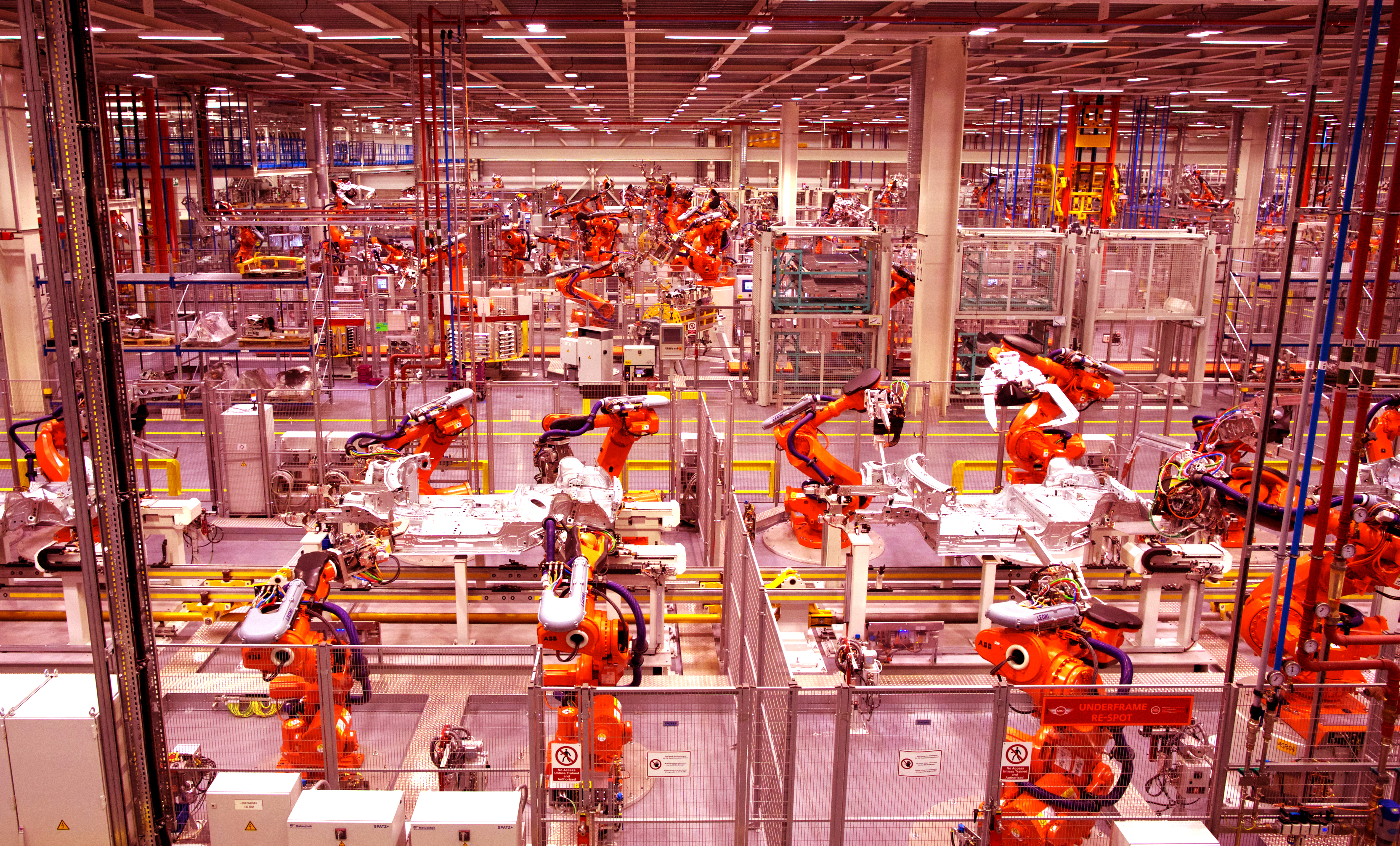

At a recent conference on artificial intelligence that also included Elon Musk, Ray Kurzweil, Sam Harris, Demis Hassabis and others, philosopher and cognitive scientist David Chalmers warns of an AI-dominated future world without consciousness.

We cannot rule out the possibility that a superintelligence will do some very bad things, says AGI expert Ben Goertzel. But we can’t stop the research now – even if we wanted to.

A recent conference on the future of artificial intelligence features a visionary debate among Elon Musk, Ray Kurzweil, Sam Harris, Nick Bostrom, David Chalmers, Jaan Tallinn and others.