Study: 50% of people pursuing science careers in academia will drop out after 5 years

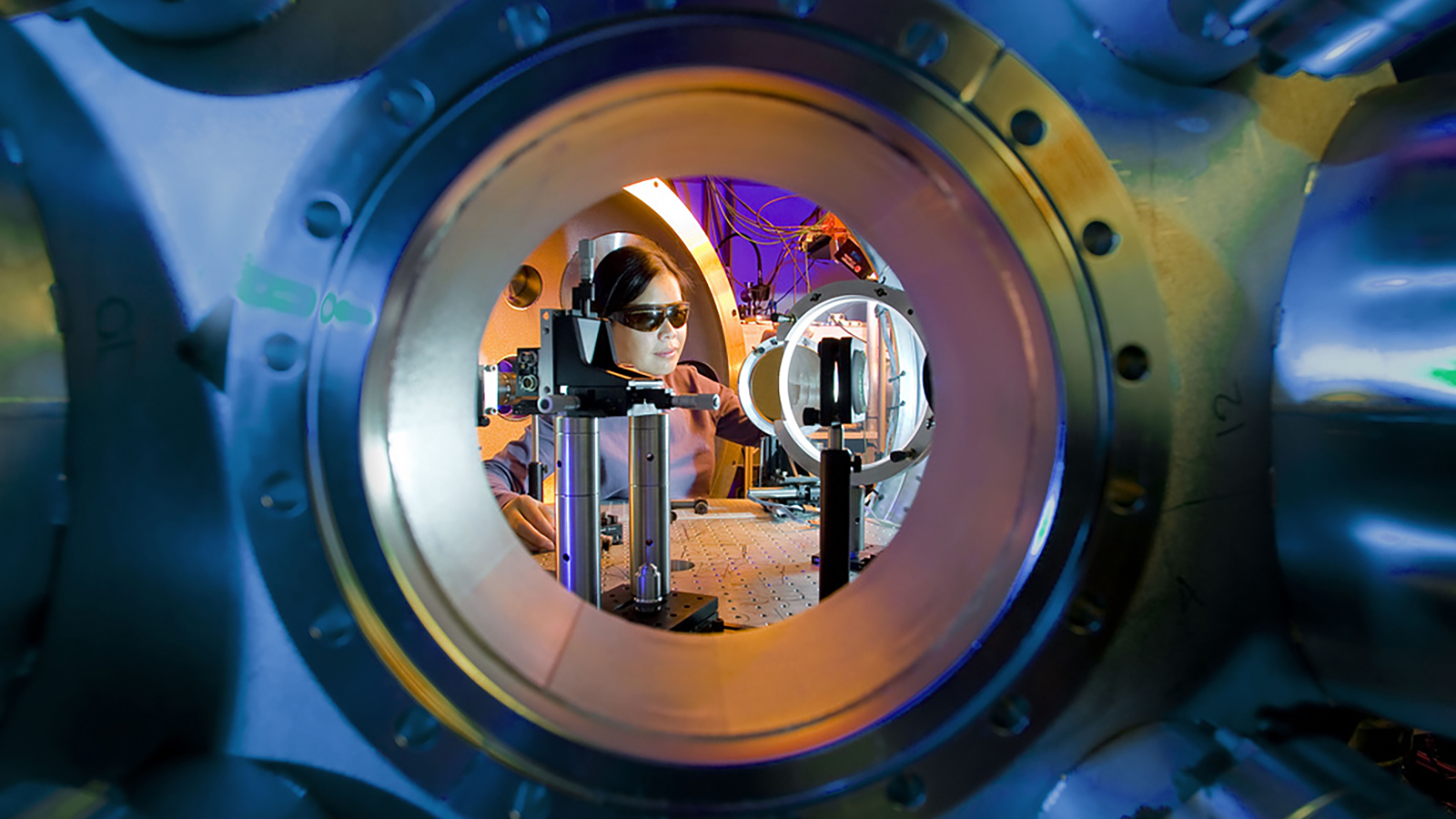

Pixabay

- The study tracked the careers of more than 100,000 scientists over 50 years.

- The results showed career lifespans are shrinking, and fewer scientists are getting credited as the lead author on scientific papers.

- Scientists are still pursuing careers in the private sector, but there are key differences between research conducted in academia and industry.

A new study reveals that half of people pursuing careers in academic science will drop out of their field after just five years.

The analysis, published in the Proceedings of the National Academy of Sciences, tracked the careers of more than 100,000 scientists over 50 years.

“Between 1960 and 2010, we found the number of scientists who spent their entire career in academia as supporting scientists — rather than a faculty scientist — has risen from 25 percent to 60 percent,” lead author Staša Milojević, an associate professor at Indiana University Bloomington’s School of Informatics, Computing and Engineering, told News at IU Bloomington. “There seems to be a broad trend across fields in science: It’s increasingly a revolving door.”

The study found that the number of scientists who never get credited as a study’s primary author has risen by 35 percentage points since the 1960s, when just 25 percent of scientists never led a published study. Not publishing can hurt scientists’ chances of gaining secure employment.

“Academia isn’t really set up to provide supporting scientists with long-term career opportunities,” Milojević told News at IU Bloomington. “A lot of this work used to be performed by graduate students, but now it’s typical to hire a ‘postdoc’ — a position that practically didn’t exist in the U.S. until the 1950s but has since become a virtual prerequisite for faculty positions in many fields.”

Scientists in fields like robotics drop out of academia at higher rates, likely to pursue lucrative careers in the private sector. But others, like astronomers, have fewer career options outside of academia and are more likely to stay.

In recent decades, academic institutions have been granting an increasing number of PhD degrees, but that rise hasn’t been “accompanied by a similar increase in the number of academic positions.” This has led to “concerns about the lack of opportunities” for scientists, and even “warnings regarding possible scientific workforce bubbles.”

Milojević said the paper doesn’t offer a solution, but that academia might be able to retain more scientists if the government allocated more resources to pure research.

“In the end, I think these issues will need to be addressed at the policy level,” she said. “This study doesn’t provide a solution, but it shows that the number of scientists leaving academia isn’t slowing down.”

Academic vs. industry research

The study doesn’t suggest fewer people are trying to become professional scientists, but rather that an increasing share are ditching academia for the private sector. And that’s not all bad; good science and research are still conducted within the confines of industry.

But academic research differs from industry research in a key way. In industry, research is almost always aimed at making a profit, and is therefore aligned with market forces. In academia, however, the incentives are more focused on prestige and problem-solving.

Great achievements have come from academic research that didn’t begin with a profit-seeking motive. The technological ability to click on this article, for instance, arguably stems from the academic work of M.I.T. scientist Vannevar Bush, who in 1945 outlined a vision for hypertext and a rudimentary version of the internet in his famous essay ‘As We May Think’.

Still, it’s not always clear from the perspective of investors and governments that it’s worth investing in research that won’t necessarily yield anything of immediate economic or societal value. But those inclined to pursue research for its own sake, or for the belief that it’ll someday change the world, argue that we can’t always predict the big changes produced by seemingly small achievements.

Albert Einstein is often credited with putting it another way: “If we knew what it was we were doing, it would not be called research, would it?”