“Mind-Reading” Technology Has Been Developed by Purdue Scientists

We’ve all seen sci-fi films where a leader of the resistance is strapped into a brain-scanning machine. Though the captive can fight it, he can’t hold out forever. Sooner or later his captors will extract the location of the rebel base, and the jig is up. This trope is useful for ratcheting up tension and moving the plot along. Yet in real life, we often dismiss such devices as flights of fancy.

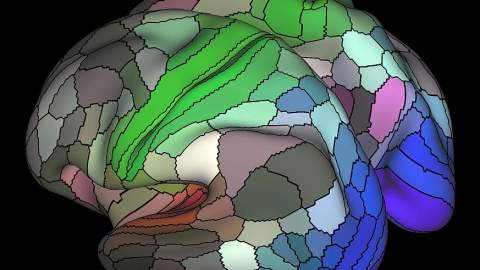

But consider that every thought we have travels down a certain neural pathway, with a pattern or signature that can be picked up, identified, and even cataloged. Anyone who could read those patterns could decipher your very thoughts. So how far away are we from a mind-reading machine? Scientists are getting there. They’re beginning to decipher these patterns in the visual cortex using an fMRI machine paired with advanced A.I.

Researchers at Purdue University recently used a form of artificial intelligence to “read” people’s minds. How did they do it? First, three female subjects were each placed in an fMRI machine. From inside, they watched videos on a screen depicting animals, people, and natural scenes while their brains were scanned. The scientists collected 11.5 hours of fMRI data in total. At first, the “deep learning” program had access to both the videos and the brain data recorded while the subjects watched them. This helped train it.

From left, doctoral student Haiguang Wen and former graduate student Junxing Shi prepare to test a graduate student. Credit: Purdue University image/Ed Lausch.

Then the A.I. used this data to predict what brain activity would take place in a subject’s visual cortex, depending on the scene depicted. As it went on, the deep learning program decoded the fMRI data and placed each image signature into a specific category. This helped scientists hunt down the regions of the brain responsible for each kind of visual. Soon, the deep learning program could say what object a person was seeing from their brain scan data alone.
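To make the idea of sorting brain signatures into categories concrete, here is a minimal sketch in Python. It is not the Purdue team's method, which relied on a deep neural network and real fMRI recordings. It assumes purely synthetic "voxel" responses and swaps in a much simpler technique, a nearest-centroid classifier. The names `fit_centroids`, `decode`, and `true_patterns` are hypothetical and exist only for illustration.

```python
import numpy as np

def fit_centroids(responses, labels):
    """Average the training responses recorded for each category."""
    labels = np.asarray(labels)
    categories = sorted(set(labels.tolist()))
    centroids = np.stack([responses[labels == c].mean(axis=0) for c in categories])
    return categories, centroids

def decode(response, categories, centroids):
    """Return the category whose average pattern is closest to this response."""
    distances = np.linalg.norm(centroids - response, axis=1)
    return categories[int(np.argmin(distances))]

# Synthetic stand-in for fMRI data (an assumption, not real measurements):
# each category gets its own random activity pattern across 50 "voxels".
rng = np.random.default_rng(0)
n_voxels = 50
true_patterns = {name: rng.normal(size=n_voxels)
                 for name in ("animal", "person", "scene")}

# Training phase: 20 noisy "scans" per category, with known labels.
train_labels = [name for name in true_patterns for _ in range(20)]
train_responses = np.stack([
    true_patterns[name] + 0.3 * rng.normal(size=n_voxels)
    for name in train_labels
])
categories, centroids = fit_centroids(train_responses, train_labels)

# Decoding phase: a new noisy scan, with no access to what was shown.
new_scan = true_patterns["person"] + 0.3 * rng.normal(size=n_voxels)
print(decode(new_scan, categories, centroids))
```

The two phases mirror the description above: the program first sees brain data alongside known labels, then is asked to name the category from a new scan alone.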

Researchers then had the A.I. program reconstruct the videos without any access to them, from subjects’ brain scans alone. It did so flawlessly. These findings should give us a better understanding of the brain and how it functions. They should also help develop more advanced forms of A.I. The results of this study were published in the journal Cerebral Cortex.

Zhongming Liu is an assistant professor in Purdue’s Weldon School of Biomedical Engineering and School of Electrical and Computer Engineering. He’s been studying the brain for nearly two decades. But it wasn’t until he started using A.I. that he made major progress. Dr. Liu told Futurism, “Reconstructing someone’s visual experience is exciting because we’ll be able to see how your brain explains images.”

Doctoral student Haiguang Wen, the lead researcher, explained a little more about their approach. “A scene with a car moving in front of a building is dissected into pieces of information by the brain. One location in the brain may represent the car; another location may represent the building.”

Wen went on, “Using our technique, you may visualize the specific information represented by any brain location, and screen through all the locations in the brain’s visual cortex. By doing that, you can see how the brain divides a visual scene into pieces, and re-assembles the pieces into a full understanding of the visual scene.”

From left, doctoral student Haiguang Wen, assistant professor Zhongming Liu and former graduate student Junxing Shi, review fMRI data of brain scans. Credit: Purdue University image/Ed Lausch.

The type of “deep learning” algorithm used is called a convolutional neural network. Previously, such networks had been used to isolate patterns associated with still images in the brain. They also power facial and object recognition software. This experiment, however, was the first time such a technique was used with videos and natural scenes, bringing researchers one step closer to the ultimate goal: evaluating the brain in a dynamic situation in real time.
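For readers curious what the "convolutional" part means, the sketch below shows the basic operation such a network repeats many times: sliding a small filter across an image to produce a map of where a feature appears. This is a toy illustration, not the network used in the study. The function `convolve2d`, the tiny image, and the hand-picked edge filter are all assumptions made for the example; a real network learns its filters from data.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide the kernel over the image and record the response at each position.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# A 5x6 toy image: dark (0) on the left, bright (1) on the right.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# A hand-made filter that responds where brightness jumps from left to right.
kernel = np.array([[-1.0, 1.0]])

feature_map = convolve2d(image, kernel)
print(feature_map)
```

The resulting map is zero everywhere except along the column where the dark region meets the bright one, which is the kind of simple feature that early layers of such networks tend to pick out.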

If you’re afraid that some scary institution is going to kidnap you, strap you down, and scan your brain, understand that such research is still in its very early stages. It took a long time for scientists to reach this point. And according to Carnegie Mellon’s Dr. Marcel Just, you can fight it simply by refusing to cooperate.

There are other limits too. “Because brains are so complex,” Dr. Liu said, “it’s hard to ask the A.I. to understand the brain given that we still don’t fully understand (it) ourselves.” So we’ve entered into a strange virtuous cycle, where A.I. informs us about the brain, which in turn helps to improve A.I.
