Has Google Actually Achieved ‘Quantum Supremacy’ With Its New Quantum Computer?

A fully programmable quantum computer that can outperform any classical computer is right at the edge of today’s technology.

Earlier this month, a new story leaked out: Google, one of the leading companies invested in the endeavor of quantum computing, claims to have just achieved Quantum Supremacy. While our classical computers — like laptops, smartphones and even modern supercomputers — are extraordinarily powerful, there are many scientific questions whose complexity goes far beyond their brute-force capabilities to calculate or simulate.

But if we could build a powerful enough quantum computer, it’s possible that many problems that are impractical to solve with a classical computer would suddenly be solvable with a quantum computer. This idea, that quantum computers could efficiently solve a computation that a classical computer can only solve inefficiently, is known as Quantum Supremacy. Has Google actually done that? Let’s dive into the problem and find out.

The idea of a classical computer is simple, and goes back to Alan Turing and the concept of a Turing machine. With information encoded into bits (i.e., 0s and 1s), you can apply a series of operations (such as AND, OR, NOT, etc.) to those bits to perform any arbitrary computations you like. Some of those computations might be easy; others might be hard; it depends on the problem. But, in theory, if you can design an algorithm to successfully perform a computation, no matter how computationally expensive it is, you can program it into a classical computer.

However, a quantum computer is a little bit different. Instead of regular bits, which are always either 0 or 1, a quantum computer uses qubits, or the quantum analog of bits. As with most things, going to the quantum world from the classical world means we need to change how we view this particular physical system.

Instead of recording a 0 or 1 permanently as a bit, a qubit is a two-state quantum mechanical system, where the ground state represents 0 and the excited state represents 1. (For example, an electron can be spin up or spin down; a photon can be left-handed or right-handed in its polarization, etc.) When you prepare your system initially, as well as when you read out the final results, you’ll see only 0s and 1s for the values of qubits, just like with a classical computer and classical bits.

But unlike a classical computer, when you’re actually performing these computational operations, the qubit isn’t in a determinate state, but rather lives in a superposition of 0s and 1s: similar to the simultaneously part-dead and part-alive Schrodinger’s cat. It’s only when the computations are over, and you read out your final results, that you measure what the true end-state is.

There’s a big difference between classical computers and quantum computers, and it comes down to prediction, determinism and probability. As with all quantum mechanical systems, you cannot simply provide the initial conditions of your system and the sequence of operators that act on it and then predict what the final state will be. Instead, you can only predict the probability distribution of the final state, and only by performing the critical experiment over and over again can you hope to reproduce that expected distribution.
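To make this concrete, here’s a minimal Python sketch of a single qubit being measured many times. The amplitudes (chosen so that 0 should appear about 80% of the time), the function name `measure`, and the fixed random seed are all illustrative assumptions, not anything taken from Google’s experiment.

```python
import math
import random
from collections import Counter

def measure(amplitudes, shots, seed=0):
    """Sample measurement outcomes from a list of amplitudes.

    Each outcome i is drawn with probability |amplitude_i|^2 (the Born rule).
    """
    probabilities = [abs(a) ** 2 for a in amplitudes]
    rng = random.Random(seed)
    outcomes = rng.choices(range(len(amplitudes)), weights=probabilities, k=shots)
    return Counter(outcomes)

# Hypothetical qubit state: amplitude sqrt(0.8) for 0 and sqrt(0.2) for 1.
state = [math.sqrt(0.8), math.sqrt(0.2)]

shots = 10_000
counts = measure(state, shots)
frequency_of_zero = counts[0] / shots
print(counts, frequency_of_zero)
```

Any single run yields just a 0 or a 1; only the tally over many runs reveals the underlying distribution.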

You might think that you need a quantum computer to simulate quantum behavior, but that’s not necessarily true. You can simulate quantum behavior on a quantum computer, but you should also be able to simulate it on a Turing machine: i.e., a classical computer.

This is one of the most important ideas in all of computer science: the Church-Turing thesis. It states that if a problem can be solved by any computational device, it can also be solved by a Turing machine. That computational device could be a laptop, smartphone, supercomputer or even a quantum computer; a problem that could be solved by one such device should be solvable on all of them. This is generally accepted, but it tells you nothing about the speed or efficiency of that computation, nor about Quantum Supremacy in general.

Instead, there’s another step that’s much more controversial: the extended Church-Turing thesis. It states that a Turing machine (like a classical computer) can always efficiently simulate any computational model, including an inherently quantum computation. If you could provide a counterexample to this, by demonstrating even one case where a quantum computer is vastly more efficient than a classical computer, that would mean that Quantum Supremacy has been demonstrated.

This is the goal of many teams working independently: to design a quantum computer that can out-perform a classical computer by a significant margin under at least one reproducible condition. The key to understanding how this is possible is the following: in a classical computer, you can subject any bit (or combination of bits) of information to a number of classical operations. This includes operations you’re familiar with, such as AND, OR, NOT, etc.

But if you have a quantum computer, with qubits instead of bits, you’ll have a number of purely quantum operations you can perform in addition to the classical ones. These quantum operations obey particular rules that could be simulated on a classical computer, but only at great computational expense. On the other hand, they can be easily simulated by a quantum computer on one condition: that the time it takes to perform all of your computational operations is short enough compared to the coherence time of the qubits.

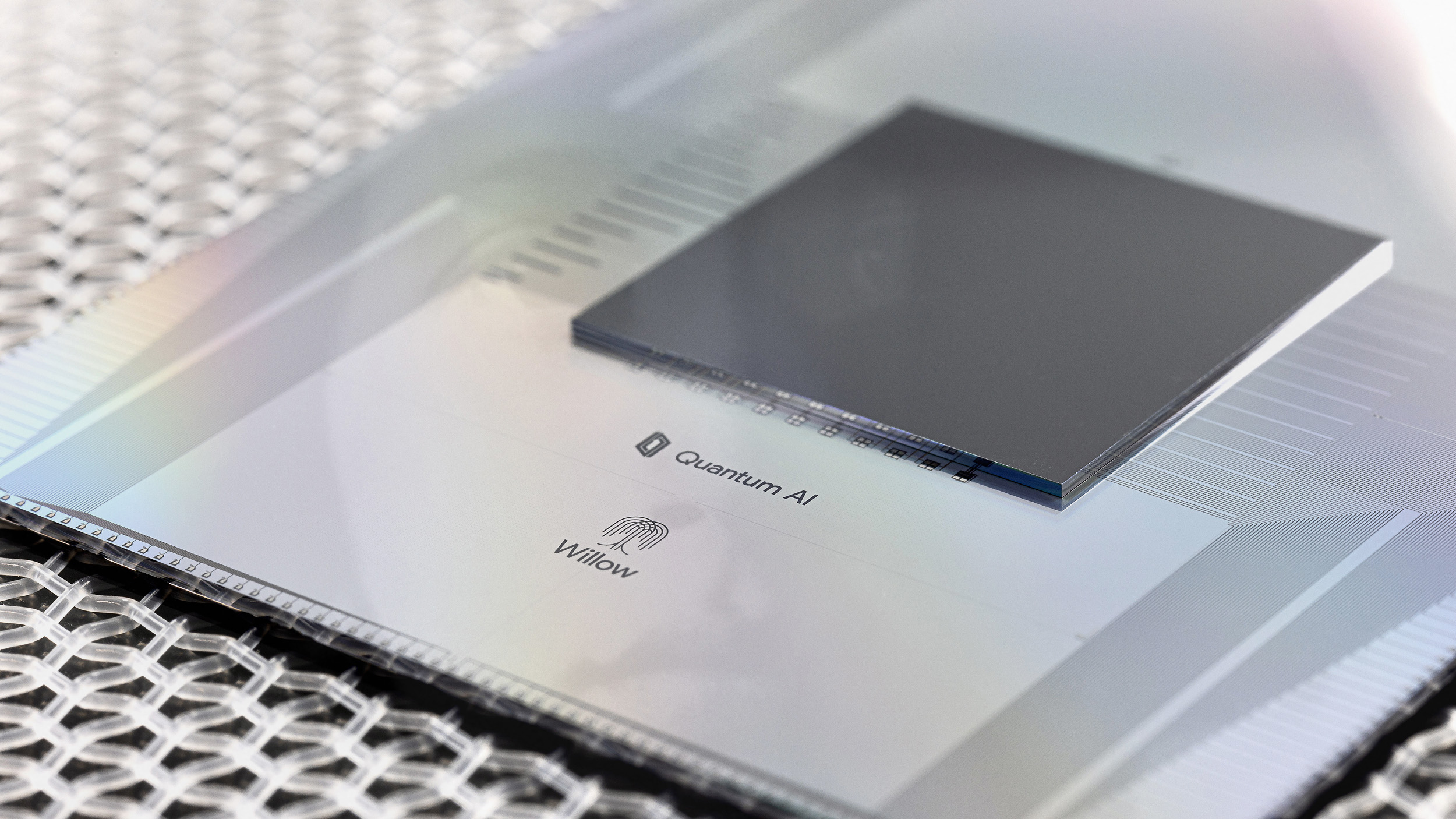

With all this in mind, the Google team had a paper that was briefly posted to NASA’s website (likely an early draft of what the final paper will be) that was later removed, but not before many scientists had a chance to read and download it. While the implications of their accomplishments have not yet been fully sorted out, here’s how you can imagine what they did.

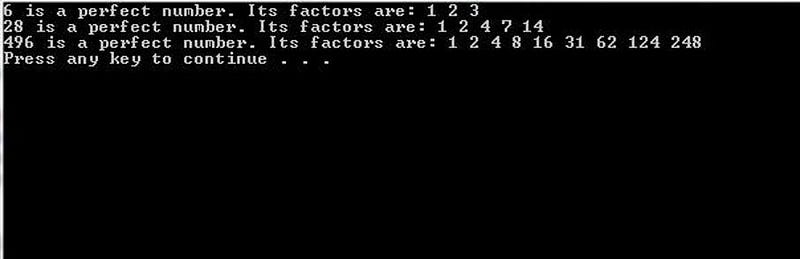

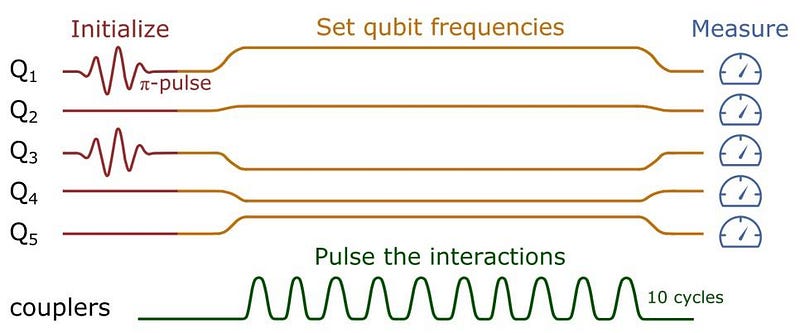

Imagine you have 5 bits or qubits of information: 0 or 1. They all start in a 0 state, but you prepare a state where two of these bits/qubits are excited to be in the “1” state. If your bits or qubits are perfectly controlled, you can prepare that state explicitly. For example, you can excite bit/qubit numbers 1 and 3, in which case your system’s physical state will be |10100>. You can then “pulse in” random operations to act on these bits/qubits, and you expect that what you’ll get is a specific probability distribution for the outcome.

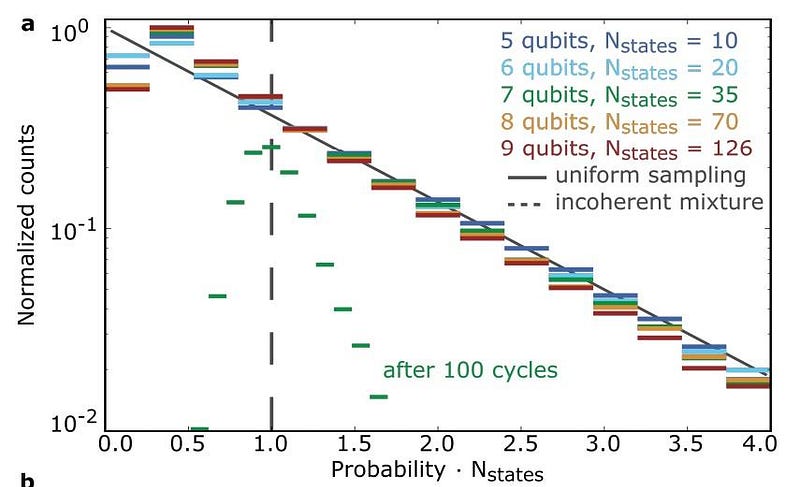

The Google team chose a particular protocol for their experiment attempting to achieve Quantum Supremacy, demanding that the total number of excited bits/qubits (or the number of 1s) must be preserved after the application of an arbitrary number of operations. These operations are completely random, meaning that which bits/qubits are excited (1) and which are in the ground state (0) is free to vary; for the five-qubit example, you’d always need two “1” states and three “0” states. If you didn’t have truly random operations, and if you didn’t have the purely quantum operations encoded in your computer, you’d expect that all 10 of the possible final states would appear with equal probability.

(The ten possibilities are |11000>, |10100>, |10010>, |10001>, |01100>, |01010>, |01001>, |00110>, |00101>, and |00011>.)
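Counting these states is a simple combinatorics exercise: choose which 2 of the 5 positions hold a “1”. A short Python sketch (the function name `fixed_weight_states` is just an illustrative choice) reproduces the list:

```python
from itertools import combinations

def fixed_weight_states(n_qubits, n_excited):
    """List every bitstring of length n_qubits with exactly n_excited ones."""
    states = []
    for excited in combinations(range(n_qubits), n_excited):
        bits = ["1" if i in excited else "0" for i in range(n_qubits)]
        states.append("".join(bits))
    return states

states = fixed_weight_states(5, 2)
print(len(states))
print(states)
```

With 5 qubits and 2 excitations this gives 10 states, so a flat distribution would assign each one a probability of 10%.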

But if you have a quantum computer that behaves as a true quantum computer, you won’t get a flat distribution. Instead, some states should occur more frequently in a final-state outcome than the others, and others should be very infrequent. This is a counterintuitive aspect of reality that only arises from quantum phenomena, and the existence of purely quantum gates. We can simulate this phenomenon classically, but only at great computational cost.

If we only applied the allowable classical gates, even with a quantum computer, we wouldn’t get the quantum effect out. Yet we can clearly see that the probability distribution we actually get isn’t flat, but that some possible end states are much more likely than the 10% you’d naively expect, and some are far less likely. The existence of these ultra-low and ultra-high probability states is a purely quantum phenomenon, and the odds that you’ll get these low-probability and high-probability outcomes (instead of a flat distribution) is an important signature of quantum behavior.
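One way to get a feel for this is to draw a random quantum state over the ten allowed outcomes and look at its probabilities. The sketch below is only a stand-in: it assumes that a long sequence of random operations leaves the system in an effectively random state within those ten outcomes. It does not simulate Google’s actual circuit, and the function name and seed are hypothetical.

```python
import random

def random_state_probabilities(dim, seed=1):
    """Outcome probabilities of a random normalized state in `dim` dimensions.

    Complex Gaussian amplitudes, once normalized, give a uniformly random
    direction in the space of quantum states.
    """
    rng = random.Random(seed)
    amplitudes = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)]
    norm = sum(abs(a) ** 2 for a in amplitudes)
    return [abs(a) ** 2 / norm for a in amplitudes]

probabilities = random_state_probabilities(10)
for p in sorted(probabilities, reverse=True):
    print(f"{p:.3f}")
```

The ten numbers sum to 1, but they scatter around 10% rather than sitting on it: a few outcomes come out well above that value and a few well below.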

In the field of quantum computing, the probabilities of the individual final states, including how often a very low-probability state should turn up, are expected to follow a specific probability distribution: the Porter-Thomas distribution. If your quantum computer were perfect, you could perform as many operations as you wanted for as long as you wanted, and then read out the outcomes to see if your computer followed the Porter-Thomas distribution, as expected.
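For reference, the Porter-Thomas distribution has a simple form in the limit of many possible outcomes. Here $N$ denotes the number of possible final states and $p$ the probability of any one of them; these symbols are introduced for illustration and are not taken from the Google paper.

$$
\Pr(p)\,dp = N e^{-Np}\,dp, \qquad \langle p \rangle = \frac{1}{N}, \qquad \int_0^{1/N} N e^{-Np}\,dp = 1 - e^{-1} \approx 0.63
$$

In words: roughly 63% of the final states are less likely than the flat-distribution value of $1/N$, while a minority of states are considerably more likely. That lopsided spread is the signature being tested for.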

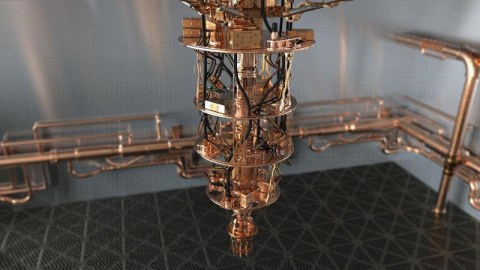

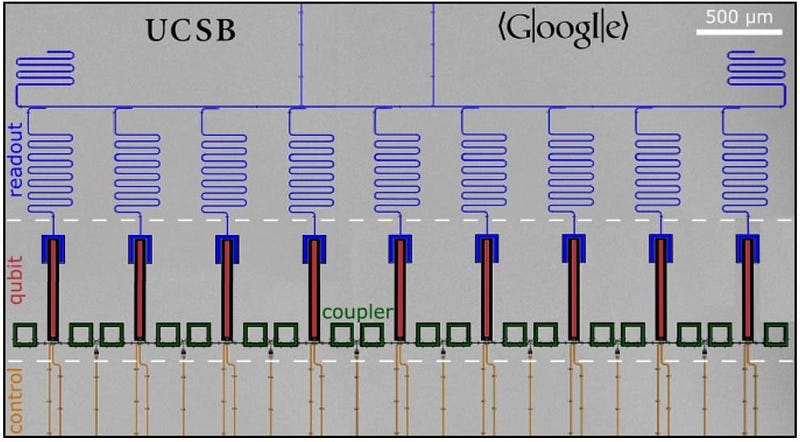

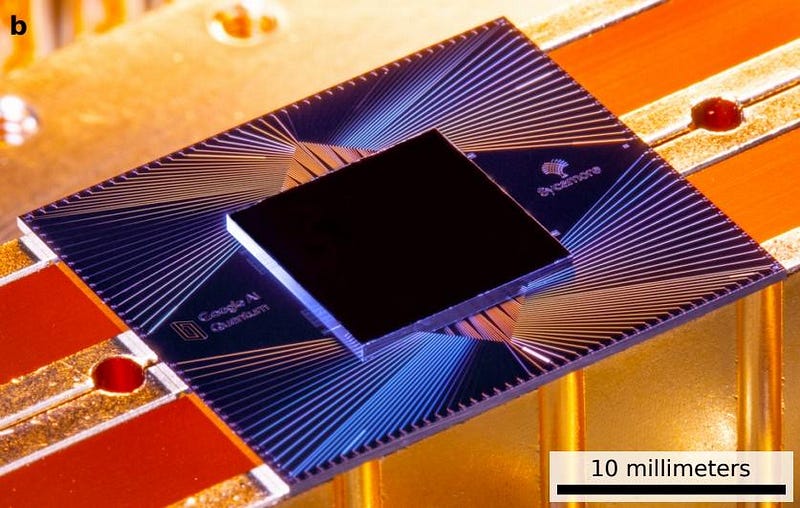

Practically, though, quantum computers aren’t perfect. Any quantum system, no matter how it’s prepared (the Google team used superconducting qubits, but other quantum computers, using quantum dots or ion traps, for example, are also possible), will have a coherence time: the amount of time you can expect a qubit prepared in an excited state (i.e., 1) to remain in that state. Beyond that time, it should decay back to the ground state, or 0.

This is important, because it requires a finite amount of time to apply a quantum operator to your system: known as gate time. The gate time must be very short compared to the coherence timescale, otherwise your state might decay and your final state won’t give you the desired outcome. Also, the more qubits you have, the greater the complexity of your device and the higher the probability of error-introducing crosstalk between qubits. In order to have an error-free quantum computer, you must apply all of your quantum gates to the full suite of qubits before the system decoheres.

Superconducting qubits remain stable only for ~50 microseconds. With a gate time of ~20 nanoseconds, that ratio allows roughly 2,500 gate times in principle, but because errors accumulate with every gate and every additional qubit, in practice you can only expect to perform a few dozen rounds of operations, at most, before decoherence ruins your experiment and gives you the dreaded flat distribution, losing the quantum behavior you worked so hard to obtain.
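The arithmetic behind these timescales can be sketched in a few lines of Python. The first number is just the ratio of the two timescales quoted above. The second uses a deliberately crude model, assumed here for illustration only, in which each qubit independently decays exponentially with the coherence time and nothing else goes wrong.

```python
import math

coherence_time_s = 50e-6  # ~50 microseconds, as quoted above
gate_time_s = 20e-9       # ~20 nanoseconds, as quoted above

# How many gate times fit inside one coherence time for a single qubit.
gate_budget = coherence_time_s / gate_time_s

def survival_probability(n_qubits, n_layers):
    """Chance that none of n_qubits has decayed after n_layers of gates.

    Toy model (an assumption): independent exponential decay per qubit,
    with no gate errors or crosstalk included.
    """
    elapsed = n_layers * gate_time_s
    return math.exp(-n_qubits * elapsed / coherence_time_s)

print(round(gate_budget))
print(survival_probability(53, 25))
```

A single qubit has room for about 2,500 gate times, but with 53 qubits the chance that all of them survive 25 layers is already down to roughly 0.59 in this toy model, before counting gate errors or crosstalk. That is why the practical budget is so much smaller than the raw ratio suggests.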

The problem that the Google scientists solved with their 53-qubit computer was not a useful problem in any regard. In fact, the setup was specifically engineered to be easy for quantum computers and computationally very expensive for classical ones. The way they finessed this was to make a system of n qubits, which requires on the order of 2^n bits of memory on a classical computer to simulate, and to pick operations that are as computationally expensive as possible for a classical computer.
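To see how quickly that 2^n scaling gets out of hand, here is a small Python sketch. It assumes the most direct simulation strategy, storing one complex amplitude per possible state, and assumes 16 bytes per amplitude (two 64-bit floating-point numbers); both are illustrative assumptions rather than details of any particular supercomputer simulation.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude

PEBIBYTE = 2 ** 50  # bytes

for n in (30, 40, 53):
    print(n, state_vector_bytes(n) / PEBIBYTE, "PiB")
```

Under those assumptions, 30 qubits need 16 GiB, which fits on a well-equipped laptop, while 53 qubits need 128 PiB. Each added qubit doubles the requirement.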

The original algorithm put forth by a collaboration of scientists, including many on the current Google team, required a 72-qubit quantum computer to demonstrate Quantum Supremacy. Because the team couldn’t achieve that just yet, they went back to the 53-qubit computer, but replaced an easy-to-simulate quantum gate (CZ) with another quantum gate: the fSim gate (which is a combination of the CZ with the iSWAP gate), which is more computationally expensive to simulate for a classical computer.
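For readers who want to see what such a gate looks like, here is a sketch of a two-qubit fSim matrix in plain Python. The parametrization (a swap angle `theta` and a conditional phase `phi`) and its sign conventions follow one commonly used form and should be treated as assumptions; the helper names are hypothetical.

```python
import cmath
import math

def fsim(theta, phi):
    """4x4 fSim matrix in the basis |00>, |01>, |10>, |11> (assumed convention)."""
    c = math.cos(theta)
    s = -1j * math.sin(theta)
    return [
        [1, 0, 0, 0],
        [0, c, s, 0],
        [0, s, c, 0],
        [0, 0, 0, cmath.exp(-1j * phi)],
    ]

def is_unitary(matrix, tol=1e-12):
    """Check that the rows of the matrix are orthonormal."""
    n = len(matrix)
    for i in range(n):
        for j in range(n):
            dot = sum(matrix[i][k] * complex(matrix[j][k]).conjugate() for k in range(n))
            expected = 1 if i == j else 0
            if abs(dot - expected) > tol:
                return False
    return True

cz_like = fsim(0.0, math.pi)            # no swapping, phase of -1 on |11>
swap_like = fsim(math.pi / 2, 0.0)      # full exchange of |01> and |10>
print(is_unitary(fsim(0.3, 0.7)))
```

In this form the gate only mixes |01> with |10>, so it never changes the number of 1s. Setting `theta` to zero and `phi` to π recovers the CZ gate, while `theta` of π/2 with zero `phi` gives an iSWAP-like exchange.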

There is a long-shot hope for those who want to preserve the extended Church-Turing thesis: perhaps with a clever enough computational algorithm, we could lower the computational time for this problem on a classical computer. This seems unlikely, but it’s the one scenario that could revoke what appears to be the first achievement of Quantum Supremacy.

For now, though, the Google team appears to have achieved Quantum Supremacy for the first time: by solving this one particular (and probably not practically useful) mathematical problem. They performed this computational task with a quantum computer in a much faster time than even the biggest, most powerful (classical) supercomputer in the country could. But achieving useful Quantum Supremacy would enable us to:

- make high-performance quantum chemistry and quantum physics calculations,

- replace all classical computers with superior quantum computers,

- and to run Shor’s algorithm for arbitrarily large numbers.

Quantum Supremacy may have arrived; useful Quantum Supremacy is still far off from being achieved. For example, if you wanted to factor a 20-digit semiprime number, Google’s quantum computer cannot solve this problem at all. Your off-the-shelf laptop, however, can do this in milliseconds.
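As an illustration of the laptop side of that comparison, here is a sketch using Pollard’s rho method, one standard classical factoring technique. The choice of algorithm and the two example primes are assumptions made for illustration. Pure Python is also much slower than compiled code, so this shows that the task is easy, not how fast it can be done.

```python
from math import gcd

def pollard_rho(n):
    """Return a nontrivial factor of a composite number n (Pollard's rho)."""
    if n % 2 == 0:
        return 2
    for c in range(1, 20):
        x = y = 2
        d = 1
        while d == 1:
            x = (x * x + c) % n      # "tortoise" takes one step
            y = (y * y + c) % n      # "hare" takes two steps
            y = (y * y + c) % n
            d = gcd(abs(x - y), n)
        if d != n:
            return d                 # found a proper factor
    raise ValueError("no factor found; try more values of c")

# Two 10-digit primes chosen for illustration; their product has 20 digits.
semiprime = 1000000007 * 9999999967

factor = pollard_rho(semiprime)
cofactor = semiprime // factor
print(semiprime, "=", factor, "x", cofactor)
```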

Progress in the world of quantum computing is astounding, and despite the claims of its detractors, systems with greater numbers of qubits are undoubtedly on the horizon. When successful quantum error-correction arrives (which will certainly require many more qubits, along with solving a number of other issues), we’ll be able to extend the coherence timescale and perform even more in-depth calculations. As the Google team themselves noted,

Our experiment suggests that a model of computation may now be available that violates [the extended Church-Turing thesis]. We have performed random quantum circuit sampling in polynomial time with a physically realized quantum processor (with sufficiently low error rates), yet no efficient method is known to exist for classical computing machinery.

With the creation of the very first programmable quantum computer that can efficiently perform a calculation on qubits that cannot be efficiently carried out on a classical computer, Quantum Supremacy has officially arrived. Later this year, the Google team will surely publish this result and be lauded for their extraordinary accomplishment. But our biggest dreams of quantum computing are still a long way off. It’s more important than ever, if we want to get there, to keep on pushing the frontiers as fast and far as possible.

Additional resources and information can be found from Quanta Magazine, the Financial Times, Scott Aaronson, and this 2017 publication.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.