Déjà vu is a neurological phenomenon, scientists claim

Have you ever experienced déjà vu? If so, you are among the 60-70% of the population who have. The majority of those who report déjà vu are between the ages of 15 and 25. I’m a complete rationalist who believes that every phenomenon, no matter how strange or supernatural it appears, has a scientific reason behind it. Even so, I’ve met several people and walked into a few situations where, though I had never been there before or met the person before, I suddenly felt awash in a bizarre familiarity. This transcendental sensation can shake beliefs such as mine right to their very core.

But I wasn’t ready to denounce science yet. And I’m glad. Because it turns out, there is a rational explanation. Though some radical notions have in the past been connected to this strange feeling, such as déjà vu being a momentary alignment with a past life or with another you in a parallel universe, or, as my editor suggests, a glitch in the matrix, scientists now believe it has a neurological basis.

Unfortunately, the feeling is here one minute and gone the next, making it difficult to study. Even so, there are quite a few theories on what causes it. One traditional hypothesis, posited by psychiatrists, is mismatched brain signals. For a second it feels as though we are transported to a moment in the past and we mistake it for the present. This may be why it’s been associated with the idea of reincarnation.

Another theory is that déjà vu is our brain trying to piece together a situation from limited information. A third states that it is a misfiring in the parts of the brain that recall memory and decipher sensory input. Sensory information, rather than taking the proper channels, leaks out of short-term memory and into long-term memory. In this way, current experiences seem to be connected to the past. Some studies even suggest that familiar geometric shapes give us a sense of knowing something about a place that is, in reality, totally unfamiliar to us.

Instead of a glitch in the matrix, déjà vu may just be a glitch in our memory.

Since we are completely aware of everything that’s going on when we experience déjà vu, this suggests that every part of the brain need not participate for the sensation to take place. Psychologist Anne M. Cleary at Colorado State University, in a study in 2008, found that déjà vu followed patterns we associate with memory, specifically recognition memory. This is the kind that gets us to understand that we are confronting something that we’ve seen or experienced before. If you’ve ever recognized a landmark, a friend from across the room, or a song on the stereo, you’ve experienced recognition memory.

Familiarity-based recognition is one form of recognition memory. Here, we have that feeling of familiarity, but we can’t quite place where we’ve seen this person, place, or thing. For instance, you recognize someone across the street, but can’t remember their name or where you know them from. Prof. Cleary conducted several studies which found that déjà vu is a form of familiarity-based recognition. Her work suggests that our memory stores items in fragments. When there is a certain overlap between old and new experiences, we feel a strong sense of connection, which we interpret as déjà vu.

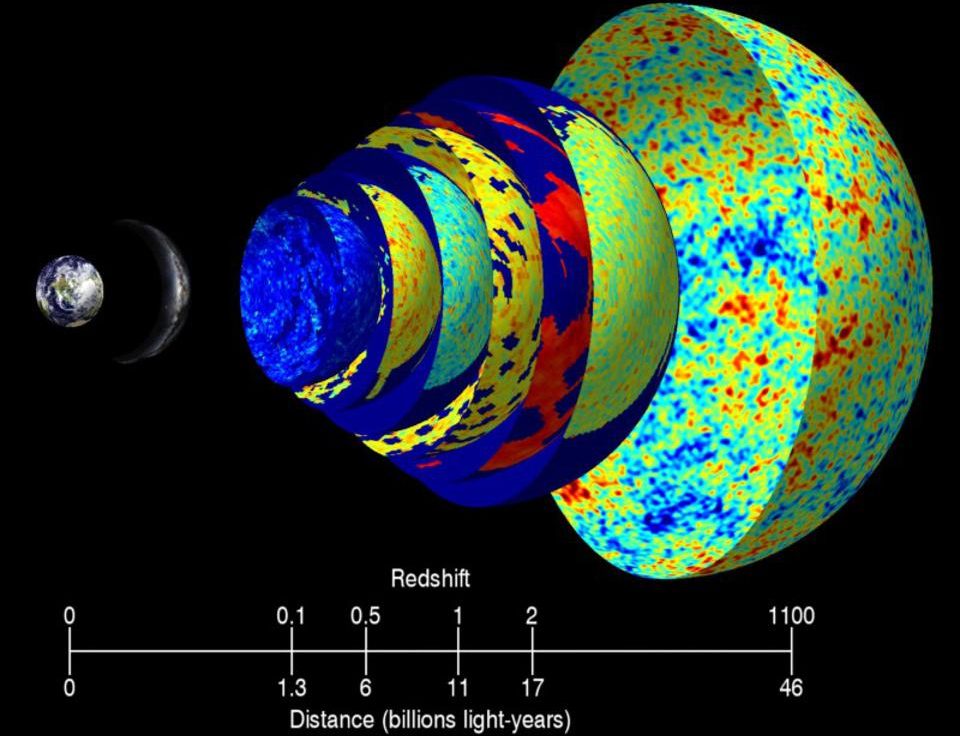

Recent studies looking at epileptic patients made impressive breakthroughs in our understanding of the phenomenon. Epileptics with certain intractable conditions require electrodes to be placed inside their brains in order to locate the source of their seizures. During this procedure, some neurologists have had patients experience déjà vu. They soon discovered that the phenomenon takes place in the medial temporal lobe, which is responsible for memory. The electrodes are usually placed within the rhinal cortex, which sits next to the hippocampus, the structure responsible for long-term memory formation. French scientists have found that firing current into this cortex can trigger an episode of déjà vu.

Location of the amygdala and the hippocampus. By OpenStax College [CC BY 3.0], Wikimedia Commons.

The French study, published in the journal Clinical Neurophysiology, measured EEG wave patterns from patients with epilepsy who experienced déjà vu through electrical stimulation. The areas of the brain they examined included the amygdala, which is responsible for emotion, and the hippocampus. Researchers found that electrical patterns, emanating from rhinal cortices and the amygdala or the hippocampus, caused déjà vu to occur. These neuroscientists believe that some sort of electrical phenomenon in the medial temporal lobe activates the memory in such a way that it causes déjà vu to occur.

Stranger still, scientists in the UK have actually found patients who experience “chronic déjà vu.” In this case, experts identified four senior citizens who encounter the feeling on a consistent basis. What is the impact of such a phenomenon? It made them feel as if they were clairvoyant. All four refused to go to the doctor, believing they already knew what the physician would say, and avoided watching the news, thinking they already knew the outcome. That’s because each time they took part in either activity, they came away with that same impression.

Each individual experienced some type of problem with the temporal lobe of their brain. The circuits in that area were in a sense stuck in the “on” position. It just goes to show that when we don’t know the reason for a phenomenon or sensation, our mind assigns a meaning to it. But that isn’t necessarily the correct one. And even though knowing the neurological basis of déjà vu may dispel the supernatural awe surrounding it, understanding the phenomenon better puts a scientific mind, like mine, at ease.
