The Irrational Risk of Thinking We Can Be Rational About Risk

In his groundbreaking 1995 book Descartes’ Error, neuroscientist Antonio Damasio describes Elliott, a patient who had no problem understanding information, but who nonetheless could not live a normal life. Elliott passed every standard intelligence test with flying colors. But he was dysfunctional because he was missing one thing: his cognitive brain couldn’t converse with his emotional brain. An operation to control violent seizures had severed the connection between Elliott’s prefrontal cortex, the area behind the forehead that plays a key role in making decisions, and the limbic area down near the brain stem, which is involved with emotions.

As a result, Elliott had the facts, but he couldn’t use them to make decisions. Without feelings to give the facts valence, they were useless. Indeed, Elliott teaches us that in the most precise sense of the word, facts are meaningless…just disconnected ones and zeroes in the computer until we run them through the software of how those facts feel. Of all the mounting evidence about human cognition that suggests we ought to be a little more humble about our ability to reason, no other finding has more significance, because it shows that no matter how smart we like to think we are, our perceptions are inescapably a blend of reason and gut reaction, intellect and instinct, facts and feelings. That means decisions and choices that feel right may sometimes fly in the face of the evidence. Nowhere is that more crucially true than in the way we perceive and respond to risk.

Because our perceptions rely on feelings as much as, or more than, on the facts, we sometimes get risk wrong. We’re more afraid of some risks than we need to be (child abduction, vaccines), and not as afraid of some as we ought to be (climate change, particulate air pollution), and that “Perception Gap” can be a risk in and of itself. Thanks to research by Paul Slovic and Baruch Fischhoff and many others, we know the specific psychological characteristics that make some risks feel scarier than others, the evidence notwithstanding. A natural risk (radiation from the sun) is less frightening than one which is human-made (radiation from nuclear power plant accidents). A risk we think we have some control over (driving) is less scary than one over which we feel powerless (flying). There are more than a dozen of these risk perception factors (see Ch. 3 of “How Risky Is It, Really? Why Our Fears Don’t Match the Facts”, available free online), and examples of how they can lead to a Perception Gap, and dangerous choices and behaviors, are common.

Yet despite all we know about the frailties of our risk perception system, and indeed about how the instinctive and subjective nature of cognition in general can lead to errors, many people, particularly intellectuals and academics and policy makers, maintain a stubborn post-Enlightenment confidence in the supreme power of rationality. They continue to believe that we can make the ‘right’ choices about risk based on the facts, that with enough ‘sound science’ evidence from toxicology and epidemiology and cost-benefit analysis, the facts will reveal THE TRUTH. At best this confidence is hopeful naivete. At worst, it is intellectual arrogance that denies all we’ve learned about the realities of human cognition. In either case, it’s dangerous, because as Elliott teaches us, our perceptions are subjective interpretations of the facts that sometimes irrationally fly in the face of the evidence.

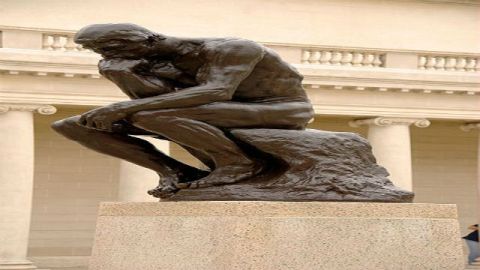

We have not evolved in the past several thousand years into pure Rationalists. We remain Affectives…Homo Naturalis, not the dispassionate, perfectly rational Homo Economicus. We need to heed the wisdom of Blaise Pascal, who observed, “We know the truth, not only by the reason, but by the heart.” Pascal spent the first part of his life as a brilliant rational mathematician and scientist, tutored in part by rationalist Descartes (“I think, therefore I am”) himself, but then turned into a religious philosopher. Pascal’s life perfectly sums things up. Head and Heart. Reason and Affect. Facts and Feelings. Thinking and Sensing. They are not separate. It’s not either/or. It’s and. We must understand that instinct and intellect are interwoven components of a single system that helps us perceive the world and make our judgments and choices, a system that worked fine when the risks we faced were simpler but which can make dangerous mistakes as we try to figure out some of the more complex dangers posed in our modern world.

So what are we to do? Elliott teaches us that we would be unwise to trust ourselves to always make the right calls when our reason is mixed with emotions and instincts not yet calibrated to handle the kinds of threats we now face. We can’t wait for evolution to work out the bugs, because we’re pretty clearly mucking things up so badly, so fast, that there isn’t time for that sort of patience.

What we can do to avoid the dangers that arise when our fears don’t match the facts, and the most rational thing to do, is first to recognize that our risk perceptions can never be purely, objectively, perfectly ‘rational’, and that our subjective perceptions are prone to potentially dangerous mistakes. We have to let go of our fealty to the mythical false God of Perfect Reason, and recognize the risk we face if we irrationally assume we can be rational about risk.

Then we can begin to apply all the details we’ve discovered of how our risk perception system works, and use that knowledge and self-awareness to make wiser, more informed, healthier choices for ourselves and our families, and for the wider communities to which we all belong.