Just Post It

Yesterday, the journal Psychological Science published online a paper by Uri Simonsohn (it’s behind a paywall, but you can access a free earlier version here). The paper calls on researchers to post their data so that the entire research process is transparent. There may be some reasonable exceptions to this rule, but it’s hard to argue that posting data should not be the default. Uri was kind enough to share a draft of his paper with me last summer, and I wrote about it on my personal blog. That article is re-posted below.

Many of the potential reforms that psychological science could introduce to improve practices in the field are controversial — their benefits are not always certain and they often carry substantial costs. I don’t think that a default rule to post the data alongside one’s publication is one of these; it’s a sensible step that should be widely adopted.

——————————————-

(First posted July 22nd, 2012)

A quick update on Simonsohn’s Just Post It paper: Friday’s post focused mostly on the steps Simonsohn took to avoid making false accusations, and it provided a link to the newly available paper so people could check out the details for themselves. I thought it was worth adding a little explanation for readers who are curious. Without going into great detail (anyone interested can read the paper), I think Simonsohn’s analysis of Smeesters’ study that used a Willingness to Pay (WTP) measure is easy to understand, and it illustrates why Smeesters is very unlikely to have merely dropped a few participants who didn’t follow directions (or even eliminated a handful of inconvenient data points), as he claims to have done. It also makes a strong case for Simonsohn’s call to post raw data. Note, however, that this analysis isn’t representative of the other analyses Simonsohn conducted.

Willingness to Pay is a measure psychologists adopted from economists. Participants state the maximum amount they would be willing to pay for some item, like a bottle of wine or a toaster, which provides a more concrete estimate of how much they value it than, for instance, rating how much they like it on a seven-point scale. Smeesters used a WTP measure in one of the studies Simonsohn analyzed, asking participants how much they would be willing to pay for a t-shirt. Simonsohn explains why the data looks suspicious:

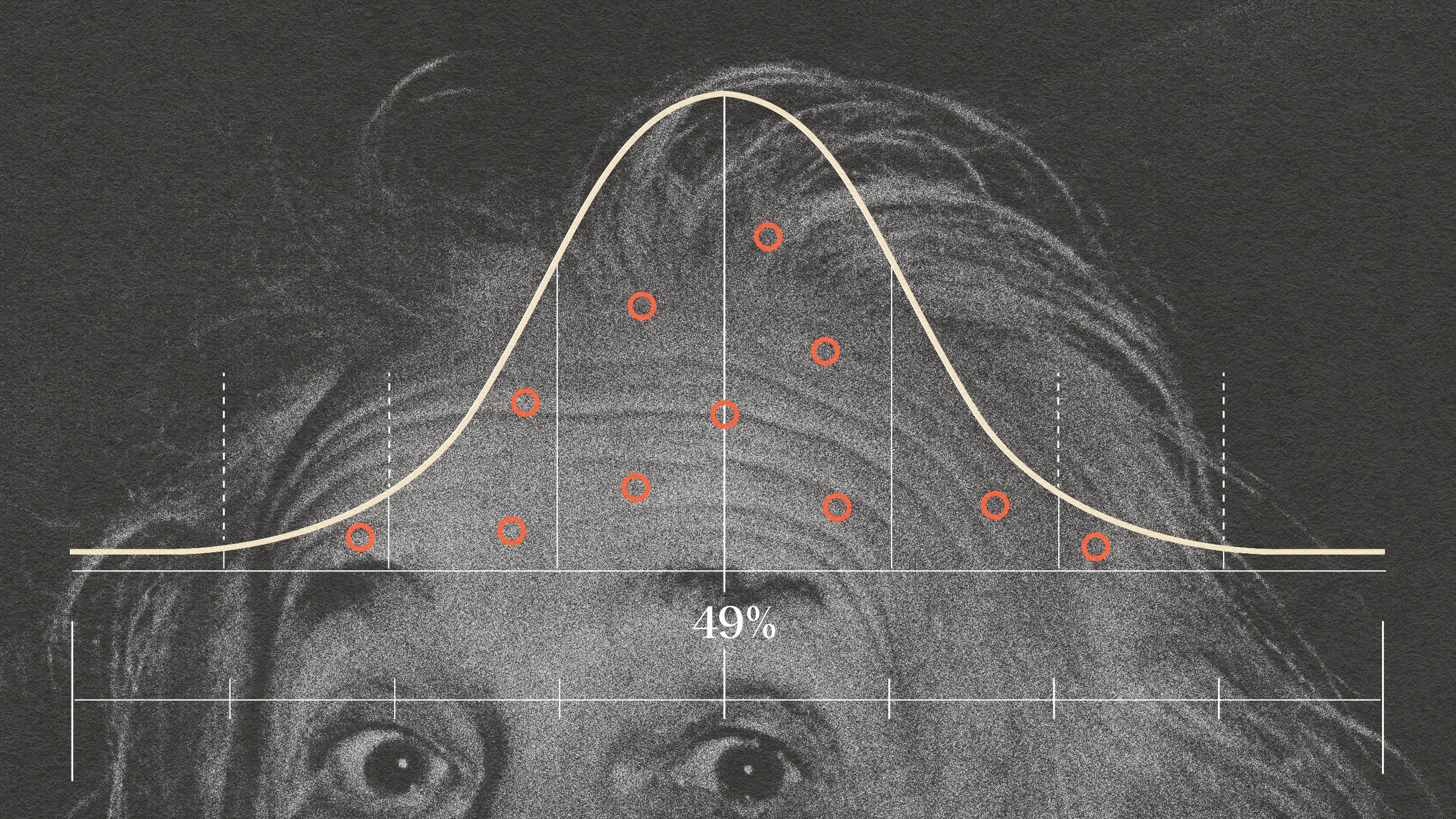

A striking pattern in the WTP data of the Smeesters study is the low frequency of valuations expressed as multiples of $5. Because people often round up or down when answering pricing questions, the percentage of such valuations is typically much higher than the rate expected if people answered randomly.

In other words, WTP data generated by real people should contain a lot of values that are multiples of $5, because people tend to round: they’re more likely to answer $10 or $25 than $9.87 or $25.32. Smeesters’ data doesn’t reflect this tendency and therefore appears not to have been reported by actual subjects. To confirm this suspicion, Simonsohn obtained the data from a number of other studies that used the WTP measure, in addition to re-running Smeesters’ study himself. In each of 29 studies, at least 50% of WTP valuations were multiples of $5; in the two studies conducted by Smeesters, that proportion was closer to the 20% expected by chance. This can be seen vividly in the graph below.

In the graph, Smeesters’ studies clearly stand out from the rest, more closely matching what one would expect to see if the valuations were chosen randomly (horizontal red line) than the trend line generated by all the other WTP studies. Note that the upward slope of that trend line reflects the fact that people become even more likely to round as valuations increase in size. By itself, this evidence is a strong indication that Smeesters’ data was not generated by actual subjects, but Simonsohn’s paper goes well beyond this analysis. In fact, the WTP analysis was a follow-up to other analyses, relying solely on the data Smeesters reported in published papers, that had initially aroused Simonsohn’s suspicion. The WTP analysis also makes a strong case for why, as Simonsohn argues, raw data should be made publicly available: with access to the raw data, it becomes much more difficult for someone to fabricate data, because the fabricated values would need to be internally coherent.
Examining the raw data from the WTP study not only shows that valuations in multiples of $5 are not over-represented, as they would be if actual subjects had generated them; it also reveals a very strange pattern of within-subject correlations.
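
To make the rounding check concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Simonsohn’s actual code or analysis: the function names are hypothetical, the 20 valuations are made-up toy numbers rather than data from any real study, and the exact binomial tail probability, computed against a 50% rate as a rough stand-in for the “at least 50%” observed in genuine WTP studies, is an illustrative choice of test rather than one taken from the paper.

```python
from math import comb

# Hypothetical toy valuations in dollars. These are made-up numbers for
# illustration only, NOT data from Smeesters' or any other real study.
TOY_VALUATIONS = [7, 12, 10, 13, 8, 19, 22, 14, 6, 25,
                  11, 17, 9, 16, 18, 23, 20, 4, 21, 15]

# If answers were whole-dollar amounts picked at random, exactly one in
# every five consecutive integers is a multiple of 5, so about 20% of
# answers would land on a multiple of $5 by chance.
CHANCE_RATE = 1 / 5


def count_multiples_of_5(valuations):
    """Count valuations that are exact multiples of $5."""
    # Convert to integer cents so values like 9.87 are not misjudged by
    # floating-point error when testing divisibility.
    cents = [round(v * 100) for v in valuations]
    return sum(1 for c in cents if c % 500 == 0)


def share_multiples_of_5(valuations):
    """Fraction of valuations that are exact multiples of $5."""
    return count_multiples_of_5(valuations) / len(valuations)


def binomial_lower_tail(k, n, p):
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


if __name__ == "__main__":
    n = len(TOY_VALUATIONS)
    k = count_multiples_of_5(TOY_VALUATIONS)
    print(f"multiples of $5: {k}/{n} = {k / n:.0%} (chance rate {CHANCE_RATE:.0%})")
    # How surprising would so few round numbers be if the true rate were 50%,
    # a rough stand-in for the 'at least 50%' seen in genuine WTP studies?
    # Assumes each valuation rounds independently of the others.
    print(f"P(X <= {k} | n={n}, p=0.5) = {binomial_lower_tail(k, n, 0.5):.4f}")
```

Run as a script, it reports 4 of the 20 toy valuations (20%) as multiples of $5, right at the chance rate, and a lower-tail probability of about 0.006 under the assumed 50% rate. The independence assumption behind the binomial test is a simplification; real analyses would also need to account for the range of valuations, since rounding becomes more common as amounts grow.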

Lastly, it’s worth briefly addressing the concern that, in publishing this paper, Simonsohn is providing a manual for would-be fraudsters. I don’t find this concern compelling, for at least two reasons. First, the analyses in Simonsohn’s paper do not come close to exhausting the analyses that could reveal fraud. This is why it’s particularly important that publishing raw data become the default: it’s very hard to fabricate data that truly resembles real data. Second, if we are not transparent about the methods we use to detect fraud, the whole enterprise risks looking like a witch hunt, which would make researchers less open to much-needed reforms. As I argue in this post about reforming social psychology, most psychologists are honest researchers with good intentions and respect for truth and the scientific process. Transparency about our detection methods is critical to assure people that any accusations of fraud have merit, that they have been exhaustively confirmed and re-confirmed, and that they are not being used like “a medieval instrument of torture” on researchers who didn’t realize they were doing anything wrong.

——————————————-

You can also read an interview that Ed Yong did with Uri for Nature, as well as Ed’s blog post on the subject. Christopher Shea also profiled Uri in the Atlantic Monthly last year. For more of my own writing on the subject, I’d start with Crimes and Misdemeanors.