The perpetual quest for a truth machine

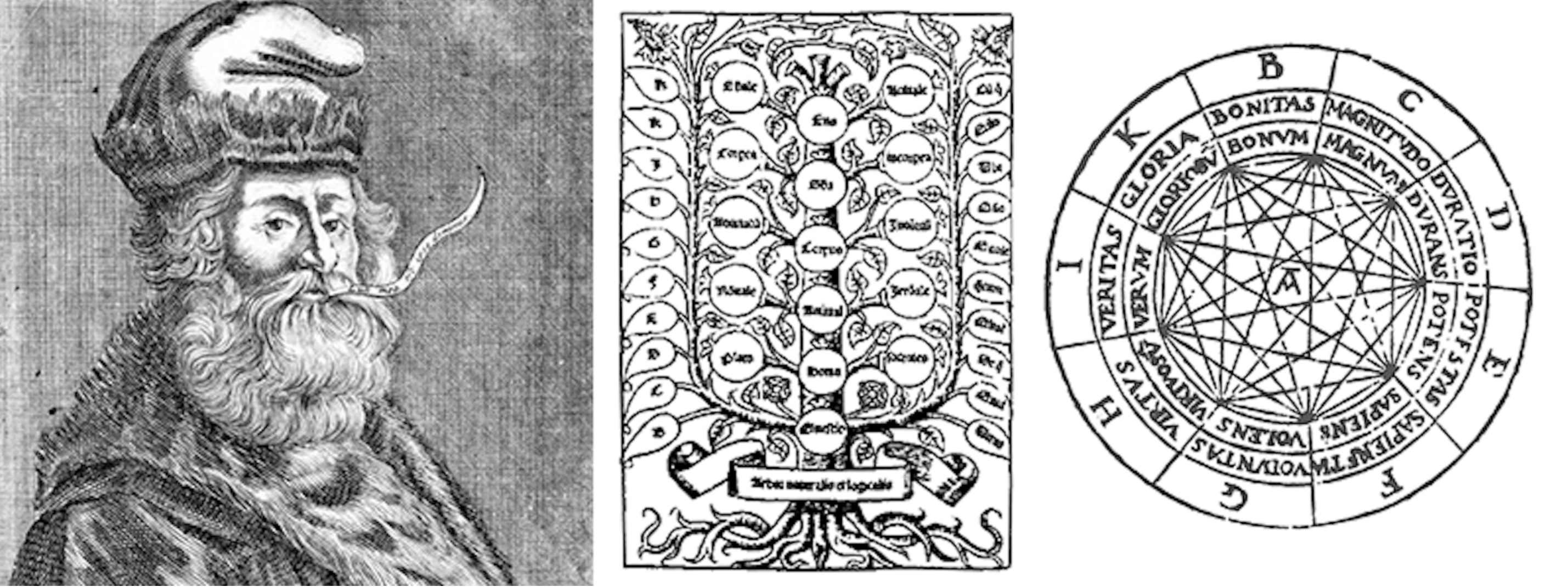

In the 13th century, the young married patrician Ramon Llull was living a licentious life in Majorca, lusting after women and squandering his time writing “worthless songs and poems.” His loose behavior, however, gave way to a series of divine revelations. His visions urged him to write what he believed would be the best book conceived by a mortal: a book that could converse with its readers and truthfully answer any question about faith.

It would be, in a sense, an early chatbot: a mechanical missionary that could be sent to the farthest reaches of humanity to convert any unbeliever with undeniable truths about the universe. Europeans had spent the past two centuries attempting to win hearts through the blood-drenched Crusades. Llull was determined to invent a linguistic device that would communicate a higher truth not through violence but through fact.

His main works, collectively known as Ars Magna, described a sort of logic machine: one that, Llull claimed, could prove the existence of the Christian God to even the most stubborn heretic. Llull likely took inspiration from the zairja, another combinatorial device, which Muslim astrologers used to help generate new ideas. In the zairja, letters were distributed around a paper wheel like the hours on a clock. They could be recombined to answer questions through a series of mechanical operations.

Llull divided his machine’s paper wheels into fundamental religious concepts, including goodness, eternity, understanding, and love. Users would rotate a series of concentric paper discs mounted on threads to combine different divine attributes into logically true statements. It was hardly a turnkey system—potential converts would have to study for months to be able to consult it. And Llull’s obsessively detailed examples obfuscated, rather than revealed, how the machine worked. But his hope to derive truth through the reduction—and mechanical recombination—of knowledge into basic principles and terms prefigured contemporary computing by nearly 800 years.
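Turning discs until each divine attribute lines up against every other one amounts to enumerating combinations. The minimal sketch below uses only the four attributes named above, although Llull's figures carried a larger set. The sentence template is hypothetical and does not reproduce Llull's actual rules for deriving statements.

```python
from itertools import combinations

# Only the four attributes named in the text; Llull's figures used more.
ATTRIBUTES = ["goodness", "eternity", "understanding", "love"]

def llull_pairs(attributes):
    """Every unordered pair of attributes, as if two discs were turned
    until each concept had faced every other one once."""
    return list(combinations(attributes, 2))

def as_statement(pair):
    """Hypothetical template for turning a pairing into a sentence."""
    first, second = pair
    return f"{first.capitalize()} is joined with {second}."

for pair in llull_pairs(ATTRIBUTES):
    print(as_statement(pair))
```

Four attributes yield six pairings. A set of nine would already yield 36, which hints at why consulting the full system took months of study.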

Although Llull was certain his logic machine would demonstrate the truth of the Bible and gain new Christian converts, he was ultimately unsuccessful. One report has it that he was stoned to death while on a missionary trip to Tunisia.

Humans exist at an uneasy threshold. We have a dizzying ability to make meaning from the world, braid language into stories to construct understanding, and search for patterns that might reveal larger, steadier truths. Yet we also recognize that our mental efforts are often flawed, arbitrary, incomplete.

Woven throughout the centuries is a burning obsession with accessing truth beyond human fallibility—a utopian dream of automated certainty. Llull, and many thinkers since, hoped a sort of machine could operationalize logic through language to end disagreements—and perhaps even war—opening access to a single, indisputable truth. This has been the seduction of modern computers and artificial intelligence. If our limited human minds can’t alight—or agree—on pure, rational truth, perhaps we could invent an external one that can, one that would use language to calculate our way there.

For at least a millennium, humans have attempted to take these two marvelously malleable and uniquely human abilities—abstract thinking and descriptive language—and outsource them to something more rational.

In the 17th century, mathematician Gottfried Wilhelm Leibniz envisioned a mechanical, yet descriptive, logic machine. In Dissertatio de Arte Combinatoria, published in 1666 when he was 19, Leibniz proposed that all concepts could be described as combinations of simpler concepts, in the same way words are composed of letters.

Leibniz admired Llull’s Ars but found it lacking. Its basic concepts were too arbitrary: Why goodness, eternity, and love, for instance, and not others? To mechanically simulate logic, Leibniz suggested, we needed to discover the full alphabet of human thought: the basic set of concepts from which all others could be constructed. It would be a divine language that, as he wrote in a later letter, “perfectly represents the relationships between our thoughts.”
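In notes written around 1679, Leibniz experimented with one concrete encoding of such an alphabet. Each primitive concept receives a prime number. A compound concept is the product of its parts. “Every A is B” holds when B’s number divides A’s. The sketch below follows that idea. The concept list and primes are illustrative assumptions, though the pairing of animal = 2 and rational = 3, giving man = 6, echoes Leibniz’s own example.

```python
from math import prod

# Hypothetical "alphabet of thought": each primitive concept gets a prime.
PRIMES = {"animal": 2, "rational": 3, "winged": 5}

def characteristic(*primitives):
    """A compound concept is the product of its primitives' primes."""
    return prod(PRIMES[p] for p in primitives)

def every_is(subject, predicate):
    """'Every S is P' holds when P's number divides S's number,
    i.e. S contains every primitive that P contains."""
    return subject % predicate == 0

man = characteristic("animal", "rational")   # 2 * 3 = 6
animal = characteristic("animal")            # 2
bird = characteristic("animal", "winged")    # 2 * 5 = 10

print(every_is(man, animal))   # True: every man is an animal
print(every_is(animal, man))   # False: not every animal is a man
print(every_is(bird, man))     # False
```

Because prime factorizations are unique, a claim of this form can be settled by arithmetic alone, which is the “Let us calculate” promise in miniature.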

Leibniz hoped this would lead to the language in which God wrote the universe, one in which no untruth could be spoken. Leibniz believed his own “mother of all inventions” would usher in utopia, accelerate science, and perfect theology. He hoped to create a machine that could provide certainty in any realm, be it philosophy or politics, medicine or physics. Lines of reasoning in any field would become as “tangible as those of the Mathematicians,” he wrote. Instead of disagreeing, people would feed their questions into this logic machine, declaring: “Let us calculate, without further ado, to see who is right.”

Like René Descartes before him, Leibniz believed truth could be discovered through reason alone. Jonathan Swift, for one, ridiculed the notion. In his 18th-century satiric novel Gulliver’s Travels, Swift portrayed scholars at the Grand Academy of Lagado using an “engine” to write books. A spoof of Leibniz’s machine, the Lagado engine consisted of a frame of wires strung with dice-sized wooden blocks inscribed with words. Scholars would hand crank the wires to spin the blocks and create new combinations of words. Philosophers would then record these combinations into books “without the least assistance from genius or study.”

Undeterred by such public scoffing, scholars continued to try to build a truth-generating machine. English mathematician George Boole had a visionary insight at the age of 17 into the nature of the mind and how it “accumulates knowledge.” Decoding this vision became his life’s work. Like Llull and Leibniz, he grew obsessed with the idea of creating a system of language that could put disagreements to rest and calculate truth by mathematical certainty. This led Boole to his 1854 book, Laws of Thought, in which he pioneered a novel form of logic predicated on a new measure of truth: yes or no.

Previously, algebraic variables (like x) stood in for numbers. But what if these variables stood for something else—ideas, for example? What if we could perform algebra on ideas to calculate whether they are true or false and combine these to come to other logical conclusions?

Boole demonstrated that he could turn Aristotle’s logical propositions into mathematical equations. The proposition “all men are mortal” could be expressed as y = vx, where y stands for the class of men, x for the class of mortal beings, and v for an indefinite class: the men are some portion of the mortals. Though Boole was delighted to learn that Leibniz had tried to work out a similar language, he was disappointed that his own work had only captured the interest of mathematicians. According to his wife, Boole “meant to throw light on the nature of the human mind.” Instead, Boole’s logic found no immediate practical application and was relegated to the dusty corners of philosophy departments.
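Boole read a product such as xy as “the things that are both x and y,” which is why he could insist that xx = x. The minimal sketch below illustrates that reading, using Python sets to stand in for Boole’s classes over a tiny hypothetical universe. It checks by brute force that y = vx has a solution for v exactly when every man is mortal.

```python
from itertools import chain, combinations

# Hypothetical universe of beings and two classes drawn from it.
UNIVERSE = {"Socrates", "Hypatia", "a dog", "a stone"}
x = {"Socrates", "Hypatia", "a dog"}   # mortal beings
y = {"Socrates", "Hypatia"}            # men

def product(a, b):
    """Boole's multiplication of classes: things belonging to both."""
    return a & b

def all_subsets(s):
    """Every possible class v that could be drawn from the universe."""
    items = sorted(s)
    return (set(c) for c in chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)))

def satisfies_y_equals_vx(y, x, universe):
    """True if some class v makes v * x equal to y."""
    return any(product(v, x) == y for v in all_subsets(universe))

print(product(x, x) == x)                       # True: Boole's law xx = x
print(satisfies_y_equals_vx(y, x, UNIVERSE))    # True: all men are mortal

# Add an immortal "man": since v & x never leaves x, no v can work.
y_bad = y | {"a stone"}
print(satisfies_y_equals_vx(y_bad, x, UNIVERSE))  # False
```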

In the 1930s, in one of these philosophy departments, Claude Shannon, an undergraduate at the University of Michigan, was introduced to Boole’s ideas. Being a fan of puzzles, Shannon enjoyed symbolic logic and would go on to realize its full potential while a master’s student at MIT. There, he worked as a research assistant to Vannevar Bush, helping visiting scientists use Bush’s giant mechanical calculator to solve their research problems. After repeatedly adjusting the unwieldy machine, he realized he could simplify the calculator’s fussy spinning discs and control relays using Boolean logic.

In his world-changing master’s thesis, Shannon demonstrated how Boolean logic could optimize the routing of telephone switches. Using these ideas, engineers could design circuits to perform calculation and control.
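In the now-common convention, a closed switch is 1 (True). Two switches in series conduct only when both are closed (AND), and two in parallel conduct when either is (OR). Shannon’s thesis actually used the opposite “hindrance” convention, so this sketch shows the idea rather than his notation. It first checks by brute force that a two-branch circuit reduces to a single switch. It then wires a one-bit adder from the same parts to show a circuit performing calculation.

```python
from itertools import product

def series(a, b):
    """Two switches in series: current flows only if both are closed."""
    return a and b

def parallel(a, b):
    """Two switches in parallel: current flows if either is closed."""
    return a or b

def original_circuit(a, b):
    # Two parallel branches: a in series with b, and a in series with
    # a contact that is closed whenever b is open.
    return parallel(series(a, b), series(a, not b))

def simplified_circuit(a, b):
    # Boolean algebra: ab + a(not b) = a(b + not b) = a, so one switch suffices.
    return a

def equivalent(f, g, n_inputs):
    """Check that two circuits agree on every combination of switch states."""
    return all(f(*bits) == g(*bits)
               for bits in product([False, True], repeat=n_inputs))

def half_adder(a, b):
    """Add two one-bit numbers using only series and parallel connections."""
    total = parallel(series(a, not b), series(not a, b))  # exclusive or
    carry = series(a, b)
    return int(total), int(carry)

print(equivalent(original_circuit, simplified_circuit, 2))  # True
print(half_adder(True, True))  # (0, 1): 1 + 1 is binary 10
```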

Shannon’s insight would serve as the foundation for modern computing, giving rise to the stream of zeros and ones that now innervate the digital realm. Shannon’s work promised to finally transmute messy human thought into the organized language of logic, perhaps even in pursuit of truth.


In 1913, the Russian mathematician Andrey Markov sat down and tallied the vowels and consonants in the first 20,000 letters of Alexander Pushkin’s novel in verse Eugene Onegin. He also tallied letter pairs, and found that, if you randomly picked a vowel from the text, the most likely next letter would be a consonant, and vice versa. Letters weren’t peppered randomly throughout but obeyed underlying patterns.
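Markov’s bookkeeping is easy to mechanize. The sketch below reduces a text to a sequence of vowel (V) and consonant (C) labels, counts adjacent pairs, and estimates how likely each kind of letter is to follow the other. It assumes English text and treats only a, e, i, o, and u as vowels, whereas Markov worked with Russian letters. The sample sentence is a placeholder, not Pushkin.

```python
from collections import Counter

VOWELS = set("aeiou")  # assumption: English vowels only

def vowel_consonant_chain(text):
    """Label each letter V or C (skipping spaces and punctuation)
    and count how often each label follows each other label."""
    letters = [ch for ch in text.lower() if ch.isalpha()]
    labels = ["V" if ch in VOWELS else "C" for ch in letters]
    return Counter(zip(labels, labels[1:]))

def next_kind_probabilities(pairs):
    """Estimate P(next kind | current kind) from the pair counts."""
    probs = {}
    for current in ("V", "C"):
        total = pairs[(current, "V")] + pairs[(current, "C")]
        if total:
            probs[current] = {nxt: pairs[(current, nxt)] / total
                              for nxt in ("V", "C")}
    return probs

sample = "Letters were not peppered randomly throughout the text"  # placeholder
print(next_kind_probabilities(vowel_consonant_chain(sample)))
```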

Shannon expanded on this insight to create an early kind of language model. When he picked letters randomly from the alphabet, he generated a gibberish string: “XFOML RXKHRJFFJUJ ZLPWCFWKCYJ FFJEYVKCQSGHYD …” But when he chose each letter according to how often it follows the two letters before it in natural English, he generated something that looked a little more like English: “IN NO IST LAT WHEY CRATICT FROURE BIRS GROCID …” The more conditional information he included, the more “real” the output sounded. In this manner, Shannon laid the groundwork for models that mimic the higher-order statistics of natural languages.
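Shannon’s approximations can be reproduced with a frequency table. Record which letters follow each run of k letters in a sample, then generate text by repeatedly drawing a follower for the last k letters written. The minimal sketch below assumes a short placeholder sample instead of the large body of English Shannon drew on. With order 0 it draws single letters by overall frequency. With order 2 it conditions each letter on the two before it. The sample is treated as circular so generation never reaches a dead end.

```python
import random
from collections import defaultdict

def build_model(text, order):
    """Map each run of `order` characters to the list of characters that
    follow it. The text wraps around so every run has a follower."""
    wrapped = text + text[:order]
    followers = defaultdict(list)
    for i in range(len(text)):
        followers[wrapped[i:i + order]].append(wrapped[i + order])
    return followers

def generate(text, order, length, seed=0):
    """Generate `length` characters whose statistics mimic `text`
    up to runs of `order` + 1 characters."""
    rng = random.Random(seed)
    model = build_model(text, order)
    out = rng.choice(sorted(model))          # start from a context seen in the text
    while len(out) < length:
        context = out[len(out) - order:]     # last `order` chars ("" when order is 0)
        out += rng.choice(model[context])    # repeated followers are likelier picks
    return out[:length]

sample = "the theory of the thing that they thought through"  # placeholder text
print(generate(sample, 0, 40))
print(generate(sample, 2, 40))
```

Raising `order` makes the output look more like the sample, at the cost of copying longer stretches of it verbatim.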

By the 1960s, conversational software was already duping humans, even without statistical prediction. ELIZA, that decade’s best-known “chatterbot,” worked by matching keywords and rephrasing users’ own words through scripted templates, yet many users attributed a surprising degree of understanding to even its repetitive diction. Its creator, Joseph Weizenbaum, had intended ELIZA to be a parody of psychotherapists and was surprised that it captivated users.

Today’s large language models, pioneered by Google, were popularized by researchers at OpenAI who noticed that the larger they made these models, the better the models scored on performance benchmarks. Ask ChatGPT to summarize the history of French military victories or alternative theories to dark matter, and it will appear an eager and instantaneous research assistant, ready in no time with an authoritatively phrased answer.

Current language models are trained by turning language into a guessing game, not by pointing an engine toward larger truths. Words are hidden from training text lifted from the cacophonous internet (for chatbots like ChatGPT, typically the next word in a passage), and the programs learn to guess which words are likely to fill these gaps. In so doing, they learn the complex web of dependencies that drive language. Like Swift’s engine, they spin through possible word combinations without concern for the truthfulness of the final text. Because they’ve only learned to mimic the statistics of our messy language, they can’t represent the difference between fact and fiction.
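The guessing game can be shown in miniature with word counts. Real language models use neural networks that condition on long stretches of preceding text, not on a single word, so this is only a sketch of the training objective: predict the likeliest continuation. The corpus is a hypothetical toy in which a false sentence simply appears more often than a true one.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the false claim is just more frequent.
CORPUS = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]

def train_next_word(sentences):
    """Count which word follows each word in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def guess_next(counts, prev_word):
    """Return the most frequent continuation; truth plays no part."""
    if prev_word not in counts:
        return None
    return counts[prev_word].most_common(1)[0][0]

model = train_next_word(CORPUS)
print(guess_next(model, "of"))  # cheese
```

Nothing in the counting step distinguishes a fact from a popular falsehood; only frequency matters.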

It might be said that “ChatGPT is bullshit,” as the title of one recent paper, coauthored by philosopher of science Michael Townsen Hicks, asserts, citing the philosopher Harry Frankfurt’s definition of bullshit as “speech intended to persuade without regard for truth.” Instead of an externally calculated, purer truth, these machines are distilling and reflecting back to us the chaos of human beliefs and chatter.

The hope remains that we might scale these models indefinitely to reach the point of general intelligence. That hope rests more on extrapolation than on certainty: the models may already be approaching a point of diminishing returns, since they require immense amounts of data for minimal improvements in their performance.
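Scaling studies have commonly fit a model’s loss to a power law in the amount of training data. The sketch below assumes such a curve, with a hypothetical exponent and reference size chosen only for illustration. Under that curve, every tenfold increase in data shrinks the loss by the same factor, so each step buys a smaller absolute improvement than the last.

```python
# Hypothetical constants chosen for illustration; real fits vary.
ALPHA = 0.1          # assumed power-law exponent
REFERENCE = 1e9      # assumed dataset size (in tokens) at which loss equals 1

def loss(tokens):
    """Assumed power-law loss curve: L(D) = (REFERENCE / D) ** ALPHA."""
    return (REFERENCE / tokens) ** ALPHA

def gains_per_tenfold(first_exp, last_exp):
    """Drop in loss from each tenfold increase in data,
    from 10**first_exp up to 10**last_exp tokens."""
    losses = [loss(10 ** e) for e in range(first_exp, last_exp + 1)]
    return [before - after for before, after in zip(losses, losses[1:])]

for exp, gain in zip(range(10, 14), gains_per_tenfold(9, 13)):
    print(f"10^{exp} tokens: loss falls by {gain:.3f}")
```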

In fact, the LLMs of today are missing something even Llull and Leibniz believed was essential to their machines: reason. While language models can use logic to some extent, they make many of the same reasoning mistakes humans do, having been trained on our data. But unlike humans, language models struggle to self-correct toward a better answer without outside feedback. In fact, asking them to reflect on their own answers can make their performance worse, as researchers at Google DeepMind discovered.

In the end, truth machines haven’t progressed much from Llull’s Ars Magna. The 13th-century zealot hoped to automate truth to dispel people’s uncertainty—instead we’ve automated the uncertainty. Perhaps the elusive truth about the universe does lie in the baroque, feverish ramblings of Llull’s Ars Magna, if only somebody could decipher it. Just don’t ask ChatGPT.

This article originally appeared on Nautilus, a science and culture magazine for curious readers.