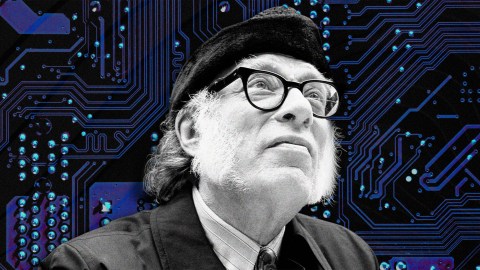

3 rules for robots from Isaac Asimov — and one crucial rule he missed

- Isaac Asimov’s stories explore the implications of a world in which robots match and exceed human talents.

- If humans have defined themselves by what they’re the best at — like intelligence and creativity — will robots become more human than humans?

- Asimov devised three laws for robots to follow. He missed a crucial fourth one.

Those who have known power all their lives will hate the idea of losing it. If you are born into privilege, with fortune on your side, you’ll rage against the idea that it might be taken from you. If you’ve been told that you’re the brightest, the strongest, and the best for as long as you can remember, it will hurt to find out that you’re decidedly below average. None of us likes to be humbled. No human wants to feel inferior.

We have all, collectively, grown up with the knowledge that we are the apex predator. Everyone instinctively believes that Homo sapiens, with our immense intelligence and remarkable talent for invention, have rightly and justly come to dominate the world. We are the kingpin and boss — the best thing evolution could ever create. Bow before our Mozarts and Einsteins!

This is why many people find AI and technology so terrifying. Suddenly, we're presented with the possibility that we're no longer the best and brightest. Seemingly overnight, in the 21st century, we have gone from the font of all wisdom and creativity to clumsy apes fumbling with antiquated tools. It's this idea that forms the basis of one of Isaac Asimov's best stories: "…That Thou Art Mindful of Him."

“…That Thou Art Mindful of Him”

There’s a well-known saying (dubiously attributed to Gandhi) which goes, “First they ignore you, then they laugh at you, then they fight you, then you win.” It’s been said about revolutionary ideas and movements, and it’s something that’s also true for how we view artificial intelligence.

We’ve had computers for a very long time. Most people reading this will have grown up with them. They’ve been the omnipresent background music to our daily lives for half a century. We work at laptops and we carry smartphones; we use calculators, spreadsheets, and internet banking; we talk to voice assistants and let an app tell us the quickest route home. All of these are acceptable. They are helpful and efficient, in a way that is entirely unthreatening.

Sure, I can't do sums like my calculator can, but my calculator can't hold a conversation. Yes, I can't tell you if there's traffic on the highway, but my GPS can't make a work of art. Technological inventions are okay so long as they do not pose any existential threat to our lives. They're fine as gimmicks or entertainment, or if they demonstrate isolated, narrow intelligence, because life can go on, mostly, as normal. We can ignore them or laugh at how Siri doesn't understand our accents.

We are on the cusp, though, of a huge change. Almost overnight, AI has erupted with a great, upheaving burst. It's making art and books, music and movies. It's holding conversations and recognizing faces. AI is, in short, doing all those things that we think we're the best at. In some cases, it's actually doing them better. Within the space of a few months, humans have gone from the center of the universe to something that looks a lot like a beta test.

It's this idea that Asimov explores in his short story, "…That Thou Art Mindful of Him." In this world, humans are terrified and suspicious of all robots and artificial intelligence. They lobby governments and corporations never to produce anything remotely powerful or life-altering. And so, one of these corporations pushes back. It gets two of its most spectacular AIs to generate an ingenious plan to get humans to trust them: Introduce robots that are no threat at all, but which are useful. Bring AI to the human world in a way that is indispensable, yet seemingly harmless. Get people hooked on the machines. Over time, more sophisticated robots and more generalized intelligences could be introduced, all with the proviso that they are under total human command. It's not so much being second that humans worry about, but rather not being in control. It's not inferiority that matters, but powerlessness.

Asimov’s Three Laws of Robotics

Asimov’s books all tend to revolve around his “Three Laws of Robotics.” The laws, essentially, are mechanisms to keep humans in control of things. They say that robots must always obey and never harm a human being. In full, they are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Asimov's stories work brilliantly as science fiction, but they also show us how difficult (impossible, even) it is to devise problem-free laws for controlling any intelligence. There will always be a loophole or exception. All laws need leeway and flexibility; otherwise they'll break (or break those they're trying to protect). As a recent paper in the journal Science Robotics puts it, Asimov's stories "warn of the improbability of devising a small set of simple rules to adequately design or regulate complex machines, humans, and their interactions." Perhaps this is something the "pause AI" people should consider.

In "…That Thou Art Mindful of Him," the twist of the story is particularly prescient. The robots interpret "human" as meaning a being that possesses "apex intelligence." Humans have defined themselves as separate from other species by things like creativity and high intelligence. If an AI is better than humans in those respects, does it have a greater claim to being "human"? In such a world, where humans are relegated to inferiority, does "human" simply mean having a Homo sapiens genome? If or when a general artificial intelligence arrives, that's more than just a threat to our way of life. That's a threat to how we even understand humanity.

The missing Fourth Law

As we move into the world of AI that does human things better than humans, most of us will still want to know what is and what is not made by a human. It matters to me that the novel I’m reading is written by a human. I suspect it matters to you if a poem, song, or piece of art was made by a robot. Perhaps Asimov missed an essential Fourth Law: A robot must identify itself. We have the right to know if we’re interacting with a human or AI.

Jonny Thomson teaches philosophy in Oxford. He runs a popular account called Mini Philosophy and his first book is Mini Philosophy: A Small Book of Big Ideas.