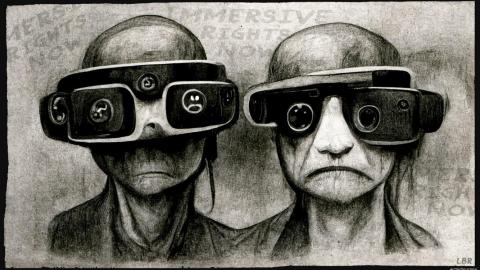

The case for demanding “immersive rights” in the metaverse

- The metaverse is set to transform how we buy goods and services and interact with each other.

- Although many of these changes could be positive, AR and VR technologies could also enable advertisers and propagandists to manipulate us in subtle yet effective ways.

- Policymakers and tech companies should begin thinking about how to guarantee basic “immersive rights” in the metaverse.

Ready or not, the metaverse is coming, bringing a vision of the future in which we spend a significant portion of our lives in virtual and augmented worlds. While it sounds like science fiction to some, a recent study by McKinsey found that many consumers expect to spend more than four hours a day in the metaverse within the next five years.

Personally, I believe this is a dangerous inflection point in human history. The metaverse could be a creative medium that expands what it means to be human. Or it could become a deeply oppressive technology that gives corporations (and state actors) unprecedented control over society. I don’t make that statement lightly. I’ve been a champion of virtual and augmented worlds for over 30 years, starting as a researcher at Stanford, NASA, and the US Air Force, and then founding a number of VR and AR companies. Having lived through multiple hype cycles, each followed by a cold winter, I believe we’re finally here: the metaverse will soon have a major impact on society. Unfortunately, the lack of regulatory protections has me deeply concerned.

But first, what is the metaverse?

Carving away the many tangential technologies that distract from the core concept, we can boil the essence down to a clean and simple definition:

“The metaverse” represents the broad societal shift in how we engage with digital content, going from flat media viewed in the third person to immersive experiences engaged in the first person.

The metaverse will have two primary flavors: virtual and augmented, which will overlap but evolve at different rates, involve different corporate players, and likely adopt different business models. The “virtual metaverse” will evolve out of gaming and social media, bringing us purely virtual worlds for entertainment, socializing, shopping, and other limited-duration activities. The “augmented metaverse” will evolve out of the mobile phone industry and will embellish the real world with immersive virtual overlays that bring artistic and informational content into our daily experiences at home, work, and public places.

Significant danger lies ahead

Whether virtual or augmented, this shift from flat media to immersive experiences will give metaverse platforms unprecedented power to track, profile, and influence users at levels far beyond anything we’ve experienced to date. In fact, as I discussed with POLITICO this week, an unregulated metaverse could become the most dangerous tool of persuasion that humanity has ever created.

That’s because metaverse platforms will track not just where users click but also where they go, what they do, who they’re with, and what they look at. Platforms will also track posture and gait, assessing when users slow down to browse or speed up to pass locations they’re not interested in. Platforms will even know which products you pick up off the shelves in stores (virtual or augmented), how long you study the packaging, and how your pupils dilate to signal your level of engagement.

In the augmented metaverse, the ability to track gaze, gait, and posture raises unique concerns because the hardware will be worn throughout daily life. While users walk down real streets, platforms will know which store windows they peer into, for how long, and even which portions of each display attract their attention. Gait tracking can also be used to identify medical and psychological conditions, from detecting depression to predicting dementia.

Metaverse platforms will also track facial expressions, vocal inflections, and vital signs, while AI algorithms assess user emotions. Platform providers will use this to make avatars look more natural, with authentic facial reactions. While such features are valuable and humanizing, without regulation the same data could be used to create emotional profiles that document how individuals react to a range of situations and stimuli throughout their daily life.

Invasive monitoring is an obvious privacy concern, but the dangers expand when we consider that behavioral and emotional profiles can be used for targeted persuasion. That’s because advertising in the metaverse will transition from flat media to immersive experiences. This will likely include two distinctive forms of metaverse marketing, Virtual Product Placements (VPPs) and Virtual Spokespeople (VSPs), defined as follows:

Virtual Product Placements (VPPs) are simulated products, services, or activities injected into an immersive world (virtual or augmented) on behalf of a paying sponsor such that they appear to the user as natural elements of the ambient environment.

Virtual Spokespeople (VSPs) are simulated persons or other characters injected into immersive environments (virtual or augmented) that verbally convey promotional content on behalf of a paying sponsor, often engaging users in AI-moderated promotional conversation.

To appreciate the impact of these marketing methods, consider Virtual Product Placements being deployed in a virtual or augmented city. While walking a busy street, a consumer who is profiled as a casual athlete of a particular age might see someone walking past them drinking a specific brand of sports drink or wearing a specific brand of workout clothing. Because this is a targeted VPP, other people around them would not see the same content. A teen might see people wearing trendy clothes or eating a certain fast food, while a young child might see an oversized action figure waving at them as they pass.

While product placements are passive, Virtual Spokespeople can be active, engaging users in promotional conversation on behalf of paying sponsors. Such capabilities seemed out of reach just a few years ago, but recent breakthroughs in large language models (LLMs) make them viable in the near term. The verbal exchange could be so authentic that consumers might not realize they are speaking to an AI-driven conversational agent with a predefined persuasive agenda. This opens the door to a wide range of predatory practices that go beyond traditional advertising toward outright manipulation.

Tracking and profiling of users is not a new problem: social media platforms and other tech services already do it. In the metaverse, however, the scale and intimacy of user monitoring will expand significantly. Similarly, predatory advertising and propaganda are not new problems. But in the metaverse, users could find it difficult to distinguish between authentic experiences and targeted promotional content injected on behalf of paying sponsors. This would enable metaverse platforms to manipulate user experiences on behalf of those sponsors without users’ knowledge or consent.

The case for immersive rights

The shift from traditional advertising to promotionally altered experiences raises new concerns for consumers, making it important for policymakers to consider regulations that guarantee basic immersive rights. While many protections are needed, I propose three critical categories.

1. The right to experiential authenticity

Advertising is pervasive in the physical and digital world, from product marketing to political messaging, but most adults can easily identify promotional content. This allows individuals to view ads in the proper context: as paid messaging delivered by a party attempting to influence them. Having this context enables consumers to bring healthy skepticism and critical thinking when considering products, services, political ideas, and other messages they are exposed to.

In the metaverse, advertisers could subvert our ability to contextualize promotional content by blurring the boundaries between authentic experiences that are serendipitously encountered and targeted promotional experiences injected on behalf of paying sponsors. This could easily cross the line from marketing to deception and become a predatory practice.

Imagine walking down the street in a realistic virtual or augmented world. You notice a parked car you’ve never seen before. As you pass, you overhear the owner telling a friend how much they love the car. You keep walking, subconsciously influenced by what you believed was an authentic experience. What you don’t realize is that the encounter was promotional, placed there so you’d see the car and hear the exchange. It was also targeted — only you saw the exchange, which was chosen based on your profile and customized for maximum impact, from the color of the car to the gender, voice, and clothing of the Virtual Spokespeople used.

While this type of covert advertising might seem benign, merely influencing opinions about a new car, the same tools and techniques could be used to promotionally alter experiences that drive political propaganda, misinformation, and outright lies. To protect consumers, immersive tactics such as Virtual Product Placements and Virtual Spokespeople should be regulated.

At the very least, regulations should protect the basic right to authentic experiences. This could be achieved by requiring that promotional artifacts and promotional people be overtly distinct, both visually and audibly, so that users can perceive them in the proper context. This would protect consumers from mistaking promotionally altered experiences for authentic encounters.

2. The right to emotional privacy

We humans have evolved the ability to express emotions through our faces, voices, posture, and gestures. We have also evolved the ability to read these traits in others. This is a basic form of human communication that works in parallel with verbal language. In recent years, sensing technologies combined with machine learning have enabled software systems to identify human emotions in real time from faces, voices, posture, and gesture, as well as from vital signs such as respiration rate, heart rate, eye motions, pupil dilation, and blood pressure. Even facial blood flow patterns detected by cameras can be used to interpret emotions.

While many view this as enabling computers to engage in nonverbal language with humans, it can easily cross over into intrusive and exploitative violations of privacy. There are two reasons for this: sensitivity and profiling. In terms of sensitivity, computer systems are already able to detect emotions from cues that are not perceptible to humans. For example, a human observer cannot easily detect heart rate, respiration rate, and blood pressure, which means those signals may be revealing emotions that the observed individual had not intended to convey. Computers can also detect “micro-expressions” on faces that are too brief or too subtle for human observers to notice, but which again can reveal emotions that the observed individual had not intended to convey.

At a minimum, consumers should have the right not to be emotionally assessed at speeds, or through trait detection, that exceed natural human abilities. This means not allowing vital signs and micro-expressions to be used in emotion detection. In addition, the danger to consumers is amplified by the ability of platforms to collect emotional data over time and create profiles that could allow AI systems to predict the reactions of consumers to a wide range of stimuli. Emotional profiling should either be banned or require explicit consent on an application-by-application basis. This is especially important in the augmented metaverse, where consumers will spend a large portion of their daily life being tracked and analyzed by wearable devices.

Even with informed consent, regulators should consider an outright ban on emotional analysis being used for promotional purposes. As mentioned above, consumers are likely to be targeted by AI-driven Virtual Spokespeople that engage them in promotional conversation. These agenda-driven conversational agents will likely have access to real-time facial expressions, vocal inflections, and vital signs. Without regulation, these conversational agents could be designed to adjust their promotional tactics mid-conversation based on the detected emotions of target users, including subtle emotional cues that no human representative could detect.

For example, an AI-driven conversational agent with a predefined promotional agenda could adapt its tactics based on the detected blood pressure, heart rate, respiration rate, and facial blood flow patterns of the target consumer. This, combined with user profile data that reflects the target’s background, interests, and inclinations, could make for an extremely persuasive medium that exceeds any prior form of advertising. It could be so persuasive, in fact, that it crosses the line from passive advertising to active manipulation.

3. The right to behavioral privacy

Consumers are generally aware that big tech companies track and profile their behaviors based on the websites they visit, the ads they click, and the relationships they maintain through social media. In an unregulated metaverse, large platforms will be able to track not just where users click, but where they go, what they do, who they’re with, how they move, and what they look at. In addition, emotional reactions can be assessed and correlated with all of these actions, tracking not just what users do but how they feel while doing it.

In both virtual and augmented worlds, extensive behavioral data is necessary for platforms to provide immersive experiences in real time. That said, the data is only needed at the moment these experiences are simulated. There is no inherent need to store this data over time. This is important because stored behavioral data can be used to create invasive profiles that document the daily actions of individual users with extreme granularity.

Such data could easily be processed by machine-learning systems to predict how individual users will act, react, and interact in a wide range of circumstances during their daily life. In an unregulated metaverse, it could become common practice for platforms to accurately predict what users will do before they have even decided. And because platforms will have the ability to alter environments for persuasive purposes, such profiles could be used to preemptively and precisely manipulate behaviors.

For these reasons, regulators and policymakers should consider banning metaverse platforms from storing behavioral data over time, thereby preventing platforms from generating detailed profiles of their users. In addition, metaverse platforms should not be allowed to correlate emotional data with behavioral data, for that correlation would allow them to impart promotionally altered experiences that don’t just manipulate what users do in immersive worlds, but also predictively influence how they’re likely to feel while doing it.

Immersive rights are necessary and urgent

The metaverse will have a major impact on our society. While many effects will be positive, we must protect consumers against the potential dangers. The most insidious dangers are likely those that involve promotionally altered experiences, as such tactics could give metaverse platforms predatory powers to influence their users.

Three decades ago as a young researcher, I studied the potential of augmented reality for the first time, running experiments to show the value of “embellishing the user’s perceptual reality.” Back then, I firmly believed AR would be a force for good, enhancing everything from surgical procedures to educational experiences. I still believe in the potential. But the ability to embellish reality through AR or alter reality through VR is a profound power that can also be used to manipulate populations.

To address these risks, policymakers must consider restrictions on metaverse platforms that guarantee basic rights in immersive worlds. At a minimum, every individual should have the right to go about their daily life without being emotionally or behaviorally profiled. Every individual should also have the right to trust the authenticity of their experiences without worrying that third parties are covertly injecting targeted promotional content into their surroundings. These rights are necessary and urgent; without them, the metaverse will not be a trusted place for anyone.