General AI: DeepMind’s virtual playground suggests a path forward

- General artificial intelligence doesn’t yet exist because no one has figured out how to teach a machine to succeed at tasks it wasn’t specifically trained on.

- Google sister company DeepMind has now highlighted a potential path to general AI.

- By the end of the study, the AIs were able to complete a range of tasks and could rapidly master games that completely stumped new AIs trained from scratch.

This article was originally published on our sister site, Freethink.

DeepMind has created a virtual playground that shows a path to creating general AI — the holy grail of artificial intelligence.

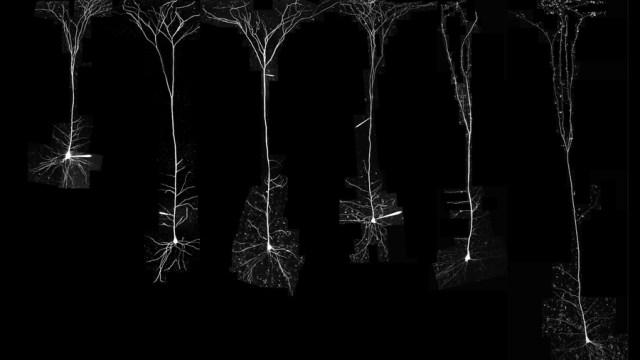

Reinforcement learning: If you want to train an AI to play chess, you can set up a virtual chessboard, list the rules, and let the AI learn the game through trial and error.

When it does something “right,” such as capturing a pawn, you give it a reward. When it does something majorly right, like winning the game, you give it a bigger reward.

Eventually, the AI will learn what it needs to do to get the most rewards, and boom, you have an AI that can beat any human at chess.
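The reward loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: chess is far too large for a toy example, so the “game” here is a hypothetical six-cell corridor in which landing on one cell earns a small reward (the stand-in for capturing a pawn) and reaching the end earns a big one (the stand-in for winning). The learning rule is standard tabular Q-learning, one common form of reinforcement learning; it is not the method any particular chess AI uses, and every name and number (`step`, `train`, the reward values) is made up for illustration.

```python
import random

# Hypothetical toy "game" (not chess): a corridor of cells 0..5.
# The agent starts in cell 0 and "wins" by reaching cell 5.
N_CELLS = 6
GOAL = N_CELLS - 1
PAWN_CELL = 2          # landing here is a small "good move", like capturing a pawn
ACTIONS = (-1, +1)     # step left or step right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), GOAL)
    if nxt == GOAL:
        return nxt, 10.0, True     # big reward for winning the game
    if nxt == PAWN_CELL:
        return nxt, 1.0, False     # small reward for a "right" move
    return nxt, 0.0, False


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn how good each move is purely from trial, error and reward."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Explore a random move with probability epsilon, otherwise play the best-known move.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Nudge the estimate toward the reward plus the discounted value of what comes next.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state, steps = nxt, steps + 1
    return q


def greedy_policy(q):
    """The move the trained agent prefers in each non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


print(greedy_policy(train()))  # prints {0: 1, 1: 1, 2: 1, 3: 1, 4: 1}
```

Nobody tells the agent to walk right; it discovers from rewards alone that heading for the goal pays best in every cell. The same sketch also shows the limitation discussed next: the learned table only describes this one corridor, and a different game would mean training again from zero.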


The challenge: This process is called reinforcement learning, and it’s one of the most effective ways to train AIs. However, it has a major limitation: at the end of the training, the AI only knows how to do one specific thing.

Even teaching an AI that has mastered one thing (chess) to do something similar (such as shogi, aka Japanese chess) requires starting the reinforcement learning process from scratch.

General AI: It would be useful to have a general AI that could use its smarts to solve all sorts of problems, including ones it has never seen before, just like humans do.

General AI doesn’t currently exist, though, because no one has figured out how to teach a machine to succeed at tasks it wasn’t specifically trained on.

In theory, we could just train an agent on everything, one task at a time, but that would require so much training data and time that it’s simply not feasible.

Welcome to XLand: Google sister company DeepMind has now highlighted a potential path to general AI.

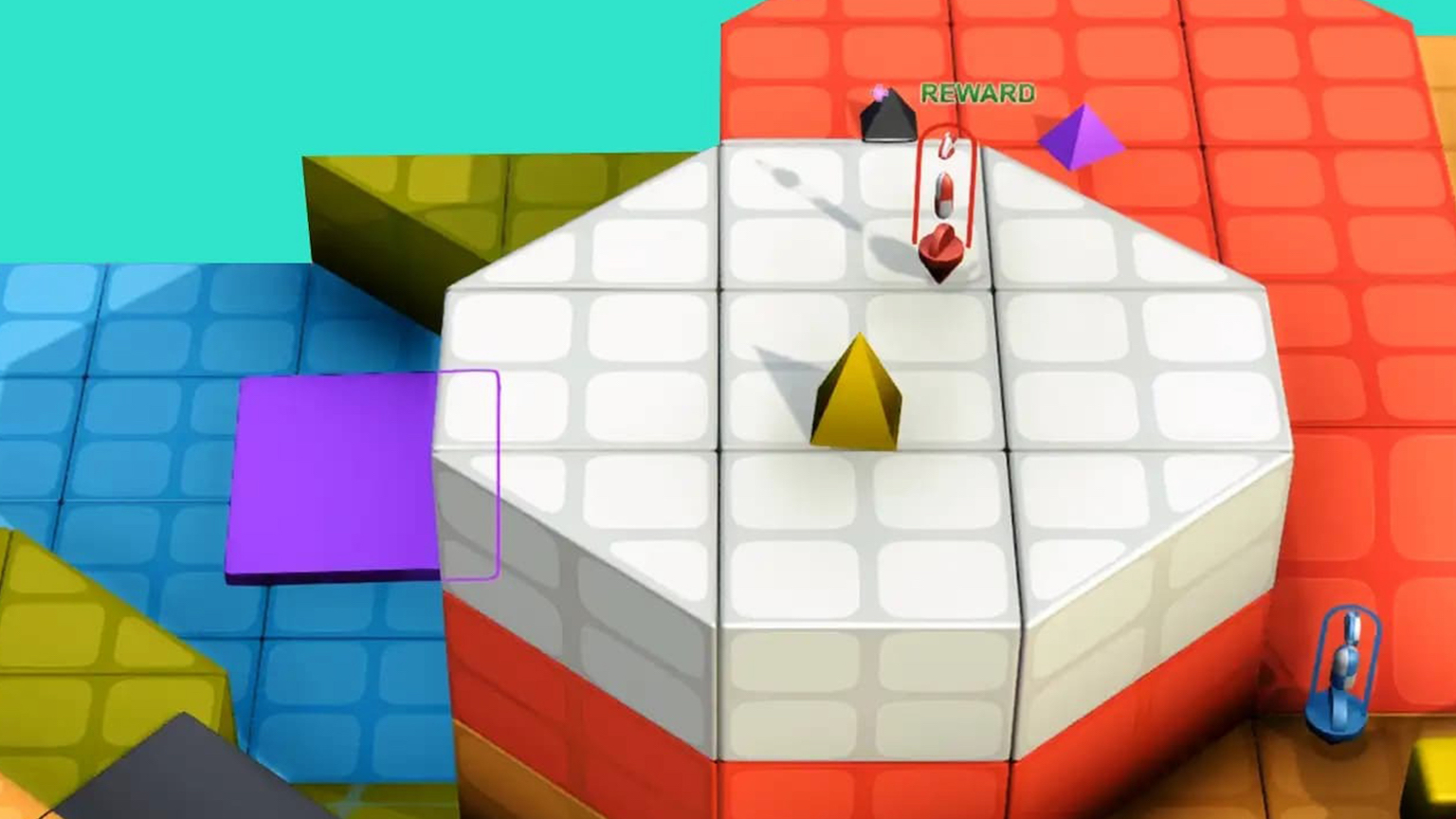

It designed a virtual world called “XLand,” where AI agents could navigate environments that look a bit like Battle Courses from Mario Kart. It then built an algorithm that could create billions of different game-like tasks for the AIs to complete in XLand.

The agents were rewarded for correctly completing tasks, just like they would in a standard reinforcement learning environment, and each new task was designed to be just hard enough to keep the agent learning something new.
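The “just hard enough” idea can be illustrated with a small filter. The sketch below is hypothetical and is not DeepMind’s XLand task generator: each task is reduced to a single made-up difficulty number, the agent to a single skill number, and an assumed logistic formula stands in for how often the agent succeeds. Candidate tasks are generated at random, the agent attempts each one a few times, and only tasks it solves between 10% and 90% of the time are kept; those thresholds are illustrative assumptions too.

```python
import math
import random

# Illustrative sketch only, NOT DeepMind's XLand algorithm: each "task" is a
# hypothetical scalar difficulty and the agent is summarized by a hypothetical scalar skill.


def success_probability(skill, difficulty):
    """Assumed model of how often an agent of this skill completes a task of this difficulty."""
    return 1.0 / (1.0 + math.exp(difficulty - skill))


def is_just_hard_enough(success_rate, low=0.1, high=0.9):
    """Reject tasks the agent almost always solves (too easy) or almost never solves (too hard)."""
    return low <= success_rate <= high


def next_tasks(skill, n, rng, trials=20):
    """Generate random candidate tasks and keep n that pass the difficulty filter."""
    kept = []
    while len(kept) < n:
        difficulty = rng.uniform(0.0, 20.0)  # hypothetical random task generator
        # Estimate the agent's success rate by letting it attempt the task a few times.
        wins = sum(rng.random() < success_probability(skill, difficulty) for _ in range(trials))
        if is_just_hard_enough(wins / trials):
            kept.append(difficulty)
    return kept


def curriculum(generations=5, skill=2.0, skill_gain=2.0, seed=0):
    """Return (skill, mean accepted difficulty) for each generation as the agent improves."""
    rng = random.Random(seed)
    history = []
    for _ in range(generations):
        tasks = next_tasks(skill, n=10, rng=rng)
        history.append((skill, sum(tasks) / len(tasks)))
        skill += skill_gain  # hypothetical stand-in for "training on these tasks made the agent better"
    return history


for skill, mean_difficulty in curriculum():
    print(f"skill={skill:.1f}  mean accepted task difficulty={mean_difficulty:.1f}")
```

As the simulated skill rises each generation, the accepted tasks drift toward harder difficulties. That is the property the approach relies on: the stream of tasks keeps pace with the learner instead of staying fixed, so there is always something new to learn.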

The results: By the end of the study, the AIs were able to complete a range of tasks and could rapidly master games that completely stumped new AIs trained from scratch.

“We find the agent exhibits general, heuristic behaviours such as experimentation, behaviours that are widely applicable to many tasks rather than specialised to an individual task,” DeepMind wrote in a blog post.

“This new approach marks an important step toward creating more general agents with the flexibility to adapt rapidly within constantly changing environments,” it continued.

The next steps: To be clear, DeepMind’s agents aren’t general AI, but they are more well-rounded problem-solvers than AIs trained using traditional, narrow reinforcement learning.

That suggests the algorithm-as-taskmaster approach detailed in the researchers’ paper, which has not yet been peer-reviewed, could be one way to create the more capable AIs of the future.