Are the posthumans here yet?

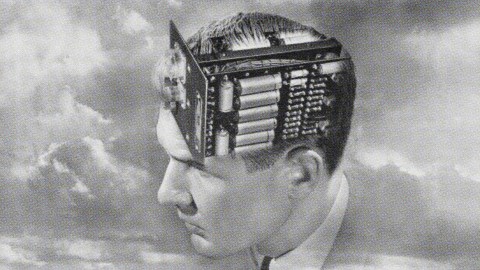

A recent survey found that two-thirds of workers believe that by 2035, employees willing to have performance-enhancing microchips implanted in their bodies will have an edge in the labor market. Technologically enhanced humans have a rich history in science fiction, but there are many questions about what real-life cyborgs would look like, and whether they already exist.

In 2017, Kevin Warwick, a robotics researcher and posthumanism enthusiast, examined technologies for human enhancement. He defines posthumanism as the permanent or semi-permanent implantation of machine components into a human body to enhance its abilities beyond the human norm.

Warwick himself was the first person to have a radio frequency identification (RFID) device implanted, back in 1998. The device, a collection of microchips and an antenna that both powers it and emits signals, allowed him to control lights and open doors. Since then, nightclubs have used similar implants to grant access to guests, and the Mexican government has also used them for security purposes. They’ve been used for a long time in pets and for animal research, too.

Warwick envisions a future where people could use chips as keys, credit cards, or passports. While he does not specifically address microchips in the workplace, he notes that people don’t want to feel anyone is forcing this kind of technology on them. He suggests that people might accept it voluntarily if it seems like a convenience.

“Such might be the case if, for example, the implant enables the user to bypass queues at passport control because extra information could be directed to the authorities as the individual merely marches through,” he writes.

Beyond microchips, Warwick looks at technologies intended to extend humans’ perception, such as magnets implanted under the skin to allow people to “feel” information gathered by external sensors. The most promising, and potentially most disturbing, type of technology he looks at is an array of microelectrodes attached to users’ brains. Here again, Warwick has tried out the technology himself, successfully receiving information from ultrasonic sensors and controlling external objects using neural signals. For example, while in England, he was able to control a robotic hand in New York and receive feedback from the robotic fingertips sent as neural stimulation. Elon Musk’s Neuralink company recently demonstrated a technology that can supposedly do something similar while conveying more data with less-invasive hardware.

Ultimately, both Warwick and Musk envision a vast transformation in human capabilities through seamless connections between high-powered computers and human brains. That seems to be a long way from reality. But whether we’re talking about a leap forward for the species or just chips that unlock doors, one big question we may face is how much employers can ask workers to transform themselves for their jobs. That question is inescapably connected with power relationships. In the same survey in which workers assessed the advantages of microchipped employees, 57 percent said they’d be willing to get chipped themselves, as long as they felt it was safe. Only 31 percent of business leaders said the same.

This article appeared on JSTOR Daily, where news meets its scholarly match.