Elon Musk wants testers for Tesla’s long-awaited ‘full self-driving’ A.I. chip

Photo by VCG / VCG via Getty Images

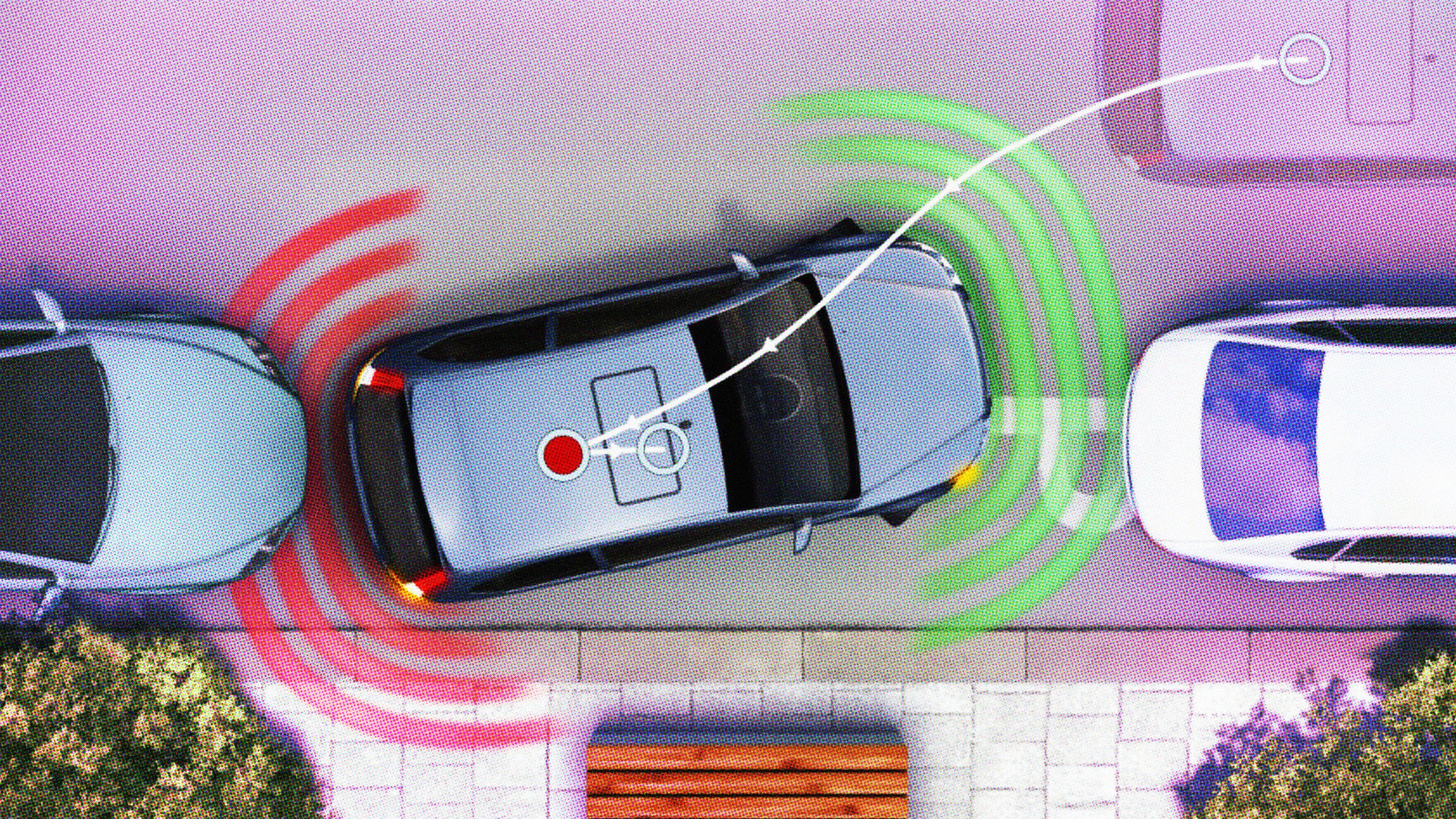

- Elon Musk is looking for a few hundred more people to test and provide feedback on Tesla’s long-awaited Hardware 3 update, according to an internal company message.

- Hardware 3, first announced in August, will likely expand the autonomous abilities of Tesla cars.

- It’s still unclear just what those expanded capabilities will be, however.

Tesla CEO Elon Musk wants “a few hundred” more people to test the company’s new Autopilot Hardware 3, the long-awaited neural net technology that the company has said will give cars “Full Self-Driving Capability” for $8,000 on top of the price of the car.

Details about Hardware 3 have been hard to pin down. Musk once promised that Tesla cars would be able to complete a coast-to-coast autonomous drive by the end of 2017. That never happened, though the cars do offer limited self-driving capabilities, including:

- Autosteer, which detects painted lane lines and cars to keep you in your lane automatically

- Auto-lane change

- Summon, an option that starts the car and brings it to you

- The ability to take on- and off-ramps, pass slow cars and park automatically

In September, Tesla announced that it planned to use a team of internal testers to test an early version of Hardware 3. One month later, the company removed an option from its website that let customers pre-order “Full Self-Driving Capability.” Musk said the controversial option was “causing too much confusion.”

Now, an internal Tesla message, ostensibly from Musk, shows that the company is offering to swap out Hardware 2 for Hardware 3 in the cars of anyone, customers or employees, who chooses to participate in the testing program. It’s the “last time the offer will be made,” the message reads.

Tesla CEO Elon Musk unveils a new vehicle. Photo credit: Kevork Djansezian / Getty Images

Will the update really make Teslas “full self-driving”?

It’s not entirely clear. The website 1reddrop, which covers Tesla news and technology, wrote that Tesla is focusing on improving GPS technology, and that increased computing power in Hardware 3 will translate to lower latency and quicker reaction times. It could also enable the cars to keep better live maps of roads:

> Every Tesla with Autopilot engaged sends a ton of information back to the company’s servers, and this information can be used to maintain live maps that are constantly being updated.
>
> The biggest problem for Tesla right now is reliability. Autopilot hardware 2 and 2.5 are now stretched to their physical limits, but Autopilot Hardware 3 will be far more powerful in that it can process a lot of the information on-board.

Whether this means Teslas will be fully self-driving remains an open question. It also depends on how you define the term.

The Society of Automotive Engineers’ 6 levels of driving automation

The Society of Automotive Engineers defines six levels of driving automation, numbered 0 through 5 (descriptions listed below). Right now, Tesla cars arguably sit between levels two and three.

- Level 0: Automated system issues warnings and may momentarily intervene but has no sustained vehicle control.

- Level 1 (“hands on”): The driver and the automated system share control of the vehicle. Examples are Adaptive Cruise Control (ACC), where the driver controls steering and the automated system controls speed; and Parking Assistance, where steering is automated while speed is under manual control. The driver must be ready to retake full control at any time. Lane Keeping Assistance (LKA) Type II is a further example of level 1 self-driving.

- Level 2 (“hands off”): The automated system takes full control of the vehicle (accelerating, braking, and steering). The driver must monitor the driving and be prepared to intervene immediately at any time if the automated system fails to respond properly. The shorthand “hands off” is not meant to be taken literally. In fact, contact between hand and wheel is often mandatory during SAE 2 driving, to confirm that the driver is ready to intervene.

- Level 3 (“eyes off”): The driver can safely turn their attention away from the driving tasks, e.g. the driver can text or watch a movie. The vehicle will handle situations that call for an immediate response, like emergency braking. The driver must still be prepared to intervene within some limited time, specified by the manufacturer, when called upon by the vehicle to do so. As an example, the 2018 Audi A8 Luxury Sedan was the first commercial car to claim to be capable of level 3 self-driving. This particular car has a so-called Traffic Jam Pilot. When activated by the human driver, the car takes full control of all aspects of driving in slow-moving traffic at up to 60 kilometres per hour (37 mph). The function works only on highways with a physical barrier separating one stream of traffic from oncoming traffic.

- Level 4 (“mind off”): As level 3, but no driver attention is ever required for safety, e.g. the driver may safely go to sleep or leave the driver’s seat. Self-driving is supported only in limited spatial areas (geofenced) or under special circumstances, like traffic jams. Outside of these areas or circumstances, the vehicle must be able to safely abort the trip, e.g. park the car, if the driver does not retake control.

- Level 5 (“steering wheel optional”): No human intervention is required at all. An example would be a robotic taxi.
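For readers who think in code, the levels above can be sketched as a small Python enum. This is only an illustration of the list as summarized here, not anything published by the SAE or Tesla: the names `SAELevel`, `driver_must_monitor` and `driver_is_fallback` are hypothetical, and the cutoffs assume the descriptions above (constant monitoring through level 2, a human fallback through level 3).

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six levels as summarized in the list above (names are illustrative)."""
    NO_AUTOMATION = 0            # warnings only, no sustained control
    HANDS_ON = 1                 # driver and system share control
    HANDS_OFF = 2                # system steers and controls speed, driver monitors
    EYES_OFF = 3                 # driver may look away but must respond when asked
    MIND_OFF = 4                 # no driver attention needed within limited areas
    STEERING_WHEEL_OPTIONAL = 5  # no human intervention required at all


def driver_must_monitor(level: SAELevel) -> bool:
    """Assumption from the list above: constant monitoring applies through level 2."""
    return level <= SAELevel.HANDS_OFF


def driver_is_fallback(level: SAELevel) -> bool:
    """Assumption from the list above: a human must be able to take over through level 3."""
    return level <= SAELevel.EYES_OFF


if __name__ == "__main__":
    # Print one row per level: number, name, monitoring flag, fallback flag.
    for level in SAELevel:
        print(level.value, level.name,
              driver_must_monitor(level), driver_is_fallback(level))
```

Under these assumptions, the jump from level 2 to level 3 is where the driver stops having to watch the road, and the jump from level 3 to level 4 is where the driver stops being the backup, which is why claims about "Level 4 territory" matter.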

1reddrop wrote that the new update could “decisively bring Tesla into Level 4 territory. If they succeed within the projected timeline, Tesla could become the world’s first automaker with a fully autonomous (by SAE standards) fleet on the road in 2019.”