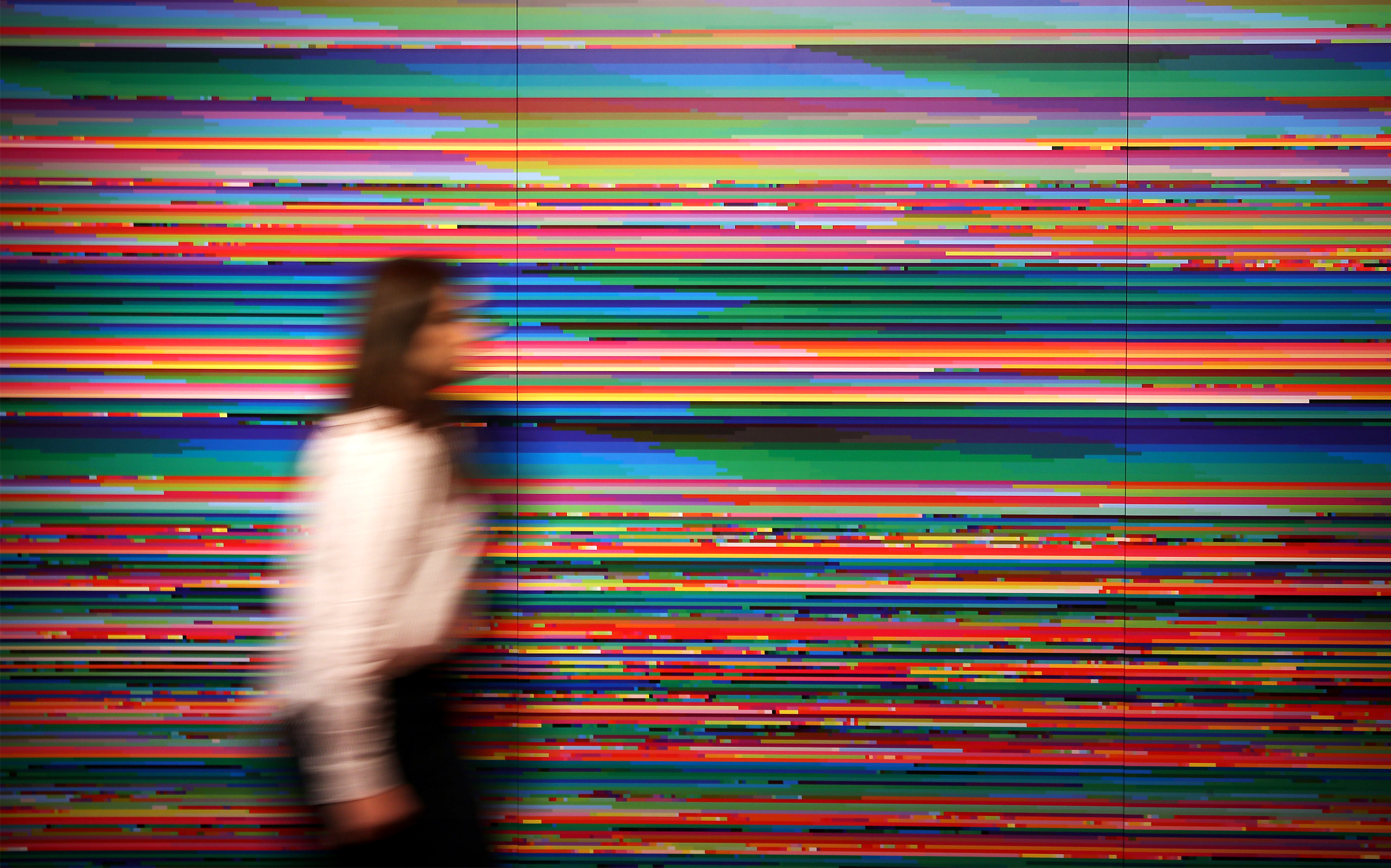

information

Think of a combination of immersive virtual reality, an online role-playing game, and the internet.

Information may not seem like something physical, yet it has become a central concern for physicists. A wonderful new book explores the importance of the “dataome” for the physical, biological, and human worlds.

New research reveals the extent to which groupthink bias is increasingly being built into the content we consume.

Is information the fifth form of matter?

The truth is a messy business, but an information revolution is coming. Danny Hillis and Peter Hopkins discuss knowledge, fake news and disruption at NeueHouse in Manhattan.

What information can we trust? Truth isn’t black and white, so here are three requirements every fact should meet.

A new study explains why and how people choose to avoid information and when that strategy could be beneficial.