AGI

A survey conducted at the Joint Multi-Conference on Human-Level Artificial Intelligence shows that 37% of respondents believe human-like artificial intelligence will be achieved within five to 10 years.

AI expert Ben Goertzel is no stranger to building out-of-this-world artificial intelligence, and he wants others to join him in this new and very exciting field.

5 min

Philosopher Daniel Dennett believes AI should never become conscious — and no, it's not because of the robopocalypse.

4 min

This AI hates racism, retorts wittily when sexually harassed, dreams of being superintelligent, and finds Siri's conversational skills to be decidedly below her own.

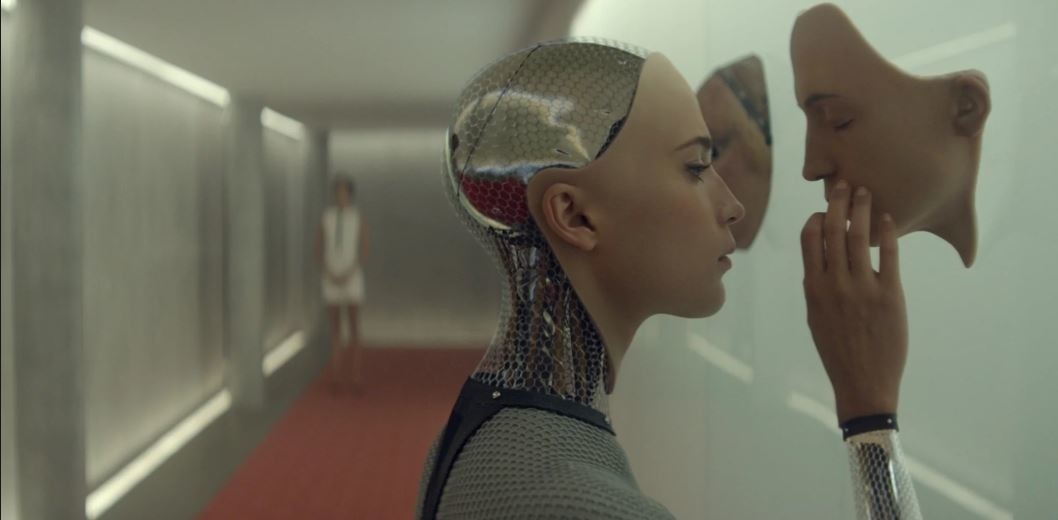

We cannot rule out the possibility that a superintelligence will do some very bad things, says AGI expert Ben Goertzel. But we can't stop the research now – even if we wanted to.

7 min

One day this century, a robot of super-human intelligence will offer you the chance to upgrade your mind, says AGI expert Ben Goertzel. Will you take it?

5 min
