When Studies Go Wrong, Do We Fail Science, or Vice Versa?

Science has taken a few pretty serious hits recently. Try as we might to control for our human biases, their influence can be overwhelming. Alas, science does not operate outside the human realm but as an extension of our natural capabilities, the good as well as the bad ones.

Take the case of Michael LaCour, currently a Ph.D. candidate at UCLA. In late 2014, the journal Science published a study he co-authored as a grad student, which quickly made headlines because it claimed to show that people’s views against gay marriage were easily reversible: a 20-minute personal conversation with a gay survey taker appeared to do the trick.

Now, LaCour’s co-author, Columbia University professor Donald P. Green, has asked Science to retract the study, citing statistical irregularities found by other academics as well as LaCour’s own admission that some of the methodology was falsified (participants were not paid cash, as he had originally claimed in order to explain why response rates to the survey were so high).

LaCour appears to be defending himself on a personal page as well as on Twitter, while the event has stirred a pot long simmering over a fire of professional pressures that sometimes get the better of scientists.

(1/2) I read “Irregularities in LaCour (2014),” posted at http://t.co/SJ3jE89XeD on May 19, 2015 by Broockman et al. I’m gathering evidence

— Michael J. LaCour (@UCLaCour) May 20, 2015

(2/2) and relevant information so I can provide a single comprehensive response. I will do so at my earliest opportunity.

— Michael J. LaCour (@UCLaCour) May 20, 2015

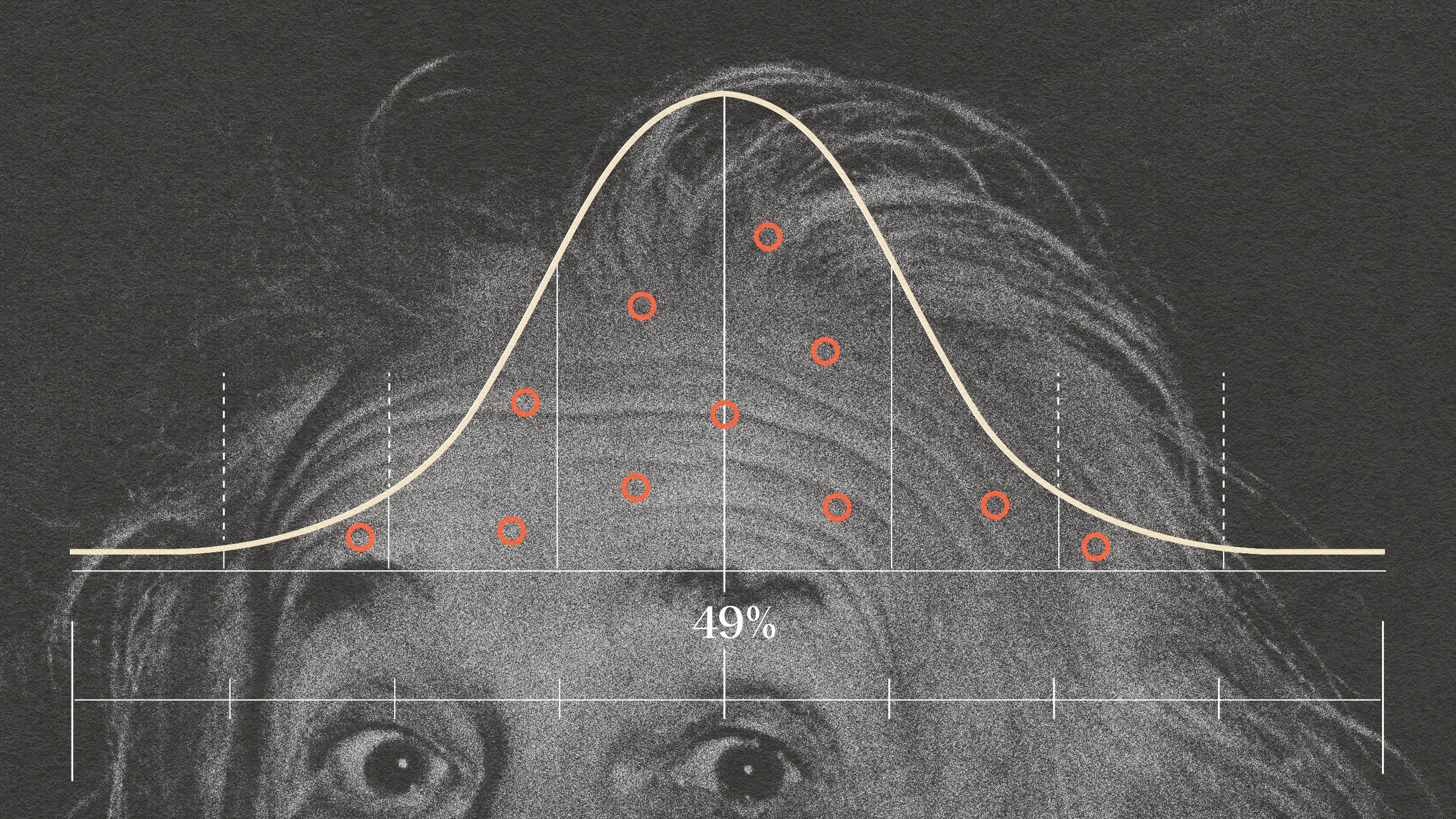

Big Think's Jason Hreha recently posted on a pair of studies that tried to replicate major research findings with an embarrassing lack of success. One study, conducted by the drug company Amgen, tried to replicate a number of oncology studies and achieved a mere 11.3 percent success rate; a larger review of more than 100 psychology articles that was published in the prestigious journal Nature found that only 39 could be replicated. That's a good average for a batter, but not for a science journal.

There were many errors found in these studies: Samples were skewed by the availability of undergraduate college students; researchers overestimated their findings in an attempt to interest the public; and university publicity departments aggrandized their researchers' findings in search of prestige and further funding.

What's worse is that when scientists do attempt to reach out to the public, explaining their results in common parlance, scientific journals tend not to take an interest. That means researchers must often choose between having a social impact and having a professional impact, which is probably not a very motivating or fulfilling position to be in.

Scientific literacy is hard, and sensational media often don't do us many favors, except when it comes to delighting our imagination at the expense of what is actually probably true. But there is also an important place for stoking the popular imagination...

The burst of information we are now accustomed to receiving on a daily basis is at odds with how science is meant to proceed: slowly, methodically, and through consensus. And rather than explaining the limitations of scientific data, popular authors often indulge in speculation about what might be true given an extension of the data beyond what is scientifically legitimate.

What are we to make of genetic dispositions, for example, toward anything and everything? What are we to think when we're told that certain genes affect our ability to make choices, limiting our control over overeating or marital infidelity? If confirmation bias affects even professional scientists, how will it affect society to be told that our moral sensibility is compromised by our genes?

While science is indelibly distinct from the field of ethics, says Richard Dawkins, there are a number of ways in which its facts and reasoning could greatly benefit our ability to understand and repair the world’s suffering.