Whatever you think, you don’t necessarily know your own mind

Do you think racial stereotypes are false? Are you sure? I’m not asking if you’re sure whether or not the stereotypes are false, but if you’re sure whether or not you think that they are. That might seem like a strange question. We all know what we think, don’t we?

Most philosophers of mind would agree, holding that we have privileged access to our own thoughts, which is largely immune from error. Some argue that we have a faculty of ‘inner sense’, which monitors the mind just as the outer senses monitor the world. There have been exceptions, however. The mid-20th-century behaviourist philosopher Gilbert Ryle held that we learn about our own minds, not by inner sense, but by observing our own behaviour, and that friends might know our minds better than we do. (Hence the joke: two behaviourists have just had sex and one turns to the other and says: ‘That was great for you, darling. How was it for me?’) And the contemporary philosopher Peter Carruthers proposes a similar view (though for different reasons), arguing that our beliefs about our own thoughts and decisions are the product of self-interpretation and are often mistaken.

Evidence for this comes from experimental work in social psychology. It is well established that people sometimes think they have beliefs that they don’t really have. For example, if offered a choice between several identical items, people tend to choose the one on the right. But when asked why they chose it, they confabulate a reason, saying they thought the item was a nicer colour or better quality. Similarly, if a person performs an action in response to an earlier (and now forgotten) hypnotic suggestion, they will confabulate a reason for performing it. What seems to be happening is that the subjects engage in unconscious self-interpretation. They don’t know the real explanation of their action (a bias towards the right, hypnotic suggestion), so they infer some plausible reason and ascribe it to themselves. They are not aware that they are interpreting, however, and make their reports as if they were directly aware of their reasons.

Many other studies support this explanation. For example, if people are instructed to nod their heads while listening to a tape (in order, they are told, to test the headphones), they express more agreement with what they hear than if they are asked to shake their heads. And if they are required to choose between two items they previously rated as equally desirable, they subsequently say that they prefer the one they had chosen. Again, it seems, they are unconsciously interpreting their own behaviour, taking their nodding to indicate agreement and their choice to reveal a preference.

Building on such evidence, Carruthers makes a powerful case for an interpretive view of self-knowledge, set out in his book The Opacity of Mind (2011). The case starts with the claim that humans (and other primates) have a dedicated mental subsystem for understanding other people’s minds, which swiftly and unconsciously generates beliefs about what others think and feel, based on observations of their behaviour. (Evidence for such a ‘mindreading’ system comes from a variety of sources, including the rapidity with which infants develop an understanding of people around them.) Carruthers argues that this same system is responsible for our knowledge of our own minds. Humans did not develop a second, inward-looking mindreading system (an inner sense); rather, they gained self-knowledge by directing the outward-looking system upon themselves. And because the system is outward-looking, it has access only to sensory inputs and must draw its conclusions from them alone. (Since it has direct access to sensory states, our knowledge of what we are experiencing is not interpretive.)

The reason we know our own thoughts better than those of others is simply that we have more sensory data to draw on – not only perceptions of our own speech and behaviour, but also our emotional responses, bodily senses (pain, limb position, and so on), and a rich variety of mental imagery, including a steady stream of inner speech. (There is strong evidence that mental images involve the same brain mechanisms as perceptions and are processed like them.) Carruthers calls this the Interpretive Sensory-Access (ISA) theory, and he marshals a huge array of experimental evidence in support of it.

The ISA theory has some startling consequences. One is that (with limited exceptions) we do not have conscious thoughts or make conscious decisions. For, if we did, we would be aware of them directly, not through interpretation. The conscious events we undergo are all sensory states of some kind, and what we take to be conscious thoughts and decisions are really sensory images – in particular, episodes of inner speech. These images might express thoughts, but they need to be interpreted.

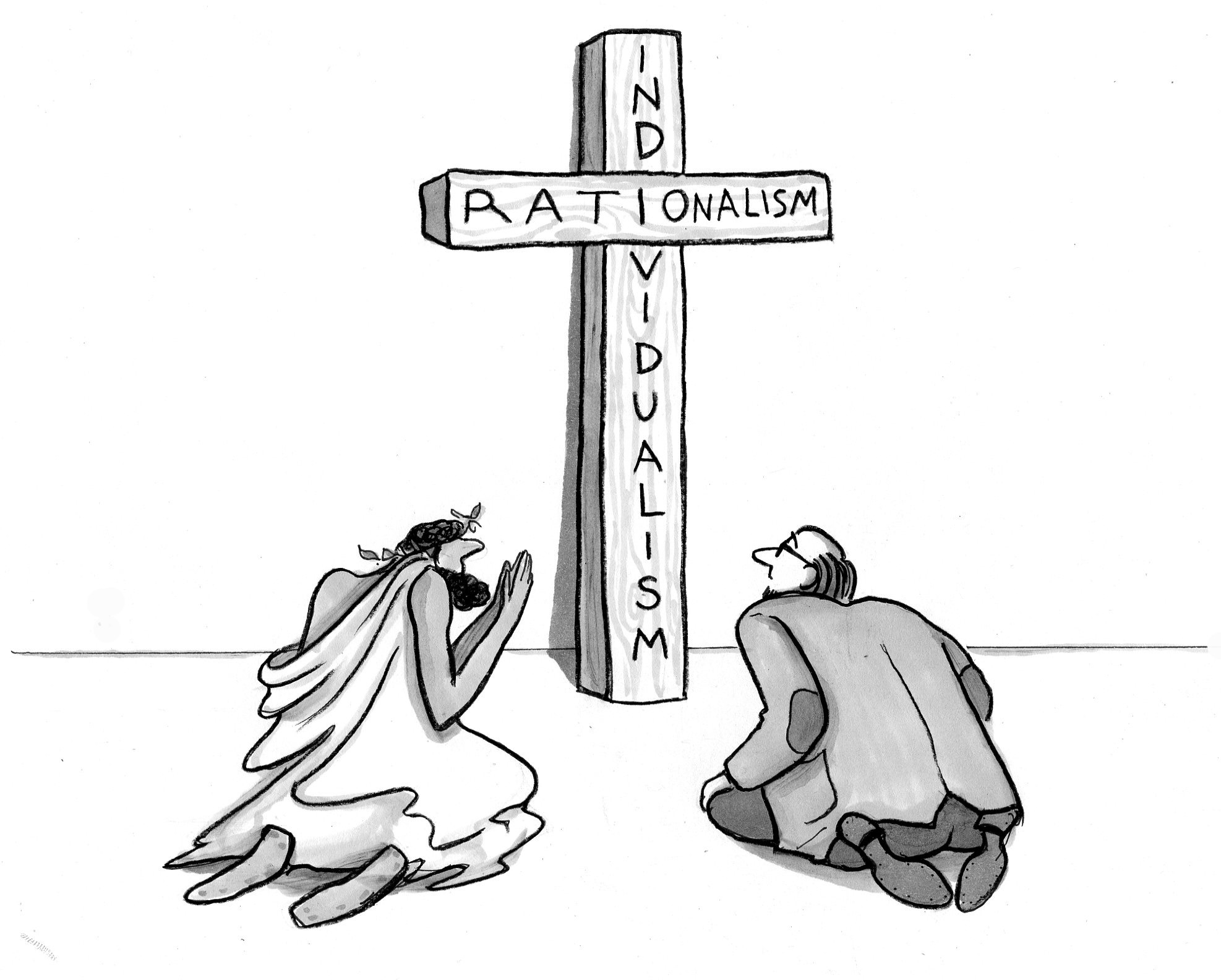

Another consequence is that we might be sincerely mistaken about our own beliefs. Return to my question about racial stereotypes. I guess you said you think they are false. But if the ISA theory is correct, you can’t be sure you think that. Studies show that people who sincerely say that racial stereotypes are false often continue to behave as if they are true when not paying attention to what they are doing. Such behaviour is usually said to manifest an implicit bias, which conflicts with the person’s explicit beliefs. But the ISA theory offers a simpler explanation. People think that the stereotypes are true but also that it is not acceptable to admit this and therefore say they are false. Moreover, they say this to themselves too, in inner speech, and mistakenly interpret themselves as believing it. They are hypocrites but not conscious hypocrites. Maybe we all are.

If our thoughts and decisions are all unconscious, as the ISA theory implies, then moral philosophers have a lot of work to do. For we tend to think that people can’t be held responsible for their unconscious attitudes. Accepting the ISA theory might not mean giving up on responsibility, but it will mean radically rethinking it.

Keith Frankish

This article was originally published at Aeon and has been republished under Creative Commons.