Buh-Bye, ‘Traditional’ Neural Networks. Hello, Capsules.

If you have a recent phone, odds are you have a neural net in your pocket or handbag. That’s how ubiquitous neural nets have become. They’ve got cachet, and manufacturers brag about using them in everything, from voice recognition to smart thermostats to self-driving cars. A search for “neural network” on Google nets nearly 35 million hits. But “traditional” neural nets may already be on their way out, thanks to Geoff Hinton of Google itself. He’s introduced something even cutting-edgier: “capsule” neural nets.

The inspiration for traditional neural networks is, as their name implies, the neurons in our brains, and the way these tiny bodies are presumed to aggregate understanding through complex interconnections between many, many individual neurons, each of which is handling some piece of an overall puzzle.

(VITSTUDIO via SHUTTERSTOCK)

Neural nets are more properly referred to as “artificial neural networks,” or “ANNs” for short. (We’ll just call them “neural networks” or “nets” here.) A neural network is a classifier that can sort an object into a correct category based on input data.

The foundation of a neural network is its artificial neurons, or “ANs,” each one assigning a value to information it’s received according to some rule. Groups of ANs are arranged in layers, and each layer comes to a prediction of some sort that’s then passed on to the next layer, and so on, until understanding is achieved. During training, error signals travel back down through the layers (a process called backpropagation), continually adjusting the ANs’ rules to fix errors and deliver the most accurate outputs.
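To make the layer-by-layer idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the function names, the sigmoid “rule” each neuron applies, and the hand-picked weights of the toy net. A real network would learn its weights from data rather than have them typed in.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs, squashed into (0, 1)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid activation (an assumed rule)

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all look at the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, layers):
    """Pass data through each layer in turn; each layer's output feeds the next."""
    values = inputs
    for weight_rows, biases in layers:
        values = layer(values, weight_rows, biases)
    return values

# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden neurons -> 1 output.
toy_net = [
    ([[0.5, -0.6], [0.1, 0.8], [-0.3, 0.2]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]

prediction = forward([1.0, 0.0], toy_net)
print(prediction)  # a single value between 0 and 1
```

The sketch covers only the forward direction, from input to prediction. The error-correcting step that adjusts the weights is left out to keep it short.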

(SIN14 via SHUTTERSTOCK)

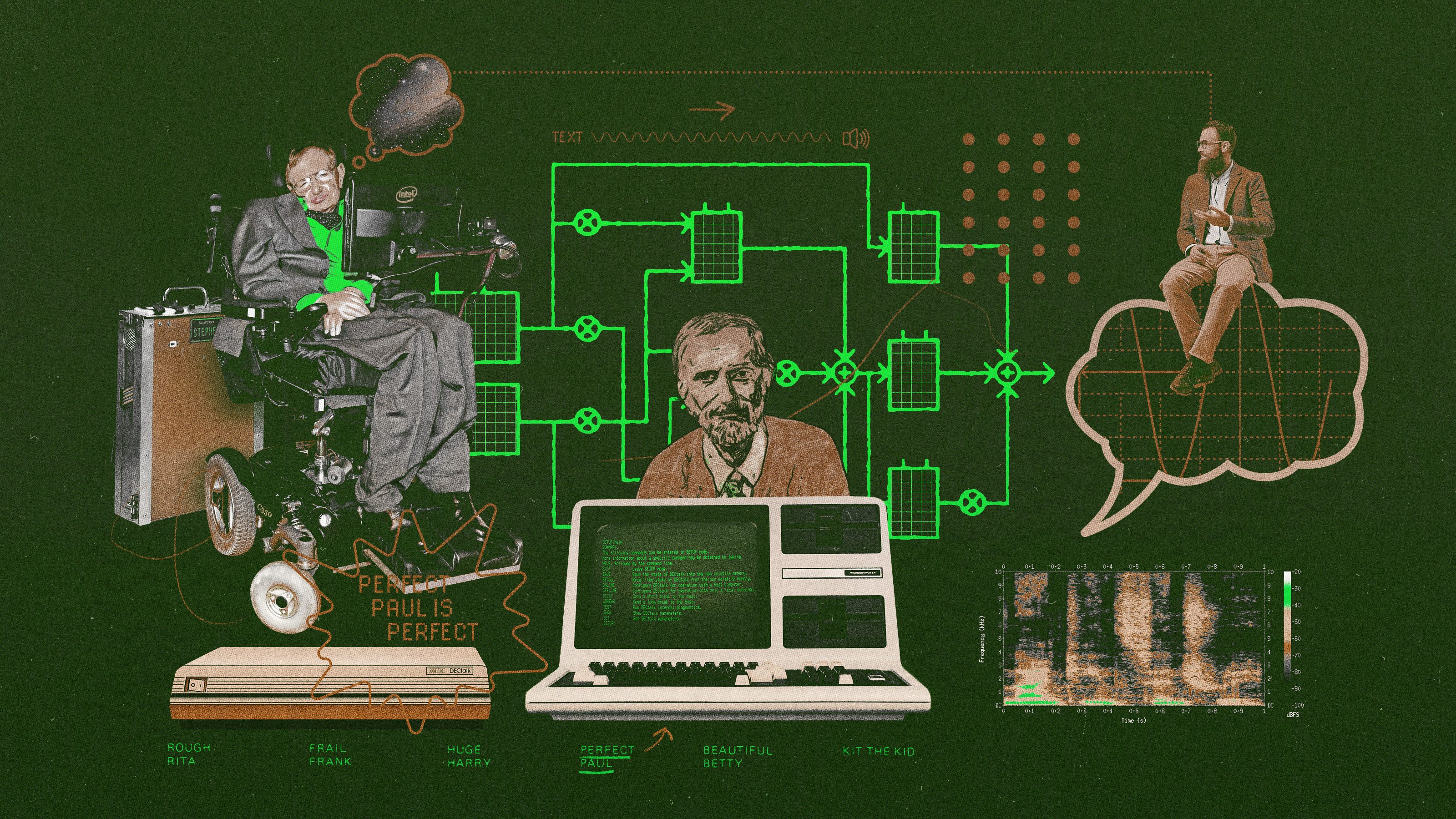

Neural nets predate our modern computers, with the first instance being the Perceptron algorithm developed by Cornell’s Frank Rosenblatt in 1957. Taking advantage of their potential, though, requires modern computational horsepower, and so little was done to develop neural nets until University of Toronto professor Geoff Hinton demonstrated in 2012 just how good they could be at recognizing images. (Google and Apple now use neural nets in their photo platforms, with Google recently revealing that its network has 30 layers.) Neural networks are a critical component in the development of artificial intelligence (AI).
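For a sense of how simple that earliest idea is, here is a rough sketch of a perceptron-style learning rule in Python. It is an illustration under stated assumptions, not Rosenblatt’s original formulation: the function names, the learning rate, and the toy task (learning the logical AND of two inputs) are all ours.

```python
def predict(inputs, weights, bias):
    """Fire (1) if the weighted sum of the inputs is positive, otherwise stay silent (0)."""
    activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge the weights toward the right answer each time a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Toy data set (an assumption for illustration): output 1 only when both inputs are 1.
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(and_samples)
results = [predict(inputs, weights, bias) for inputs, _ in and_samples]
print(results)
```

Nobody tells the program what “AND” means. It only sees examples and corrects itself when it gets one wrong.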

One appealing aspect of neural networks is that programmers don’t need to tell them how to do their job. Instead, a massive database of samples — images, voices, etc. — is provided to the neural network, which then figures out for itself how to identify what it’s been given. It takes the neural net a while, and lots of samples, to establish the identities of similar items presented under different conditions: in different positions, for example. As Tom Simonite writes in WIRED:

To teach a computer to recognize a cat from many angles, for example, could require thousands of photos covering a variety of perspectives. Human children don’t need such explicit and extensive training to learn to recognize a household pet.

This is a problem: Their need for so many samples has been limiting neural nets’ usefulness to things for which massive data sets exist. In order for AI to be of use with more limited data sets — for analyzing medical imagery, for example — it needs to be smarter with less input data. Hinton recently told WIRED, “I think the way we’re doing computer vision is just wrong. It works better than anything else at present, but that doesn’t mean it’s right.”

Hinton has been pondering a potential solution, capsules, since the late ’90s, and has just published two articles, at arXiv and OpenReview, detailing a functional “capsule network” because, as he says, “We’ve finally got something that works well.”

Geoff Hinton (UNIVERSITY OF TORONTO)

With Hinton’s capsule network, layers are composed not of individual ANs, but rather of small groups of ANs arranged in functional pods, or “capsules.” Each capsule is programmed to detect a particular attribute of the object being classified, thus getting around the need for massive input data sets. This makes capsule networks a departure from the “let them teach themselves” approach of traditional neural nets.

A layer is assigned the task of verifying the presence of some characteristic, and when enough capsules are in agreement on the meaning of their input data, the layer passes on its prediction to the next layer.
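The agreement step can be sketched in a few lines of Python. This is a simplified illustration loosely modeled on the “routing by agreement” idea, not Hinton’s actual implementation: the function names, the two-dimensional “votes,” and the sample numbers are all assumptions. Each lower-level capsule casts a vote (a small vector) for each higher-level capsule, and votes that agree with the emerging consensus get more weight on the next pass.

```python
import math

def length(vec):
    """Length of a vector; here it stands for how confident a capsule is."""
    return math.sqrt(sum(x * x for x in vec))

def squash(vec):
    """Shrink a vector so its length lands between 0 and 1, keeping its direction."""
    norm_sq = sum(x * x for x in vec)
    if norm_sq == 0.0:
        return [0.0] * len(vec)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in vec]

def softmax(values):
    """Turn raw scores into weights that sum to 1."""
    top = max(values)
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def route(votes, iterations=3):
    """votes[i][j] is the vector lower capsule i predicts for higher capsule j."""
    n_lower, n_upper, dim = len(votes), len(votes[0]), len(votes[0][0])
    logits = [[0.0] * n_upper for _ in range(n_lower)]
    for _ in range(iterations):
        # Each lower capsule splits its attention across the higher capsules.
        coupling = [softmax(row) for row in logits]
        outputs = []
        for j in range(n_upper):
            total = [sum(coupling[i][j] * votes[i][j][d] for i in range(n_lower))
                     for d in range(dim)]
            outputs.append(squash(total))
        # Votes that point the same way as the consensus earn a higher score.
        for i in range(n_lower):
            for j in range(n_upper):
                logits[i][j] += sum(a * b for a, b in zip(votes[i][j], outputs[j]))
    return outputs, coupling

# Hypothetical votes from 3 lower capsules for 2 higher capsules.
# They agree about capsule 0 and disagree about capsule 1.
votes = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.9, 0.1], [-1.0, 0.0]],
    [[1.0, -0.1], [0.0, 1.0]],
]

outputs, coupling = route(votes)
print([round(length(v), 3) for v in outputs])
```

In this toy run, the higher capsule whose incoming votes agree ends up with the longer output vector, meaning it is more confident that its attribute is present, while the capsule receiving conflicting votes stays weak.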

So far, capsule nets have proven as adept as traditional neural nets at understanding handwriting, and have cut the error rate in half for identifying toy cars and trucks. Impressive, but it’s just a start. The current implementation of capsule networks is, according to Hinton, slower than it will have to be in the end. He’s got ideas for speeding them up, and when he does, Hinton’s capsules may well spark a major leap forward in neural networks, and in AI.