New Survey: Scientists and the Public Have Different Views. Well, D’UH! But WHY!?

The news and comment-o-sphere are paying a lot of attention to a Pew Research survey, done in association with the American Association for the Advancement of Science (AAAS), which finds that people trust science and scientists, and applaud the benefits of science, but when asked about specific science issues, the public has different views than scientists do. While it is important to know that this perception gap exists, and that faith in science in general remains high, the news coverage and punditry and the survey itself are missing one thing: an explanation for what causes those different views. Why the gap?

That’s too bad, because the explanation for why different people see the same issues in such different ways could make surveys like this truly valuable, instead of telling us what we already know, albeit on new issues. And it’s really too bad because those explanations come from cognitive psychology research that began back in the 1970s, and which found precisely the same gap. Too bad the Pew research didn’t use that knowledge to get at explanations for the perception gap that could help identify ways it might be narrowed.

The research that helps explain this perception gap comes from several fields.

1. As the environmental movement fostered rising concern about industrial chemicals and nuclear radiation in the ’70s, regulators and scientists in those fields were frustrated by Chemonoia and RadioPhobia. Yes, those technologies posed risks, but people’s fears far exceeded the likelihood and severity of those risks. Nuclear and chemical regulators and scientists wanted to know why.

So pioneers like Paul Slovic and Baruch Fischhoff and Sarah Lichtenstein and others initiated research into the psychology of risk perception (risks to health, as opposed to the study of people’s irrational choices about monetary risks, which was already well underway). And they found what the Pew survey has confirmed. Experts and “regular people” see science issues differently. In fact, Slovic et al. found that different groups of regular people saw things differently too.

In a 1979 paper in the journal Environment titled “Rating the Risks,” the researchers asked four groups of people to rank the perceived risk of death from 30 activities and technologies: members of the League of Women Voters, college students (always handy for psych experiments), members of business and professional clubs, and scientific experts in risk assessment. They found the same gap the Pew study found, albeit on different issues.

Courtesy: Paul Slovic, The Perception of Risk

Their research went on to discover why those gaps exist. They revealed a set of psychological characteristics, “fear factors” that make some risks feel scarier and some risks feel less scary. Those factors help explain some of the Pew findings. For example, Slovic et al. established that we’re more afraid of human-made risks than natural ones, which helps explain the gap regarding the safety of human-made GMO foods…

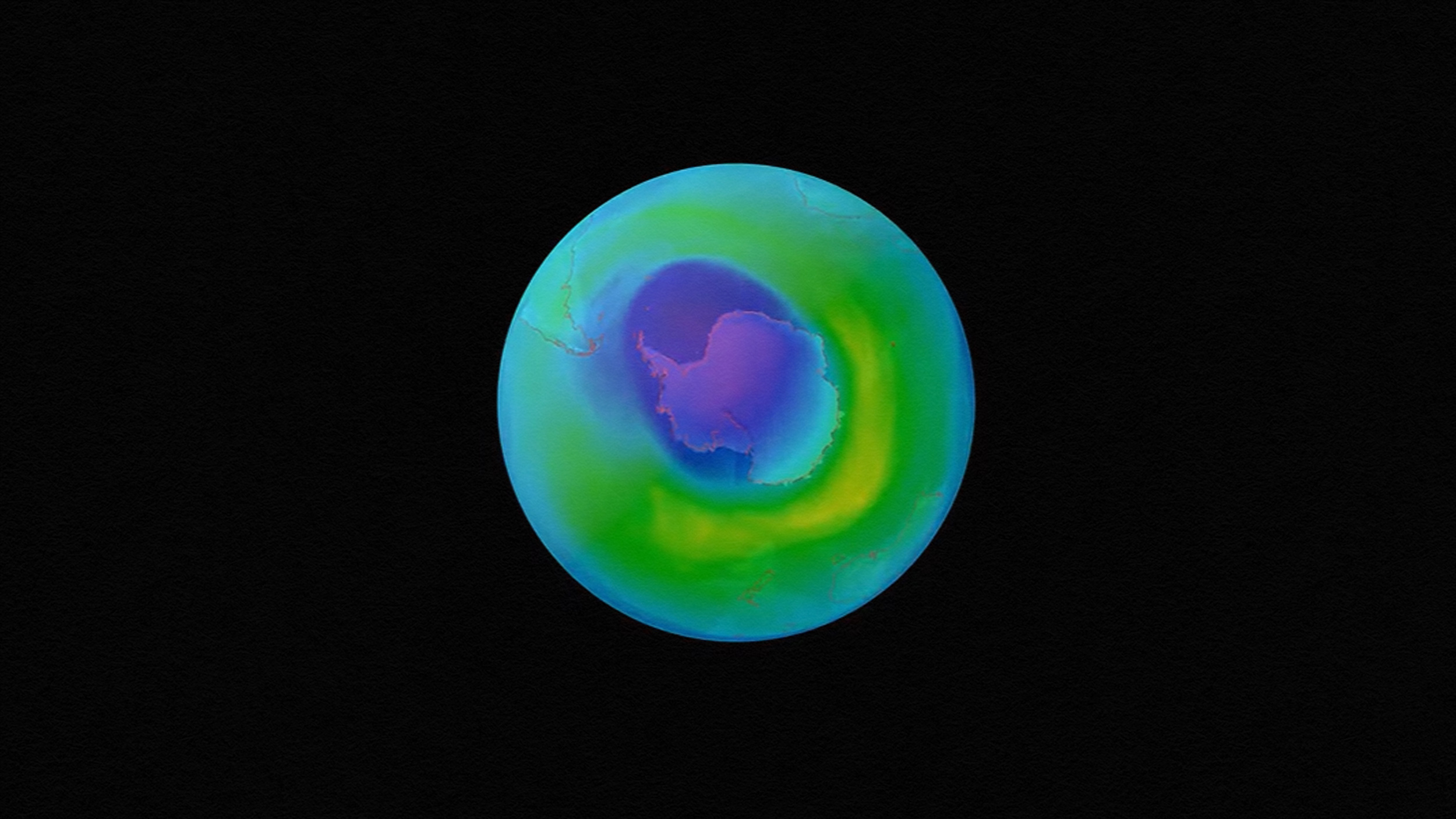

Survey images courtesy: Pew Research Center

… and the gap about the safety of eating food grown with pesticides.

We’re more afraid of risks imposed on us than those we take voluntarily, which helps explain the gap on the Pew question about childhood vaccination (note that it asks whether the program should be required).

Slovic and colleagues also discovered that while experts’ views are affected by these emotional filters too, the views of people with more information and a scientific bent to think about things critically aligned more closely with the evidence. Which helps explain the gap between public and “scientific expert” views in general.

2. Slovic was a colleague for a time of Daniel Kahneman and the cognitive psychology researchers who were studying why people made monetary choices that seemed to defy rationality. They discovered a broad set of heuristics and biases — mental shortcuts — we use to quickly and subconsciously turn partial information into our judgments and decisions.

Kahneman et al. learned, for example, that once we’ve developed an opinion or view, we tend to stick with it, fitting new information into what we already believe and rejecting information that conflicts. This is known as confirmation bias. You can see how that might have played a role in the answer Pew got on evolution, which a third of Americans in this survey still deny.

They also discovered the bias that “framing” imposes on people’s views. Essentially, how we first hear an issue described shapes our initial views, and then we stick with those views. The public’s picture of GMOs has largely been painted by fear-mongering opponents. Small wonder that 63% of the survey respondents say GMO food is unsafe to eat, while 88% of the experts, whose views have been framed more by the scientific evidence than by the alarms of advocates (in 15 years, no credible studies have ever found human health harm from GMO food), say it’s safe.

3. A modern theory of risk perception that builds on Slovic’s work, Cultural Cognition by Dan Kahan, has found that we tend to see the facts through the lenses of the groups we belong to, based on the values shared by those groups. This is particularly true of issues that have become polarized, totems of tribal values. So when Pew asked about climate change…

… or support for offshore drilling…

… those answers in part reflected people’s feelings about environmental issues and their underlying political values.

This all boils down to one simple thing. Cognition is subjective. We all perceive information, about risk or science or anything, through instinctive and emotional filters that help us subconsciously sense how that information feels. Slovic and Melissa Finucane called this the Affect Heuristic, the fast and mostly subconscious mental process that takes what we know (which is usually not a lot, especially for non-experts) and assesses it based on our experiences and life circumstances and education, and those psychological “fear factors,” and cognitive processes like confirmation bias and framing, and the values of the groups with which we most closely identify… and from that complex set of influences we come up with our views and opinions and perceptions, and what we tell pollsters.

These insights seem to have been ignored by the survey, which asked people how they felt, but not why. (Pew says a later survey will dive into demographics, but those don’t really reveal the cognitive psychological roots of people’s views either.) These explanations also seem to have been ignored by the scientists surveyed, who offered trite, old explanations for the perception gap, explanations that don’t display a lot of… well… expertise.

The perception gap identified in the Pew survey is NOT about people not knowing enough, or expecting solutions too quickly. This is the old-school, post-Enlightenment belief that once people are educated enough, we can all get it “right.” Which is wrong. And it’s a problem, because until we realize that the perception of risk (or anything) is not just a matter of the facts and objective rationality, but also a matter of emotions and instincts, we won’t be able to narrow these perception gaps. And that’s a problem because as individuals and together as a society, we make a lot of dumb and dangerous choices when our perceptions don’t match the evidence.

(top image courtesy RiskSense.org)