Scientists Create an Intelligence That Can See Its Own Future

Researchers from UC Berkeley have created a technology that allows robots to imagine how their actions will turn out in the future. Using this approach, the robots are able to interact with objects that they’ve never come across previously.

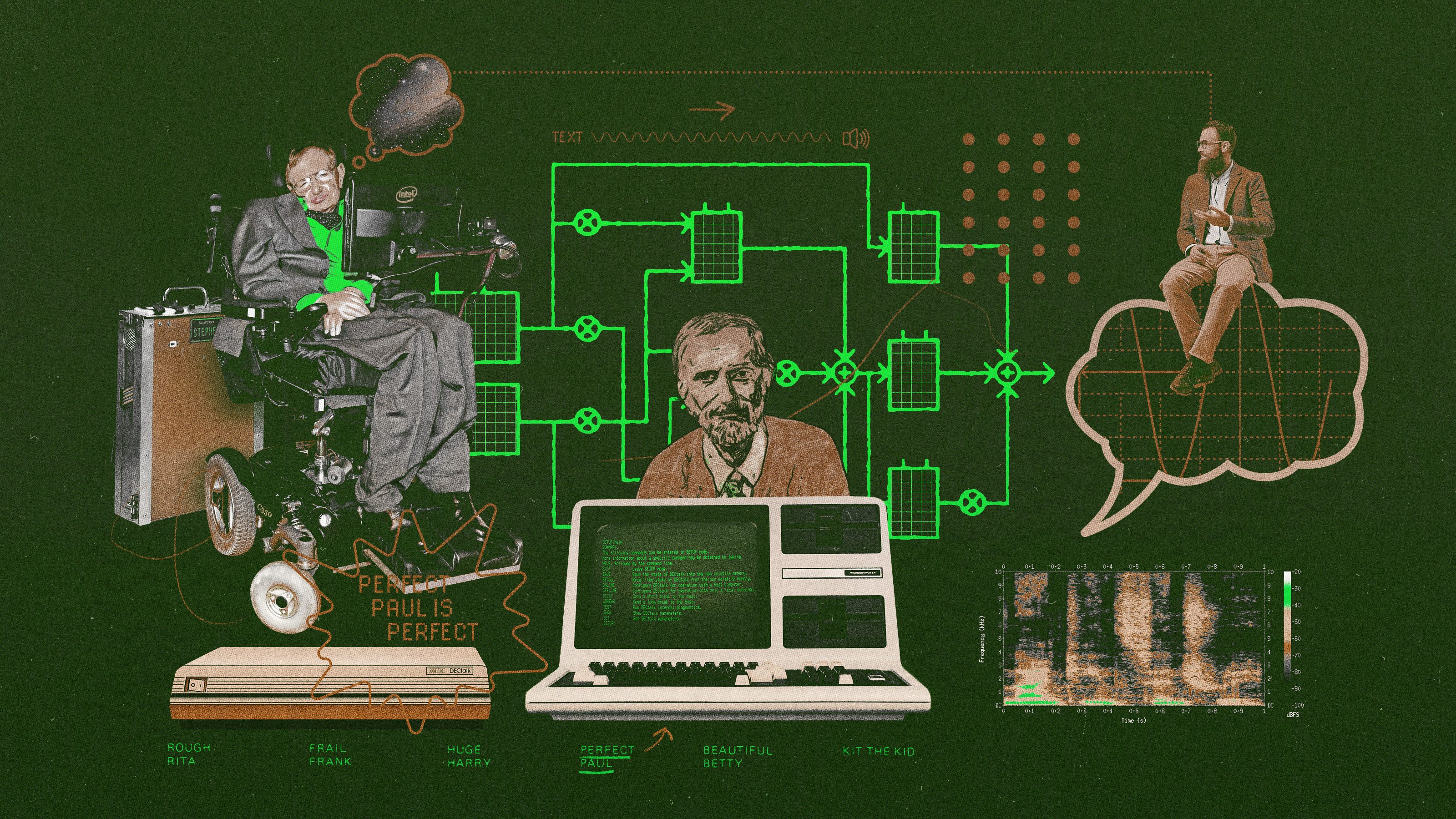

The technique that lets the robots predict the future is called visual foresight. It gives the robotic system the ability to anticipate what its camera will see if it performs a particular sequence of movements.

In essence, the system gives the robot an imagination that predicts several seconds into the future. That is not long, but it is enough for the robot, named Vestri, to perform tasks without human help or much background information about its environment. The visual imagination is improved simply through unsupervised exploration, with the robot playing with objects on a table much as a child would. That play helps the robot build a predictive model of its environment, which it then uses to manipulate objects it hasn’t encountered before.
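To make the idea of planning with imagined outcomes concrete, here is a minimal Python sketch. It is not the Berkeley team's code: the `predict` function is a hypothetical stand-in for a learned model, the "world" is just a 2D object position, and random sampling is one simple planning strategy assumed for illustration.

```python
import random

def predict(position, action):
    """Hypothetical stand-in for a learned predictive model.

    Assumption for illustration: an action simply shifts the object.
    """
    return (position[0] + action[0], position[1] + action[1])

def plan(position, goal, horizon=5, num_samples=200, seed=0):
    """Imagine many random action sequences and keep the most promising one."""
    rng = random.Random(seed)
    best_cost, best_actions = float("inf"), None
    for _ in range(num_samples):
        # Propose a candidate sequence of small pushes.
        actions = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(horizon)]
        # Roll the sequence forward in imagination only; nothing moves yet.
        imagined = position
        for action in actions:
            imagined = predict(imagined, action)
        # Score the imagined outcome by squared distance to the goal.
        cost = (imagined[0] - goal[0]) ** 2 + (imagined[1] - goal[1]) ** 2
        if cost < best_cost:
            best_cost, best_actions = cost, actions
    return best_actions, best_cost

actions, cost = plan(position=(0.0, 0.0), goal=(2.0, 1.0))
```

A robot using a scheme like this would typically carry out only the first action of the winning sequence, look at the result, and plan again.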


Underlying this ability is a deep learning technology called “dynamic neural advection” (DNA). DNA-based models predict how the pixels in an image will move from one frame to the next, depending on the robot’s action. Advances in such video prediction models give robots greater planning capacity and let them perform complex tasks such as repositioning multiple objects and sliding toys around obstacles.
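The pixel-moving idea can be illustrated with a toy Python sketch. This is a heavy simplification and not the DNA model itself: the real system learns its motion predictions with a neural network, whereas the hypothetical `flow_for_action` below just assumes every pixel shifts by the same whole-pixel amount as the action.

```python
def flow_for_action(action, height, width):
    """Hypothetical stand-in for the learned network.

    Assumption for illustration: every pixel moves by `action` = (dy, dx).
    """
    return [[action for _ in range(width)] for _ in range(height)]

def advect(frame, flow):
    """Build the next frame by carrying each pixel along its predicted motion."""
    height, width = len(frame), len(frame[0])
    next_frame = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dy, dx = flow[y][x]
            # Look up where this pixel's content came from in the current frame.
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < height and 0 <= src_x < width:
                next_frame[y][x] = frame[src_y][src_x]
    return next_frame

# A single bright "object" pixel on a dark table.
frame = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
]
# Imagine pushing one pixel to the right.
predicted = advect(frame, flow_for_action((0, 1), height=3, width=4))
```

Here the bright pixel ends up one column to the right in `predicted`, which is the frame the robot would expect to see after the push.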

Sergey Levine, an assistant professor at Berkeley whose lab developed the technology, thinks robotic imagination can help machines learn intricate skills.

“In the same way that we can imagine how our actions will move the objects in our environment, this method can enable a robot to visualize how different behaviors will affect the world around it,” said Levine. “This can enable intelligent planning of highly flexible skills in complex real-world situations.”

He compared the learning method to how children learn by playing with toys. This research gives robots the same opportunity.

Chelsea Finn, a doctoral student from Levine’s lab, who is also the inventor of the original DNA model, remarked that the significant advancement here is that robots can now learn on their own.

“In the past, robots have learned skills with a human supervisor helping and providing feedback,” said Finn. “What makes this work exciting is that the robots can learn a range of visual object manipulation skills entirely on their own.”

The technology could find applications in self-driving cars, which need to predict future events, and in robotic assistants.