The Sartre Fallacy, or Being Irrational About Reason

Consider the story of my first encounter with Sartre.

I read Being and Nothingness in college. The professor, a Nietzsche aficionado, explained Sartre’s adage that existence precedes essence. After two years of ancient philosophy the idea struck me as profound. If it was true, then Plato and Aristotle were wrong: there are no Forms, essences or final causes. Meaning isn’t a fundamental abstract quality; it emerges from experience.

But that’s not what has remained with me. We might simply say that the flamboyant French existentialist believed that we ought to live in “good faith” in order to live authentically. To the psychologist the authentic life is a life without cognitive dissonance; the realist might say it means you don’t bullshit yourself. For Leon Festinger it’s a world in which his doomsayers admit that the destruction of Earth is not, in fact, imminent. For Aesop it’s a world in which the fox admits the grapes are ripe.

Sartre’s philosophy of authenticity remains with me because of a relationship I had with a classmate. I have in mind an ironically inclined peer who, as I recall, would get high after class and talk about living authentically. Mind you, he did this while wearing clothes that referenced an earlier generation and drinking beer marketed to a very different demographic than his own. He was doubling down on irony.

You can imagine my horror when I realized that I was not unlike him. I was a college student, after all: I was selective with my clothes and I too engaged in pseudo-intellectual conversations with my friends. Worse, I used Being and Nothingness to confirm my authenticity and his phoniness. If the role of the conscious mind is to reconcile the pressures of the external world with the true self, then I was hopelessly inauthentic. I was a poseur living an unexamined life. The joke was on me.

It wasn’t until recently that the lesson of my time with Sartre and the hipster truly registered. There are times, I realized, when people (myself included) behave or decide counter to recently acquired knowledge. It’s worse than simply misinterpreting or not learning; it’s doing the opposite of what you’ve learned – you’re actually worse off. I’m tempted to term this the “Hipster Mistake” after my existential experience in which reading Sartre caused me to live less authentically. But let’s call this counterintuitive phenomenon the Sartre Fallacy, after my friend Monsieur Sartre.

After the Sartre Fallacy fully registered, a perfect example from cognitive psychology came to mind. Change blindness occurs when we don’t notice a change in our visual field. Sometimes this effect is dramatic. In one experiment researchers created a brief movie about a conversation between two friends, Sabina and Andrea, who spoke about throwing a surprise party for a mutual friend. The two women discuss the party as the camera cuts back and forth between them, sometimes focusing on only one and at other times on both. After the participants watched the minute-long movie the researchers asked, “Did you notice any unusual difference from one shot to the next where objects, body positions, or clothing suddenly changed?”

The researchers – Dan Simons and Daniel Levin – are wily psychologists. There were, in fact, nine differences throughout the movie, purposely put in to test change blindness. They were not subtle, either. In one scene Sabina is wearing a scarf around her neck and in the next she isn’t; the color of the plates in front of Sabina and Andrea changes from one scene to the next. Nearly every participant said nothing of the alterations.

In a follow-up experiment Levin explained the premise of the Sabina-Andrea study (including the results) to undergraduates. Seventy percent reported that they would have noticed the changes even though no one from the original study did. Let this detonate in your brain: the undergrads concluded that they would notice the changes while knowing that the participants in the original study did not. The lesson here is not that change blindness exists. It is that we do not reconsider how much of the world we miss after we learn about how much of the world we miss – the follow-up experiment actually made participants more confident about their visual cortices. They suffered from change blindness blindness, so to speak (the visual equivalent of the Sartre Fallacy), even though the sensible conclusion would be to downgrade their confidence. Here we see the Sartre Fallacy in action (and if you doubt the results of the second experiment, you’re in real trouble).

I committed a similar error as an undergraduate about one year after falling prey to the Sartre Fallacy. My interest in philosophy peaked around the time I finished Being and Nothingness. The class continued with de Beauvoir’s The Second Sex but it was not, I quickly noticed, that sexy; The Fall and Nietzsche’s bellicosity were much more seductive. In fact, with a few exceptions (Popper and the later Wittgenstein), philosophy becomes incredibly boring after Nietzsche. The reason, simply, is that the old prevails over the new and not enough time has passed to determine who is worth reading. It’s a safe bet that the most popular 20th-century philosopher in the 23rd century is currently unpopular.* This is one reason why death is usually the best career move for philosophers. Good ideas strengthen with time.

With this in mind I turned to psychology, where experiments like the ones the Dans conducted reignited my neurons. I especially enjoyed the literature on decision-making, now a well-known domain thanks to at least three more psychologists named Dan: Kahneman, Ariely, and Gilbert. I read Peter Wason’s original studies from the 1960s and subsequent experimentation, including Tom Gilovich’s papers on the hot hand and clustering illusions. Confirmation bias, the first lesson in this domain, stood out: the mind drifts towards anything that confirms its intuitions and preconceptions while ignoring anything that looks, sounds or even smells like opposing evidence. Proverbs have warned of this systematic and predictable bias for about as long as there has been language, but the best contemporary description comes from Gilbert: “The brain and the eye may have a contractual relationship in which the brain has agreed to believe what the eye sees, but in return the eye has agreed to look for what the brain wants.” I still remember reading this gem and immediately uncapping my pen.

But then something unexpected occurred. Suddenly and paradoxically, I saw the world only through the lens of this research. I made inaccurate judgments, drew illogical conclusions and was irrational about irrationality because I filtered my beliefs through the literature on decision-making – the same literature, I remind you, that warns against the power of latching onto beliefs. Meanwhile, I naively believed that knowledge of cognitive biases made me epistemically superior to my peers (just as knowing Sartre made me more authentic).

Only later did I realize that learning about decision-making gives rise to what I term the confirmation bias bias, or the tendency for people to generate a narrow (and pessimistic) conception of the mind after reading literature on how the mind thinks narrowly. Biases restrict our thinking, but learning about them should do the opposite. Yet you’d be surprised how many decision-making graduate students and overzealous Kahneman, Ariely and Gilbert enthusiasts do not understand this. Knowledge of cognitive biases, perhaps the most important thing to learn for good thinking, actually increases ignorance in some cases. This is the Sartre Fallacy – we think worse after learning how to think better.

Speaking of Thomas Gilovich, right around the time I became a victim of confirmation bias bias he gave a lecture in our psychology department. I sat in on a class he taught before his lecture and listened to him explain a few studies that I already knew from his book, which I had bought and read. Of course, I took pride in this much like a hipster at a concert bragging about knowing the band “before everyone else.” It was Sartre part II. Somehow I had learned nothing.

After his class I jumped at the opportunity to spend a few hours with him while he killed time before his lecture to the department. I’d been studying his work for months, so you might imagine how exciting this was for me. I asked him about Tversky (his adviser) and Kahneman, practically deities in my world at the time, as well as other researchers in the field. I regret not remembering a thing he said. But thanks to pen and paper I will remember the note he wrote after I asked him to sign my copy of his book: “To a kindred spirit in the quest for more rational thinking.” How foolish, I thought. Didn’t Gilovich know that, given confirmation bias, “more rational thinking” is impossible? After all, if biases plagued the mind, how could we think rationally about irrationality?

Again, the joke was on me.

*Likewise, it’s unlikely a new ancient Greek philosopher will become popular, unless more papyrus fragments turn up at Oxyrhynchus or more charred scrolls are deciphered at Herculaneum. Another example: the most recent book in the top ten of the Modern Library’s 100 best English-language novels of the 20th century is Catch-22, published in 1961. The AFI’s list of the top 100 movies shows a similar effect.

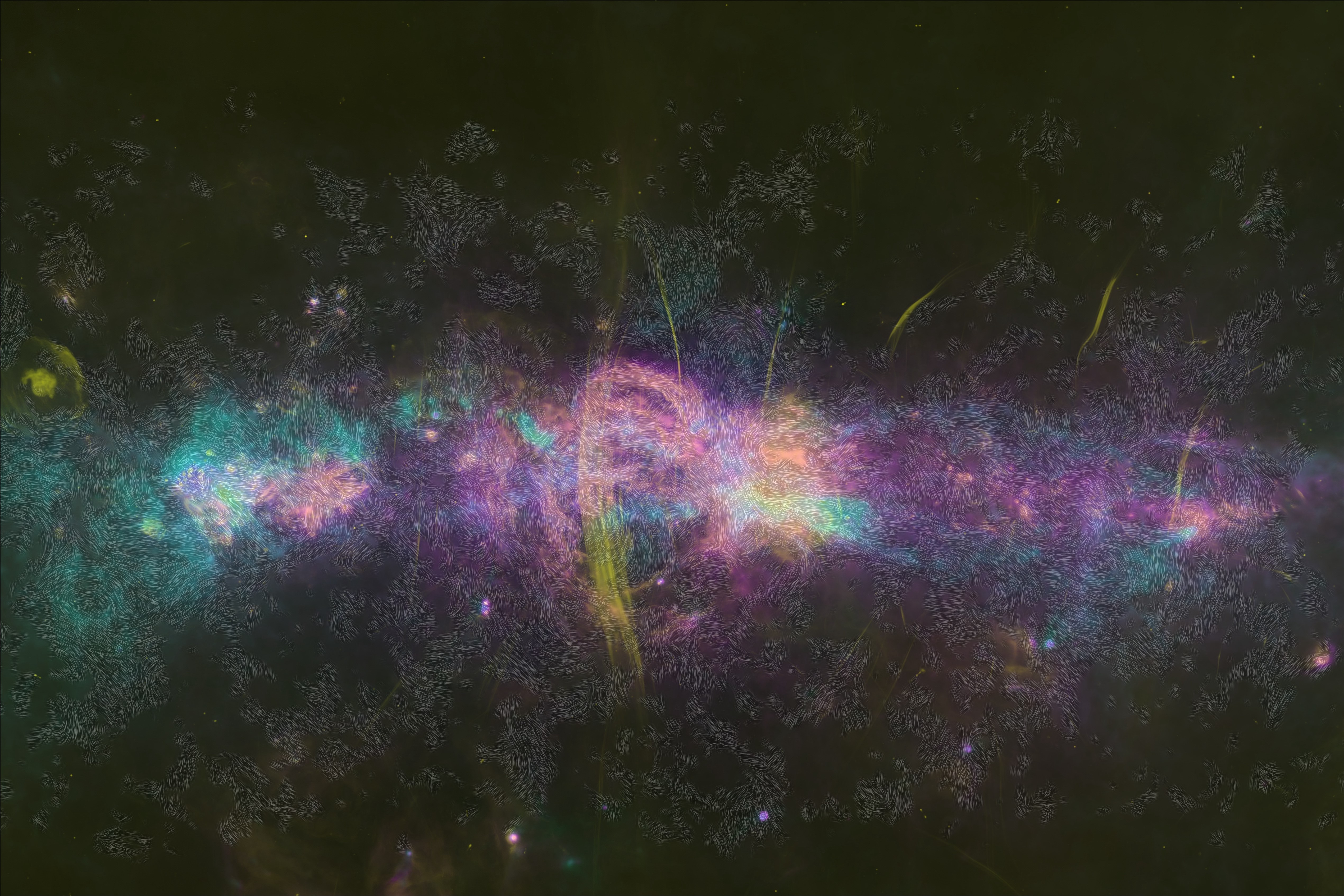

Image via Wikipedia Creative Commons