Is Society Prepared to Handle Tough Questions Surrounding AI?

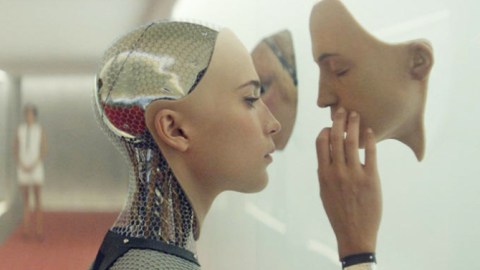

Our future as humans might be great. Or non-existent.

“On one side of the equation,” states Sairah Ashman, “there is a very utopian view of what artificial intelligence will bring us.” Ashman is Global COO of Wolff Olins, a creative consultancy. She recently gave a thought-provoking talk titled Playing God, Being Human at the Collision conference in New Orleans, and wrote a related essay to spark debate.

After painting a quick picture of a utopian future filled with flying cars and self-healing pods, Ashman pivots to a future we may fear.

“But on the other side of the equation, there is also a very dystopian view. Which is that we will reach a stage where we become redundant. And where artificial intelligence and machines will take over the world. And what will our place be?”

How Do We Prevent a Dystopian Future?

The future doesn’t simply happen to us, of course. Our future, especially as it relates to advancing AI, depends on the decisions we make today about expectations, ethics, and regulation. The million-dollar question is whether we are adequately prepared to make those decisions. And who should be tackling these questions around advancing technology?

“Historically we might have looked towards government,” states Ashman in Playing God, Being Human. “But they’re somewhat handicapped, they’re not moving at the same pace as technology. They’re bureaucratic institutions, they are different around the world, they have different agendas. So perhaps they’re not going to give us the answers.”

Like many people thinking about our future with AI, such as Elon Musk and Bill Gates, Ashman advocates a more nuanced view of the future. “I take a very optimistic view of what artificial intelligence could do for us in the future. We don’t have to think of this as a black and white subject.”

I reached out to Sairah Ashman to dig a little deeper into the issues she raised in her talk and to get her view on the best steps forward. In particular, I was curious why she believes governments are ill-equipped to handle the challenges of advancing tech. Ashman pointed out three reasons why governments may not be best suited:

THREE REASONS WHY GOVERNMENTS MAY NOT BE ABLE TO HANDLE AI QUESTIONS:

“It’s interesting that lots of tech businesses, through no fault of their own I would say, are in a position where the decisions that they make in one part of the planet show up in other parts really easily without them necessarily realizing. There are a lot of unintended consequences.” -Sairah Ashman

If Governments Are Ill-Prepared, Will Silicon Valley Save Us?

“Increasingly there is a view that the answer will not necessarily come out of Silicon Valley,” says Ashman, when I ask her about the likely path forward. She mentions her conversations with people in Silicon Valley who are taking issues of ethics and impact very seriously. In recent weeks, we have seen the launch of the OpenAI initiative, along with Microsoft’s Satya Nadella openly talking about preventing a dystopian future.

“The great thing about these tech companies and the fact that they are commercial,” says Ashman, “is that they have to be highly responsive.”

Beyond the responsiveness that tech companies may need to show from a purely business standpoint, Ashman points to the power of the community. If we can’t fully rely on our governmental systems to think carefully about the future of AI, there are three questions we should be asking about any advancing technology:

Where does it come from?

Why is it being produced?

How do we feel about that?

“I agree with Ashman’s framing of some of the big questions about artificial intelligence,” states Don Heider, after viewing Ashman’s Playing God, Being Human talk. Heider is the founder of the Center for Digital Ethics & Policy at Loyola University Chicago.

“The large tech companies as they mature have reached a crucial moment where coming to terms with the consequences they create needs to be a high priority.”-Don Heider, Why Facebook Should Hire a Chief Ethicist (Op-Ed for USA Today)

Heider has a few additional questions he would like tech companies to think about:

Whether advancing AI leads to a utopian or a dystopian future seems to depend on the thoughtfulness, or lack thereof, that we apply today.

—

Want to connect? Reach out at @TechEthicist and on Facebook. Exploring the ethical, legal, and emotional impact of social media & tech. Co-host of the upcoming show, Funny as Tech.

“Technology is not our enemy. Technology is a useful servant, but it could also become a terrible master. Technology is a tool to be employed, not a purpose to employ us. How much of your humanness are you willing to surrender in order to tap into the convenience of those magical machines? The more we robotize the world, the less we govern ourselves.” -Gerd Leonhard, author of Technology vs. Humanity: The Coming Clash Between Man and Machine