Psychopundits: A Consumer Guide

Right after my recent post on “psychopunditry,” I came across signs of this kerfuffle between the writer Jonah Lehrer and the psychologist Christopher Chabris (not to be confused with this other kerfuffle). Short version: Chabris thinks Lehrer exaggerates the reach and meaning of research findings, distorting the science. Their debate, like the National Review piece in my earlier post, turns on an important question: How can experiments and other research from the mind sciences be brought to bear on questions of politics, policy and practical life? Or, to put it less abstractly, if lab experiments suggest that people looking at blue screens are better at a creative task than people looking at red screens, should your company paint the office walls blue? An interested bystander will hear contradictory opinions from authoritative sources (Chabris says Lehrer’s inference was hooey; the authors of the study Lehrer quoted said it was OK). What to do? How are you, the non-expert reader, to know who is right?

I do believe there are standards you can apply to psychopunditry as it flies across your screen. At any rate, these are the questions I ask myself when looking at research papers, and I commend them to you.

1. How big is the effect?

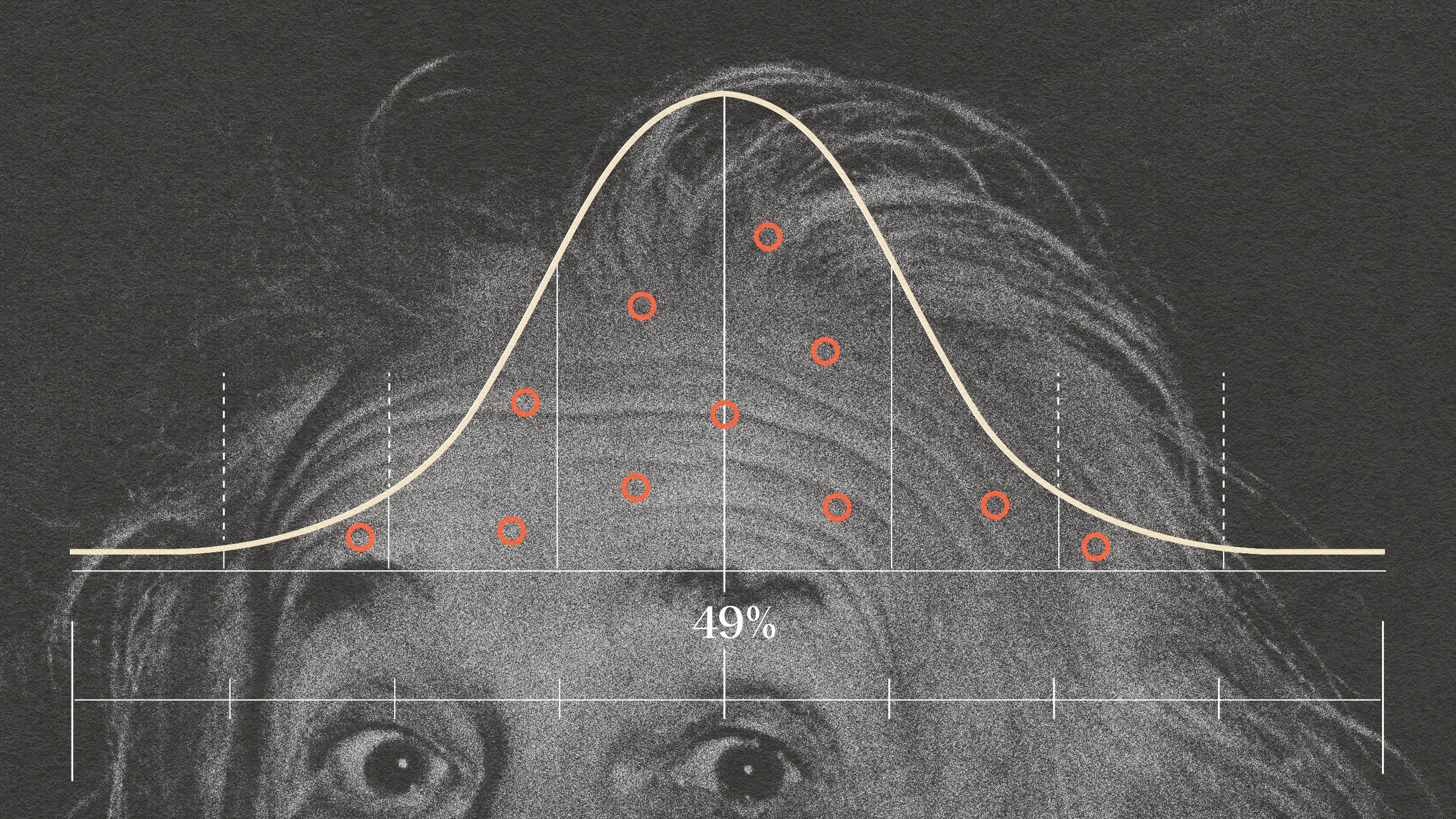

In Chabris’ most famous experiment, he and Dan Simons asked their volunteers to watch a basketball game and keep track of how many times the ball was passed. During the game, someone in a gorilla suit walked across the court. About half the observers, concentrating on the ball-passing, didn’t notice the gorilla.

Half of all participants, 50 percent, is difficult to quarrel with. A lot of experiments, though, don’t produce nearly that level of contrast between two groups of people. Similarly, when looking for associations between one phenomenon and another, researchers may be satisfied with a relatively small effect. For example, the correlation between violent videogames and aggressive or anti-social behavior in many studies is between r=.1 and r=.3, on a scale where 1.0 is the strongest possible correlation and 0 is no relationship at all. That’s not a big effect.
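One common way to get a feel for how small such a correlation is: square it. The square of r is the share of the variation in one measure that is linearly associated with the other. The sketch below is only an illustration of that arithmetic, using the r values quoted above; the function name `variance_explained` is made up for this example and does not come from any of the studies.

```python
def variance_explained(r: float) -> float:
    """Return r squared: the share of variance in one variable that is
    linearly associated with the other, for a correlation coefficient r."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie between -1 and 1")
    return r * r


# The two ends of the range quoted above, plus a perfect correlation for contrast.
for r in (0.1, 0.3, 1.0):
    print(f"r = {r:.1f} -> about {variance_explained(r):.0%} of the variance")
```

By this yardstick, r=.1 corresponds to roughly 1 percent of the variation and r=.3 to roughly 9 percent, which leaves most of the variation unaccounted for.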

2. How likely is this effect to be swamped by other effects?

To say that some mental process is real, researchers need to isolate it. But the act of isolating it may suggest an importance that it does not have in real life. For example, having new blue walls at work may not prompt more creative thinking if you have the same old psychotic boss and the same old noxious fumes from the chemical plant next door. The impact of those stressors will overwhelm the subtler color effect.

This is probably the caution that’s most underplayed by both scientists and people like me who are trying to get your attention with our accounts of the research. After all, to capture your eyeballs, we have to say this latest paper is important. That militates against pointing out that a lab effect can be quite real without making much difference in the real world.

Social psychologists have defended small correlations and subtle effects on the grounds that they’re in the same range as findings on health risks from pollutants and tobacco smoke. But epidemiological studies were done on large populations, and were frequently replicated, before they were accepted. Many psychology studies are performed on about 100 undergraduates. (The smaller the number of people in an experiment, the greater the chance that the measured differences among them could be accidental.) Or the experiment is performed on 1,000 undergraduates but with only 20 different stimuli, which, as Neuroskeptic pointed out this week, can also make noise look significant. Then, the study is never done again. Which brings us to item #3:

3. How often has the work been replicated?

It’s not easy to publish a study announcing that an experiment didn’t work out, and many researchers prefer to make discoveries, not point out others’ errors. Though the scientific method presumes that experiments will be replicated, many in social science never are. The problem is acute enough that, as Tom Bartlett reported a few weeks ago, a group of psychologists recently launched a project to attempt to reproduce all the results published in several major journals for a year—in part to see just how much of the stuff does replicate.

4. How often has the work been corroborated in other ways?

Labs permit researchers to control situations precisely, but the cost is a certain distance from real circumstances. On the other hand, working on a street or in an office or a store gives psychologists a chance to test theories in real conditions—but those conditions can’t be manipulated to eliminate potential confusions. If a psychological phenomenon is real, though, it ought to be visible in both the controlled lab experiment and in life as it is lived. Hence it should be possible to test a proposed cause-and-effect in psychology by comparing lab and field experiments. If both lab and field get the same effect, that’s more support for the reality of the phenomenon.

In this analysis, published earlier this year in the journal Perspectives on Psychological Science, the University of Virginia law professor Gregory Mitchell looked at 217 such lab-to-field comparisons across a number of branches of psychology. It’s definitely a buzz-kill for journalism about social-psychological theories. Of the subdisciplines he examined (social, industrial/organizational, clinical, marketing, developmental), social psychology had the least agreement between lab and field. Social psychology also evinced the most contradiction: instances where r in the lab was positive and in the field was negative, or vice versa. If lab and field don’t agree, or one wing is missing, you should hesitate about any grand or general conclusions.

5. What are we being asked to do?

A surprising result of an experiment done on 54 people can be fascinating. But it’s a very slender foundation for major social change. When you see such a mismatch (small-scale experiment, small effect, big sweeping conclusions about how society should respond), then beware. These are the grounds on which I’m skeptical of Lehrer’s claim that being surrounded by blue makes us more creative. Repainting a lot of walls will cost time and money. A few experiments with color screens are not enough justification for the implied call to action.
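To see why a small sample is a slender foundation, it can help to look at how wide the uncertainty around a small correlation is. The sketch below uses the standard Fisher z-transformation to approximate a 95 percent confidence interval. Everything specific in it is an assumption for illustration: the observed r of .2 is hypothetical (it is not the result of any study mentioned here), the function name is invented, and the approximation assumes roughly normally distributed measurements.

```python
import math


def correlation_interval(r: float, n: int, z_crit: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a correlation r observed in
    n people, via the Fisher z-transformation (an approximation, not exact)."""
    if n <= 3:
        raise ValueError("need more than 3 observations")
    z = math.atanh(r)                 # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)       # standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


# Hypothetical observed correlation of 0.2 at three sample sizes.
for n in (54, 100, 10_000):
    lo, hi = correlation_interval(0.2, n)
    print(f"n = {n:>6}: plausible range for r is roughly {lo:+.2f} to {hi:+.2f}")
```

Under these assumptions, the range for 54 people stretches below zero, meaning the data would be compatible with no relationship at all, while the range for 10,000 people is narrow.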

6. Did you keep in mind that no study is an island?

Finally, I try to keep in mind that social science, like any human endeavor, is a cumulative enterprise. We know some things about behavior, with reasonable confidence, because a great many people did their work over a long span of time. Similarly, we have great works of literature because many, many hands took their turn at producing poems, plays, novels, songs and so on. Most work falls between the extremes of perfection and nonsense, and that’s exactly what you’d expect and want. Masterpieces (a term that originally described the final work of an apprentice before striking out on his own) are the result of many, many efforts that aren’t so great. If all work had to be perfect, there would be no work at all.

Put it another way: Theodore Sturgeon once told a convention of SF writers and fans that 90 percent of science fiction is crap. But, he added, no worries—90 percent of everything is crap. The justification for the less-than-perfect is that in doing it, we improve. It is part of the road to excellence.

So, skepticism is in order when you read reports of social-science research. But don’t be a dick about it: even a flawed study can be part of a wave that fetches up a real and lasting insight. Try to keep an open mind, and look at the details. And if you want to paint your walls blue, go ahead. Who ever said everything you do needs to be justified by a lab?