Can We Save the Human Race?

We aren’t doing enough. The human race is in more danger than it might seem.

The human race has always faced a certain amount of what Oxford philosopher Nick Bostrom calls “existential risk.” Existential risk, or “x-risk,” is the risk that something might completely wipe out the human race or destroy human civilization. It’s the risk of just about the worst thing most of us can imagine: not only the destruction of everything that we know and love but also the loss of the entire future potential of the human race.

But as Bostrom says, and as I explain in greater detail elsewhere, until recently we faced a fairly low level of existential risk. Prehistoric humans may well have struggled to compete with other hominids and survive. In fact, genetic evidence suggests that we came close to extinction at some point in our history. Even after we established ourselves around the planet, there was always some danger that a supervolcano or a massive comet could do enough damage to our environment to wipe us out. But from what we know, the risk of that kind of exogenous catastrophe is fairly low.

Unfortunately, the development of modern technology and the spread of human civilization have introduced anthropogenic existential risks. In other words, for the first time in recorded history there’s a real chance that human beings could trigger, whether accidentally or by design, a catastrophe large enough to destroy the human race. In the last century, for example, the development of nuclear weapons raised the possibility that we could destroy the human race in a single military conflict, a risk that subsided but did not disappear with the end of the Cold War. There is likewise the possibility that our emissions of greenhouse gases could trigger runaway global warming too rapid for us to stop.

New technology means new, less obvious catastrophic risks. Bostrom suggests the greatest risk may actually be from the release of destructive, self-replicating nanomachines, a technology that is beyond us now but may not be that far out of reach. A similar danger is the release of a destructive genetically engineered biological agent, a scenario that becomes more plausible with the spread of technology that makes it possible for ordinary people to create designer organisms. Another danger, which sounds like science fiction but is worth taking seriously, could come from the creation of an artificial intelligence we cannot control. Some even argue that there is a chance that a high-energy physics experiment could trigger a catastrophe. Based on our best theories, this kind of catastrophe seems extremely unlikely. But of course the reason we conduct these experiments in the first place is that our best theories are incomplete or even contradictory. We experiment precisely because we don’t really know what will happen.

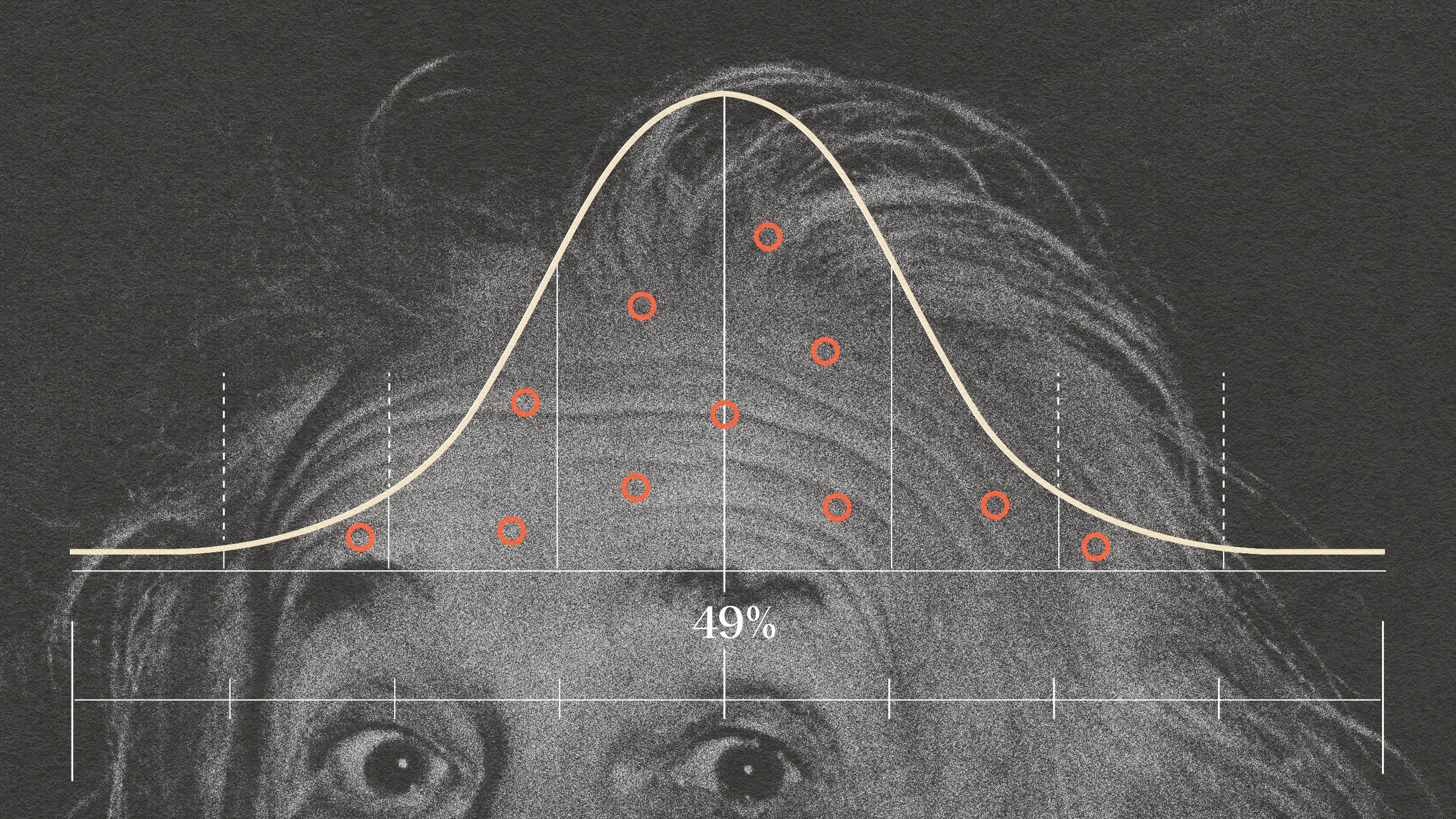

It’s difficult to evaluate these kinds of risks. British Astronomer Royal Martin Rees pessimistically estimated that we have just a 50% chance of surviving this century. Other risk experts are more optimistic, but even a minuscule chance of this kind of catastrophe should be enough to spur us to take precautions. That’s why a handful of academic institutes and non-profit organizations like the Global Catastrophic Risk Institute and Cambridge’s Centre for the Study of Existential Risk have begun to focus attention on the existential risks to humanity.

It’s hard to imagine that we can really stop the spread of potentially dangerous technologies, or, given their potential to save and improve lives, that we would necessarily even want to. But it seems clear that we can and should do more to mitigate existential risks. At the very least, we need to develop meaningful international regimes to reduce and counteract the damage we’re doing to our environment, create protocols for responsible scientific research, and formulate contingency plans to respond to potential dangers. The future of the human race is quite literally at stake.

You can read more about existential risk on my new blog Anthropocene. You can also follow me on Twitter: @rdeneufville

Image of the Earth seen from Apollo 8 courtesy of Bill Anders/NASA