The mystery of the missing experiments

Can experimental findings look too good to be true?

Last week I wrote a blog post about some experiments showing a counterintuitive finding regarding how the need to urinate affects decision making. It’s since been brought to my attention that these experiments (along with dozens of others) have been called in to question by one researcher. You can watch Gregory Francis’s stunning lecture here (scroll half way down the page) where you can also find the Powerpoint slides (The video and powerpoint are on a very slow server, so you may wish to leave the video to buffer or wait until the heavy traffic that might follow this post passes). You can find Francis’ papers on the topic in Perspectives in Psychological Science (OA) and in Psychonomic Bulletin & Review ($), for a brief summary see Neuroskeptic’s coverage here.

When messy data provides only clean findings, alarm bells should go off

Francis makes the argument that when sample size, effect size and power are low, datasets that are replicated repeatedly without occasional, or even relatively frequent null findings should be treated as suspect. This is because in experiments where it is reported in the data that certainty is low, it should be expected that we’ll see null effects with frequency due to the rules of probability. If Francis is correct we should be skeptical of studies such as the one I described last week, precisely because there are no null findings, when based on the statistics of the study, we might expect there should be.

Gazing into a crystal statistical ball

Francis uses Bem’s infamous research on precognition (or seeing in to the future to you and me) as an example of how to spot dodgy research practices through analyzing statistics. It’s noteworthy that the leading journal that accepted Bem’s study in the first place, along with a number of other leading journals all refused to publish a failed replication of the same experiment. According to Bem, nine out of ten of his experiments were successful, but Francis points out that based on the effect sizes and sample sizes of Bem’s research, the stats suggest data is missing or questionable research practices were used. According to Francis, by adding up the power values in Bem’s 10 experiments, we can tell that Bem should only have been able to replicate his experiment approximately six times out of ten. According to Francis, the probability that Ben could have got the result he did is 0.058, “that’s the probability, given the characteristics of the experiments that Bem reported himself, that you would get this kind of unusual pattern”.

What does dodgy data look like?

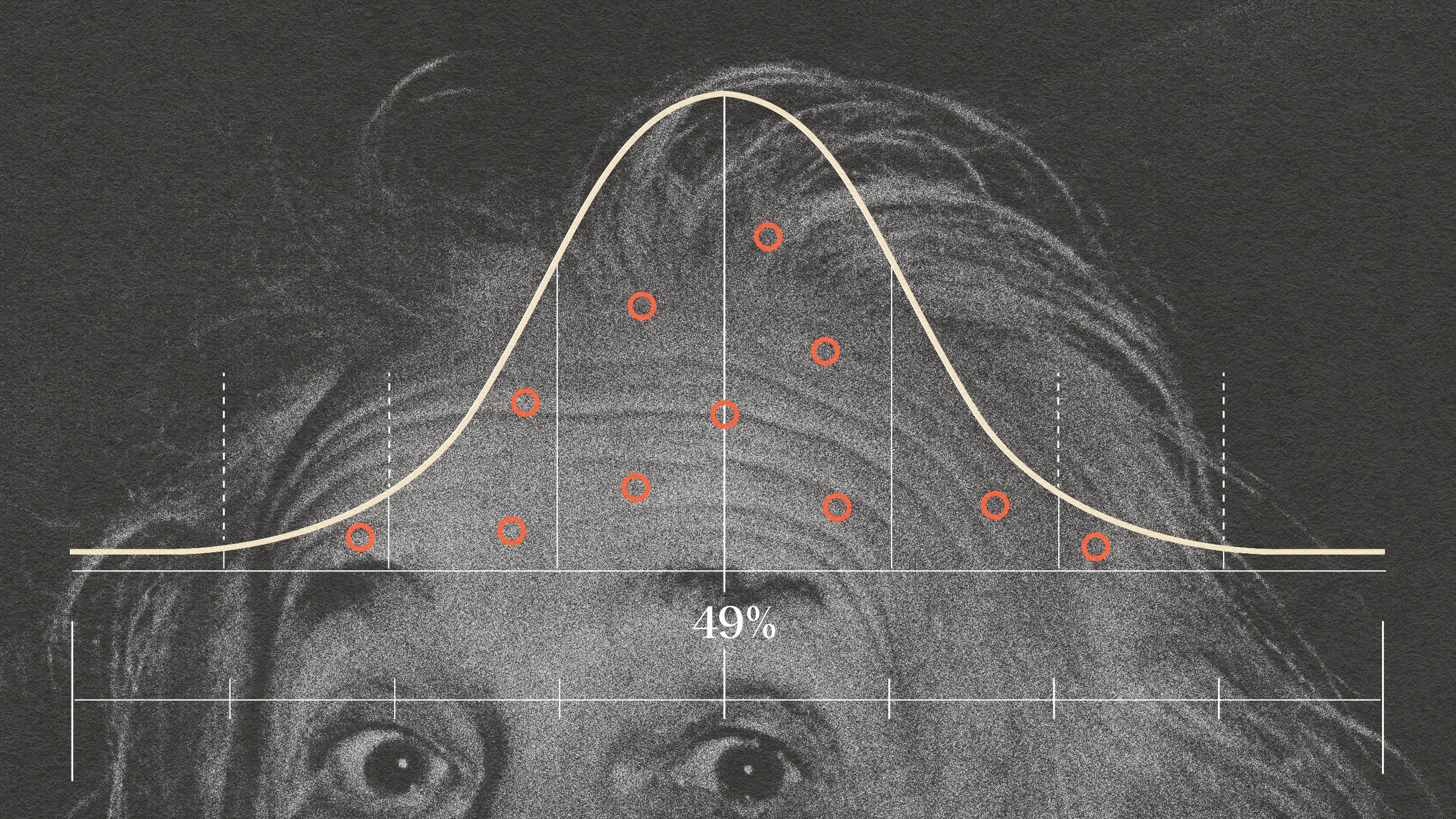

Francis ran thirty simulated experiments with a random sample of thirty points on a normal distribution as a control group and a random sample of thirty data points from a normal distribution with a mean of g and a standard deviation of one as an experimental group. Unlike experiments suffering from bias, we get to see all results (in grey). The dark grey dots are from only the results of experiments that happened upon a positive result and rejected the null hypothesis – simulating the file drawer effect. The dark grey dots on the graph on the right show what the data looks like if data peeking is also used to stop the experiment precisely when a positive effect is found, within the limits of the thirty trials used for the control experiment.

Where does this bias come from?

Francis highlights three areas, most obviously publication bias, but also optional stopping – ending an experiment just after finding a significant result and HARKing– hypothesizing after the results are known. In the words of Francis:

“scientists are not breaking the rules, the rules are the problem” – Gregory Francis

But the story does not end there, all of us suffer from systematic biases and scientists are human too. For a quick introduction to human biases check out Sam McNerney’s recent piece in Scientific American and Michael Shermer’s TED talk: The pattern behind self-deception. For further discussion on the topic of bias in research see my recent posts The Neuroscience Power Crisis: What’s the fallout? and The statistical significance scandal: The standard error of science? There is only one place where the problem of the file drawer effect is more serious than Psychology and this in the area of clinical trials, where I’ll refer you to Dr. Ben Goldacre’s All Trials campaign which you should be sure to sign if missing experiments are something that worries you, and based on the evidence, they should.

What can we do about it?

Francis’ number one conclusion is that we must “recognize the uncertainty in experimental findings. Most strong conclusions depend on meta-analysis”. As my blog post last week demonstrates perhaps only too well, it is easy to be seduced by the findings of small experiments. Small samples with low effect sizes are by definition, uncertain. Replication alone it seems, is not enough. What is needed are either meta-analyses which depend on the file-drawer effect not coming in to play, or larger replications than the original experiment. If the finding of an experiment is particularly uncertain in the first place, which by their very nature a great number of experimental findings are, then we should expect replications of the same size to fail every so often, if they don’t – then we have good reason to believe something is up.

To keep up to date with this blog you can follow Neurobonkers on Twitter, Facebook, Google+, RSS or join the mailing list.

References

Francis G. (2012). The Psychology of Replication and Replication in Psychology, Perspectives on Psychological Science, 7 (6) 585-594. DOI: 10.1177/1745691612459520

Francis G. (2012). Publication bias and the failure of replication in experimental psychology, Psychonomic Bulletin & Review, 19 (6) 975-991. DOI: 10.3758/s13423-012-0322-y

Image Credit: Shutterstock/James Steidl