The race to predict our behavior: Tristan Harris testifies before Congress

- Former Google design ethicist Tristan Harris recently spoke in front of Congress about the dangers of Big Tech.

- Harris told senators that behavior prediction models are a troublesome part of design in the race for attention.

- He warned that without ethical considerations, the dangers to our privacy will only get worse.

In a strange cultural turn of events, ethics are at the forefront of numerous conversations. For decades they were generally overlooked (outside of family and social circles) or treated like Victorian manners (i.e., “boys will be boys”). To focus too diligently on ethics likely meant you were religious or professorial, both of which were frowned upon in a “freedom-loving” country like America. To garner national attention, an ethical breach had to be severe.

Then the potent combination of the current administration and privacy concerns about technology companies (the two being connected) emerged, causing a large portion of the population to ask: Is this who I really want to be? Is this how I want to be treated? For many, the answers were no and no.

While ethical questions surface in our tweets and posts, it is rarer to look at the engines driving those narratives. It’s not like these platforms are benign sounding boards. For the socially awkward, social media offers cover. We can type out our demons, often without repercussions, unconcerned about whose eyes and hearts read our vitriolic rants; the endless trolling takes its toll. It’s getting tiresome, these neuroses and insecurities playing out on screens. Life 1.0 is not a nostalgia to return to but the reality we need to revive, at least more often than not.

This is why former Google design ethicist Tristan Harris left the tech behemoth to form the Center for Humane Technology. He knows the algorithms are toxic by intention, and therefore by design. So when South Dakota Senator John Thune recently led a hearing on technology companies’ “use of algorithms and how it affects what consumers see online,” he invited Harris to testify. Considering how lackluster previous congressional hearings on technology have been, the government has plenty of catching up to do.

The ethicist did not hold back. Harris opened by informing the committee that algorithms are purposefully created to keep people hooked; it’s an inherent part of the business model.

“It’s sad to me because it’s happening not by accident but by design, because the business model is to keep people engaged, which in other words, this hearing is about persuasive technology and persuasion is about an invisible asymmetry of power.”

Tristan Harris – US Senate June 25, 2019

Harris tells the panel what he learned as a child magician, a topic he explored in a 2016 Medium article. A magician’s power requires their audience to buy in; otherwise, tricks would be quickly spotted. Illusions only work when you’re not paying attention. Tech platforms utilize a similar asymmetry, such as by hiring PR firms to spin stories of global connection and trust to cover their actual tracks.

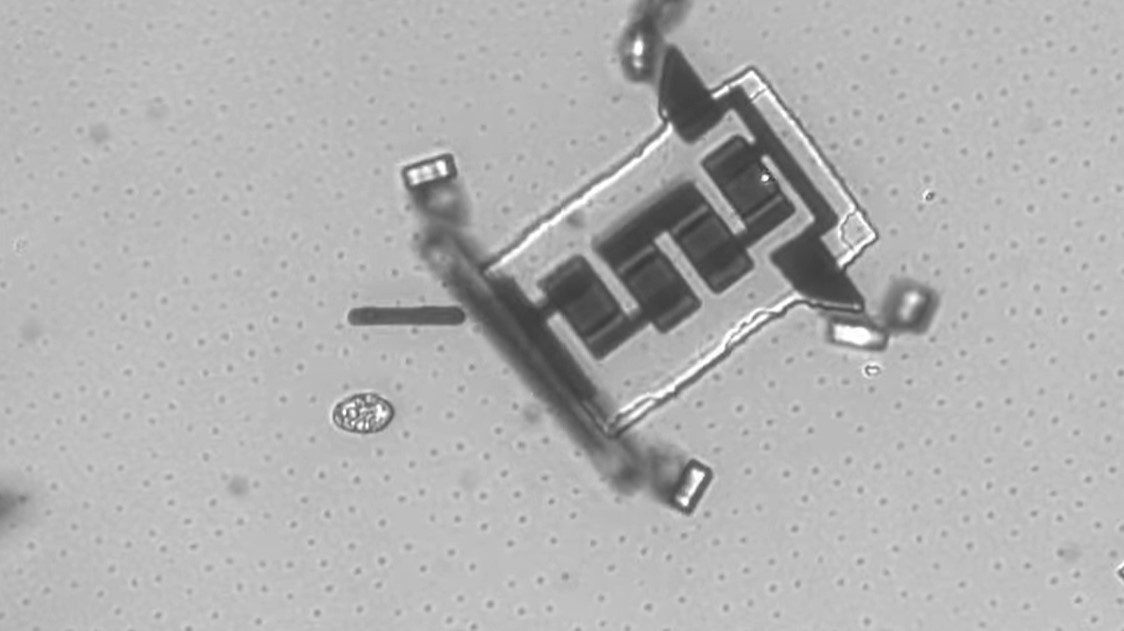

As each track leads to profit maximization, companies must become more aggressive in the race for attention. First it was likes and dislikes, making consumers active participants, causing them to feel as if they have personal agency within the platform. They do, to an extent, yet as the algorithms churn along, they learn user behavior, creating “two billion Truman Shows.” This process has led to Harris’s real concern: artificial intelligence.

AI, Harris continues, has been shown to predict our behavior better than we can. It can guess our political affiliation with 80 percent accuracy, figure out you’re homosexual before even you know it, and start suggesting strollers in advance of the pregnancy test turning pink.

Prediction is an integral part of our biology. As the neuroscientist Rodolfo Llinás writes, eukaryotes navigate their environment through prediction: this way leads to nutrition (go!), that looks like a predator (swim away!). Humans, like all biological life, predict our way through our environment. The problem is that since the Industrial Revolution, we’ve created relatively safe environments to inhabit. Because we no longer have to pay attention to rustling bushes or stalking predators, we offload memory to calendars and GPS devices; we offload agency to the computers in our hands. Our spider senses have diminished.

Even during the technological revolution, we remain Paleolithic animals. Our tribalism is obvious. Harris says that in the race for attention, companies exploit our need for social validation, using neuroscientist Paul MacLean’s triune brain model to explain the climb down the brainstem to our basest impulses. Combine this with a depleted awareness of our surroundings, and influencing our behavior becomes simple. When attention is focused purely on survival (in this age, surviving social media), a form of mass narcissism emerges: everything is about the self. The world shrinks as the ego expands.

We know well the algorithmic issues with YouTube: 70 percent of time spent watching videos is thanks to recommendations. The journalist Eric Schlosser has written about the evolution of pornography: partial nudity became softcore became hardcore became anything imaginable because we kept acclimating to what was once scandalous and wanted more. The YouTube spiral creates a dopamine-fueled journey into right- and left-wing politics. Once validated, you’ll do anything to keep that feeling alive. Since your environment is the world inside of your head influenced by a screen, hypnotism is child’s play to an AI with no sense of altruism or compassion.

That feeling of outrage sparked by validation is gold to technology companies. As Harris says, the race to predict your behavior occurs click by click.

“Facebook has something called loyalty prediction, where they can actually predict to an advertiser when you’re about to become disloyal to a brand. So if you’re a mother and you take Pampers diapers, they can tell Pampers, ‘Hey, this user is about to become disloyal to this brand.’ So in other words, they can predict things about us that we don’t know about our own selves.”

Harris isn’t the only one concerned about this race. Technology writer Arthur Holland Michel recently discussed an equally (if not more) disturbing trend at Amazon that perfectly encapsulates the junction between prediction and privacy.

“Amazon has a patent for a system to analyze the video footage of private properties collected by its delivery drones and then feed that analysis into its product recommendation algorithm. You order an iPad case, a drone comes to your home and delivers it. While delivering this package the drone’s computer vision system picks up that the trees in your backyard look unhealthy, which is fed into the system, and then you get a recommendation for tree fertilizer.”

Harris likens this practice by tech companies to a priest selling access to confessional booths, only in this case these platforms have billions of confessions to sell. Once they learn what you’re confessing, it’s easy to predict what you’ll confess to next. With that information, they can sell to you before you have any idea you are in the market.

Harris notes that Fred Rogers sat in front of the very same congressional committee fifty years prior to warn of the dangers of “animated bombardment.” He thinks the world’s friendliest neighbor would be horrified by the evolution of his prediction. Algorithms are influencing our politics, race relations, and environmental policies; we can’t even celebrate a World Cup victory without politicization (and I’m not referencing equal pay, which is a great use of such a platform).

The nature of identity has long been a philosophical question, but one thing is certain: no one is immune to influence. Ethics were not baked into the system we now rely on. If we don’t consciously add them in — and this will have to be where government steps in, as the idea of companies self-regulating is a joke — any level of asymmetric power is possible. We’ll stay amazed at the twittering bird flying from the hat, ignorant of the grinning man who pulled it from thin air.
