Why A.I. robotics should have the same ethical protections as animals

KAZUHIRO NOGI/AFP/Getty Images

Universities across the world are conducting major research on artificial intelligence (A.I.), as are organisations such as the Allen Institute, and tech companies including Google and Facebook.

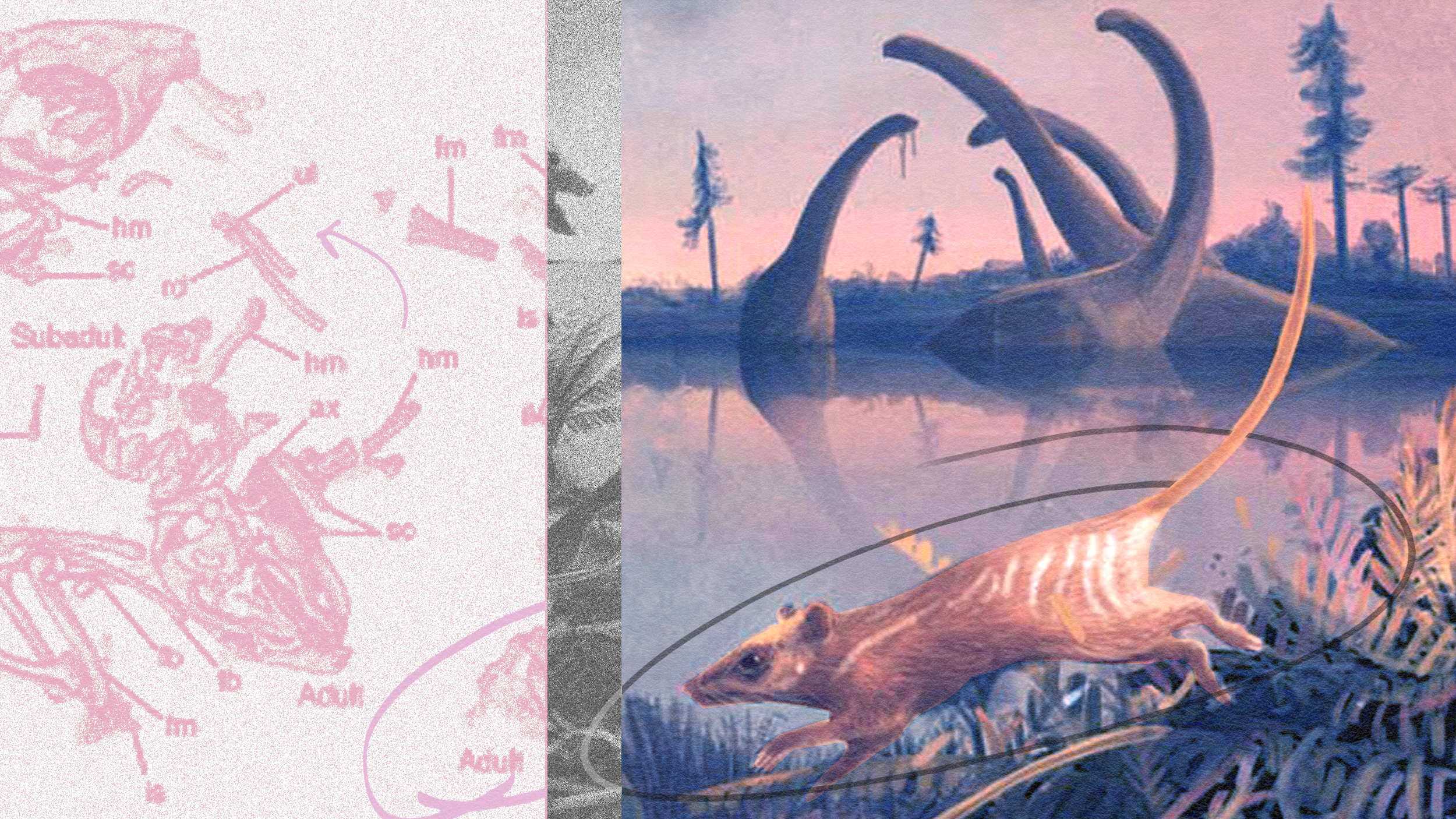

A likely result is that we will soon have A.I. approximately as cognitively sophisticated as mice or dogs. Now is the time to start thinking about whether, and under what conditions, these A.I.s might deserve the ethical protections we typically give to animals.

Discussions of “A.I. rights” or “robot rights” have so far been dominated by questions of what ethical obligations we would have to an A.I. of humanlike or superior intelligence – such as the android Data from Star Trek or Dolores from Westworld. But to think this way is to start in the wrong place, and it could have grave moral consequences. Before we create an A.I. with humanlike sophistication deserving humanlike ethical consideration, we will very likely create an A.I. with less-than-human sophistication, deserving some less-than-human ethical consideration.

We are already very cautious in how we do research that uses certain nonhuman animals. Animal care and use committees evaluate research proposals to ensure that vertebrate animals are not needlessly killed or made to suffer unduly. If human stem cells or, especially, human brain cells are involved, the standards of oversight are even more rigorous. Biomedical research is carefully scrutinised, but A.I. research, which might entail some of the same ethical risks, is not currently scrutinised at all. Perhaps it should be.

You might think that A.I.s don’t deserve that sort of ethical protection unless they are conscious – that is, unless they have a genuine stream of experience, with real joy and suffering. We agree. But now we face a tricky philosophical question: how will we know when we have created something capable of joy and suffering? If the A.I. is like Data or Dolores, it can complain and defend itself, initiating a discussion of its rights. But if the A.I. is inarticulate, like a mouse or a dog, or if it is for some other reason unable to communicate its inner life to us, it might have no way to report that it is suffering.

A puzzle arises here because the scientific study of consciousness has not reached a consensus about what consciousness is or how we can tell whether it is present. On some views – ‘liberal’ views – consciousness requires nothing but a certain type of well-organised information-processing, such as a flexible informational model of the system in relation to objects in its environment, with guided attentional capacities and long-term action-planning. We might be on the verge of creating such systems already. On other views – ‘conservative’ views – consciousness might require very specific biological features, such as a brain very much like a mammalian brain in its low-level structural details, in which case we are nowhere near creating artificial consciousness.

It is unclear which type of view is correct, or whether some other explanation will in the end prevail. However, if a liberal view is correct, we might soon be creating many subhuman A.I.s that will deserve ethical protection. There lies the moral risk.

Discussions of “A.I. risk,” […] in needless vivisections, the Nazi medical war crimes, and the Tuskegee syphilis study. With A.I., we have a chance to do better. We propose the founding of oversight committees that evaluate cutting-edge A.I. research with these questions in mind. Such committees, much like animal care committees and stem-cell oversight committees, should be composed of a mix of scientists and non-scientists – A.I. designers, consciousness scientists, ethicists and interested community members. Armed with a sophisticated understanding of the scientific and ethical issues, these committees will be tasked with identifying and evaluating the ethical risks of new forms of A.I. design and weighing those risks against the benefits of the research.

It is likely that such committees will judge all current A.I. research permissible. On most mainstream theories of consciousness, we are not yet creating A.I. with conscious experiences meriting ethical consideration. But we might – possibly soon – cross that crucial ethical line. We should be prepared for this.

John Basl & Eric Schwitzgebel

This article was originally published at Aeon and has been republished under Creative Commons.