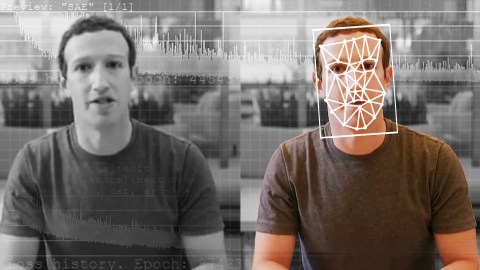

How the technology behind deepfakes can benefit all of society

Elyse Samuels/The Washington Post via Getty Images

Recent advances in deepfake video technology have led to a rapid increase in the number of such videos circulating publicly over the past year.

Face-swapping apps such as Zao, for example, allow users to swap their faces with a celebrity's, creating a deepfake video in seconds.

These advances are the result of deep generative modelling, a new technology that allows us to generate duplicates of real faces and create new, impressively true-to-life images of people who do not exist.

This new technology has quite rightly raised concerns about privacy and identity. If our faces can be created by an algorithm, would it be possible to replicate even more details of our personal digital identity or attributes like our voice – or even create a true body double?

Indeed, the technology has advanced rapidly from duplicating just faces to duplicating entire bodies. Technology companies are concerned and are taking action: Google released 3,000 deepfake videos in the hope of helping researchers develop methods to identify and combat malicious content more easily.

While questions are rightly being asked about the consequences of deepfake technology, it is important that we do not lose sight of the fact that artificial intelligence (AI) can be used for good as well as ill. World leaders are concerned with how to develop and apply technologies that genuinely benefit people and the planet, and how to engage the whole of society in their development. Creating algorithms in isolation does not allow broader societal concerns to be incorporated into their practical applications.

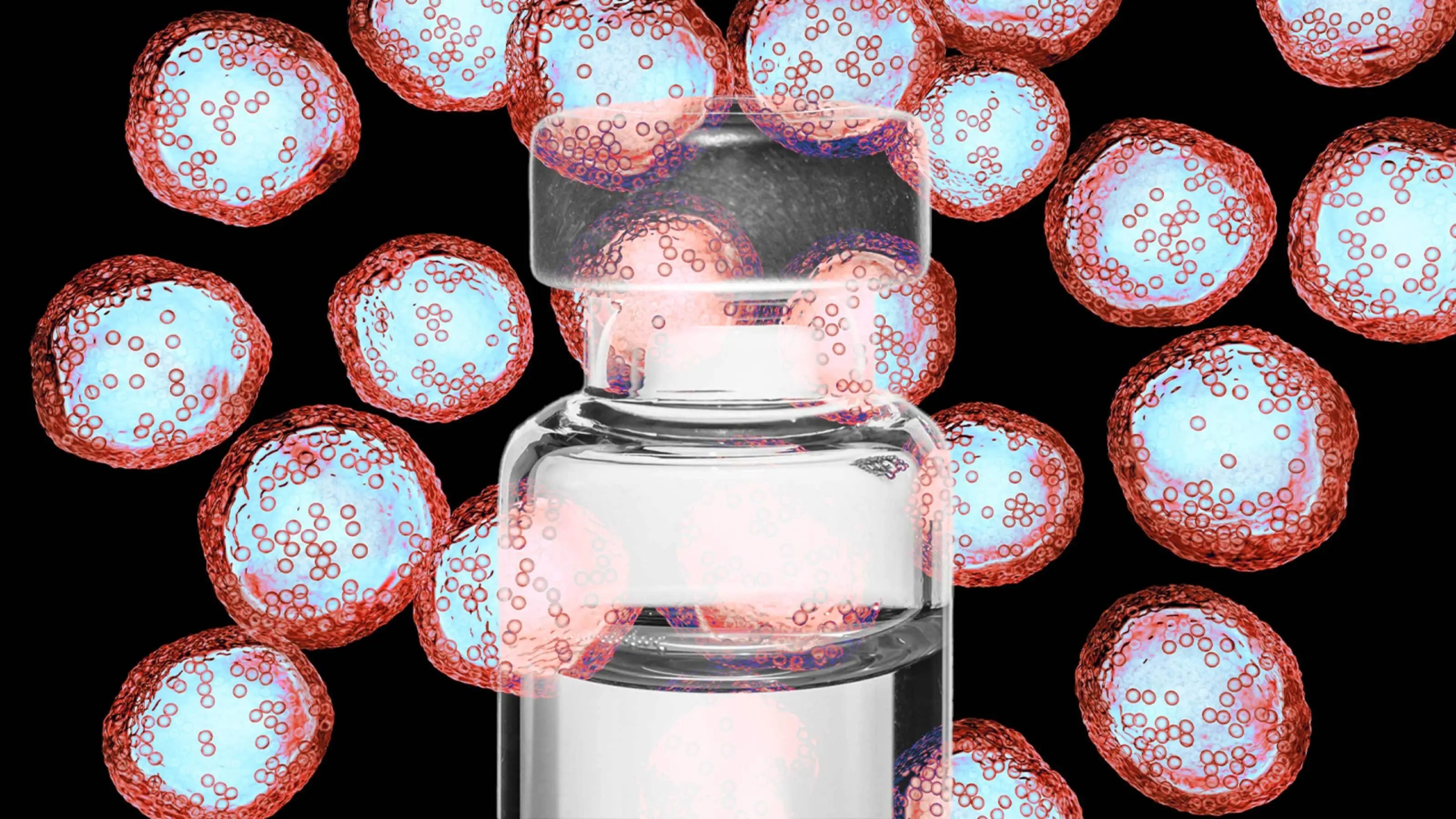

For example, the development of deep generative models raises new possibilities in healthcare, where we are rightly concerned about protecting the privacy of patients in treatment and ongoing research. With large amounts of real, digital patient data, a single hospital with adequate computational power could create an entirely imaginary population of virtual patients, removing the need to share the data of real patients.

We would also like to see advances in AI lead to new and more efficient ways of diagnosing and treating illness in individuals and populations. The technology could enable researchers to generate true-to-life data with which to develop and test new ways of diagnosing or monitoring disease, without risking breaches of real patients' privacy.

These examples in healthcare highlight that AI is an enabling technology that is neither intrinsically good nor evil. Whether technology like this does good or harm depends on the context in which we create and use it.

Universities have a critical role to play here. In the UK, universities are leading the world in research and innovation and are focused on making an impact on real-world challenges. At UCL, we recently launched a dedicated Centre for Artificial Intelligence that will be at the forefront of global research into AI. Our academics are working with a broad range of experts and organisations to create new algorithms to support science, innovation and society.

AI must complement and augment human endeavour, not replace it. We need to combine checks and balances that inhibit or prevent inappropriate use of technology with the right infrastructure and connections between different experts, so that we develop technology that helps society thrive.

Reprinted with permission of the World Economic Forum.