Thought AIs could never replace human imagination? Think again

Back in the 1980s, the AI scientist Hans Moravec described a paradox: what is easy for humans, such as visual or auditory understanding, is hard for machines, and vice versa.

Things have changed since then. Today’s AIs are much better at understanding what they see. It is not that AI has caught up with all of our human visual capabilities, but rather that the technology is developing in different ways.

When we think of AI, we think of pure automation. That is no longer the whole story. Did you think, for example, that AI could never replace portrait photographers? Think again; it doesn’t even need someone to pose. Today we have AIs that can ‘imagine’, that is, according to the Merriam-Webster definition of imagination, form an image of “something not present to the senses or never before wholly perceived in reality”.

The video above shows the results from an AI that has learned to generate photos of people who don’t exist. In terms of quality, only a handful of human artists could invent faces with such photographic precision.

The secret behind AI imagination

The mechanism that gives AI its imaginative powers has a name: Generative Adversarial Networks (GANs). GANs were partly inspired by neuroscience research. In essence, a GAN consists of two neural networks that compete against, and learn from, each other: one, the generator, learns to produce fakes, while the other, the discriminator, learns to detect them. As the fake detector becomes more effective, the generator is forced to produce more convincing fakes. Neuroscientists have found evidence that our brains use a related mechanism, the actor-critic model, which is believed to be located roughly in the middle of the brain.
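To make the generator-versus-detector loop concrete, here is a deliberately tiny sketch in Python. It is not how face-generating GANs are built (those use deep convolutional networks); it assumes one-dimensional “data” drawn from a normal distribution with mean 2, a generator that can only shift random noise by a learned offset `b`, and a discriminator that is plain logistic regression with weight `w` and bias `c`. The function and parameter names are hypothetical, chosen for illustration, and the generator uses the widely used “non-saturating” loss (maximise the detector’s belief that fakes are real).

```python
import math
import random


def sigmoid(u: float) -> float:
    """Numerically stable logistic function, mapping a score to a probability."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 2.0, steps: int = 3000, batch: int = 64,
                  lr: float = 0.05, seed: int = 0):
    """Hypothetical toy GAN in one dimension; returns (b, w, c)."""
    rng = random.Random(seed)
    b = 0.0          # generator: G(z) = z + b, so it can only learn an offset
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # 1) Fake detector: gradient descent on -log D(real) - log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w -= (1.0 - d) * x
            grad_c -= (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w += d * x
            grad_c += d
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # 2) Fake generator: gradient descent on -log D(G(z)), i.e. push the
        #    same fakes towards whatever the updated detector calls "real".
        grad_b = 0.0
        for x in fake:
            grad_b -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * grad_b / batch

    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"generator offset b = {b:.2f} (real mean is 2.0)")
```

Running `train_toy_gan()` should leave the offset `b` close to the real mean of 2: once the fakes sit where the real data sits, the best the detector can do is guess, and its weight `w` drifts back towards zero. Because this detector is linear, it can only notice a difference in location, which is why the generator is given nothing but an offset to learn.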

If imagination is no longer a privilege of the human mind, how can we leverage AIs’ imagination? Here is a taste of what’s happening in labs right now.

Turning night into day: let there be GANs

Imagination has a direct application: guessing how a subject would look in a different representation, or in other words, translating an image from one representation to another. For instance, this AI imagines what a sketch of a photograph would look like, or how a black and white photograph would look in colour.
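One common way to frame image-to-image translation as a GAN, used for example in the pix2pix model of Isola et al., is a conditional GAN: the discriminator $D$ sees the input image $x$ together with either the real target $y$ or the generator’s output $G(x, z)$ (with $z$ a noise input), and the generator is additionally pulled towards the real target by an L1 penalty. The article does not say which model produced the examples above, so treat this as an illustrative formulation rather than the method behind them:

$$
\mathcal{L}_{\mathrm{cGAN}}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]
$$

$$
G^{*} = \arg\min_{G}\,\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
$$

Here $\lambda$ is a weight that balances looking realistic (fooling $D$) against staying faithful to the target image.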

An application of image translation is to help us see the world in a more readable way, or beyond what is visible.

Image: Computer Vision Lab, Department of Information Technology & Electrical Engineering, ETH Zurich, Switzerland

This AI, meanwhile, generates a daytime version of a night-time picture. This is valuable because building self-driving cars that work, and can locate themselves precisely, in all conditions (day, night, fog, rain, snow and so on) requires a lot of data covering every scenario. Collecting large amounts of data in all conditions is very difficult in practice, as some conditions, such as snow, occur only rarely in certain areas. Instead of collecting more data, scientists have come up with this night-to-day workaround. It could also lead to better night vision for the military, airplane pilots and human drivers.

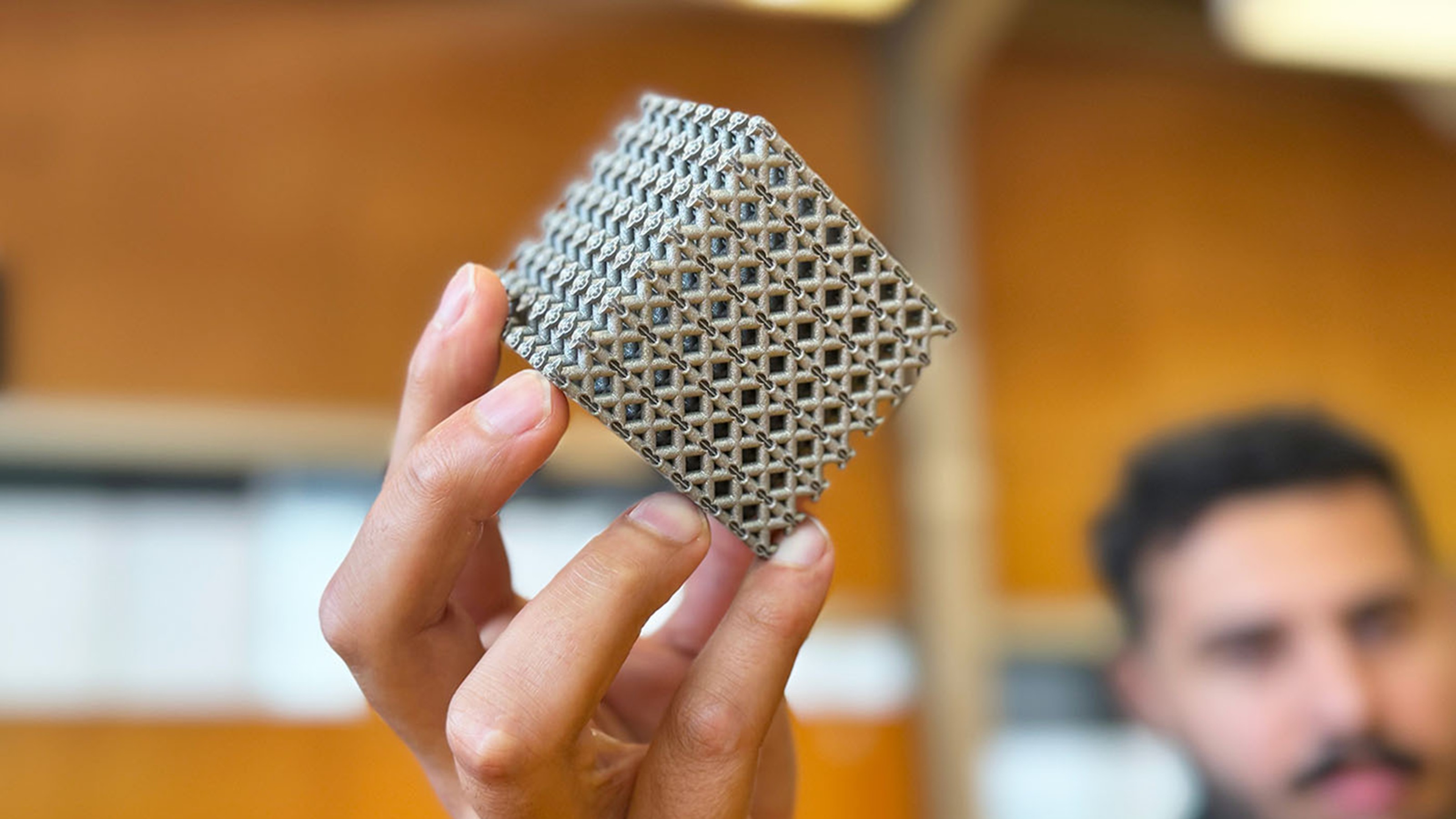

When something is not directly visible, AI models such as GANs can be used to make educated guesses. Take the case of the BodyNet AI (see above), which estimates a person’s body shape from a picture of them fully clothed. The resulting digital mannequin is useful for designing tailored clothing without taking measurements by hand or using sophisticated body scanners.

And what if we could see through walls? This AI can literally help you track how people move behind walls. It works much like the way bats see in the dark: a signal is emitted, and what comes back is interpreted. Bats emit ultrasound that is reflected off the surfaces of nearby objects; here, the emitted signals are in the WiFi frequency range. Because WiFi signals pass through walls but are partly reflected by the human body, the echoes can be interpreted by a deep learning architecture (see video below).
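The echo principle itself comes down to simple arithmetic: if a pulse travels to an object and back in a round-trip time Δt at speed v, the object is about d = vΔt/2 away. The sketch below assumes textbook speeds (about 343 m/s for sound in air at 20 °C, and the speed of light for WiFi-band radio) and a hypothetical helper `echo_distance`; it only illustrates the timing principle, whereas a through-wall system like the one described combines many reflections and leaves their interpretation to a deep network.

```python
# Speeds are textbook approximations (assumptions, not values from the article).
SPEED_OF_SOUND_AIR = 343.0        # m/s, dry air at about 20 °C
SPEED_OF_LIGHT = 299_792_458.0    # m/s, radio waves travel at light speed


def echo_distance(round_trip_s: float, speed_m_s: float) -> float:
    """Hypothetical helper: distance to a reflector from an echo's round-trip time.

    The pulse covers the distance twice (out and back), hence the division by 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    # A bat call returning after 10 ms: the obstacle is about 1.7 m away.
    print(f"{echo_distance(0.010, SPEED_OF_SOUND_AIR):.3f} m")
    # A radio echo returning after 20 ns: the reflector is about 3 m away.
    print(f"{echo_distance(20e-9, SPEED_OF_LIGHT):.3f} m")
```

The contrast in timescales (milliseconds for sound, nanoseconds for radio) is one reason radio-based sensing needs very precise timing.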

Discovery machines

The ability to make educated guesses is not limited to image generation or translations. Imagination is, in a broader sense, a tool for discovery, and has applications in diverse domains such as cybersecurity or drug design.

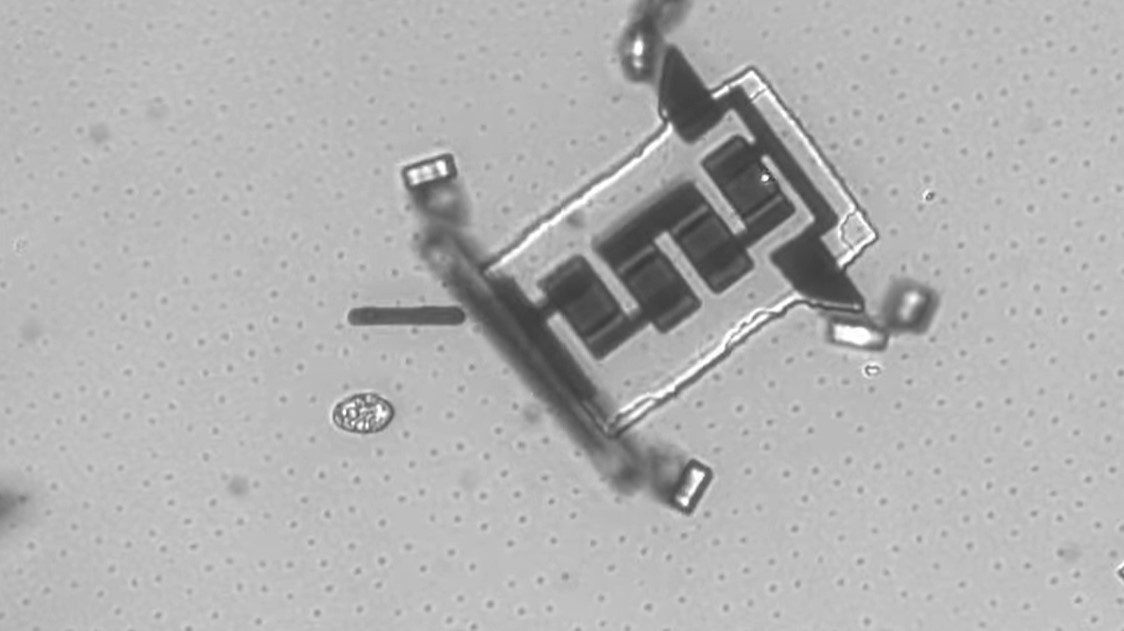

Modern cybersecurity tools feature AIs that can detect threats by looking at their characteristics. Researchers have designed a GAN that learns to generate pieces of malicious code that can bypass these detectors. That may sound scary, but the good news is that we could also use it to improve detectors of malicious code. And, if you think about it, deception can sometimes be a good thing, such as when we engineer drugs to fight diseases.

What does it mean for us?

The Fourth Industrial Revolution is not just about automation, but about human-machine collaboration and symbiosis. GANs are a turning point in the development of AI and will help us supercharge our mental capabilities.

GANs also give us a tool for studying the mechanisms of imagination and for better understanding its role in domains such as translation or discovery. Even though imagination is not the same as creativity, it is one of the tools we use to invent new things. What missing pieces, yet to be discovered, will enable us to build machines that can outsmart us in the creative realm as well?

Reprinted with permission of the World Economic Forum.