3 Tips for Avoiding Fake News in Science

There’s been a lot of talk lately about fake news, and many consider it a genuine threat to a well-functioning democracy. Some of it results from political mischief, or worse. It’s also happening in science reporting as researchers, writers, and publications attempt to gain attention with sensational headlines. Inconclusive evidence may be presented as fact, and sometimes it’s just bad science. Check out RetractionWatch, a site whose purpose is trying to keep up with scientific papers that have been recalled after publication — there were 700 retractions in 2015 alone.

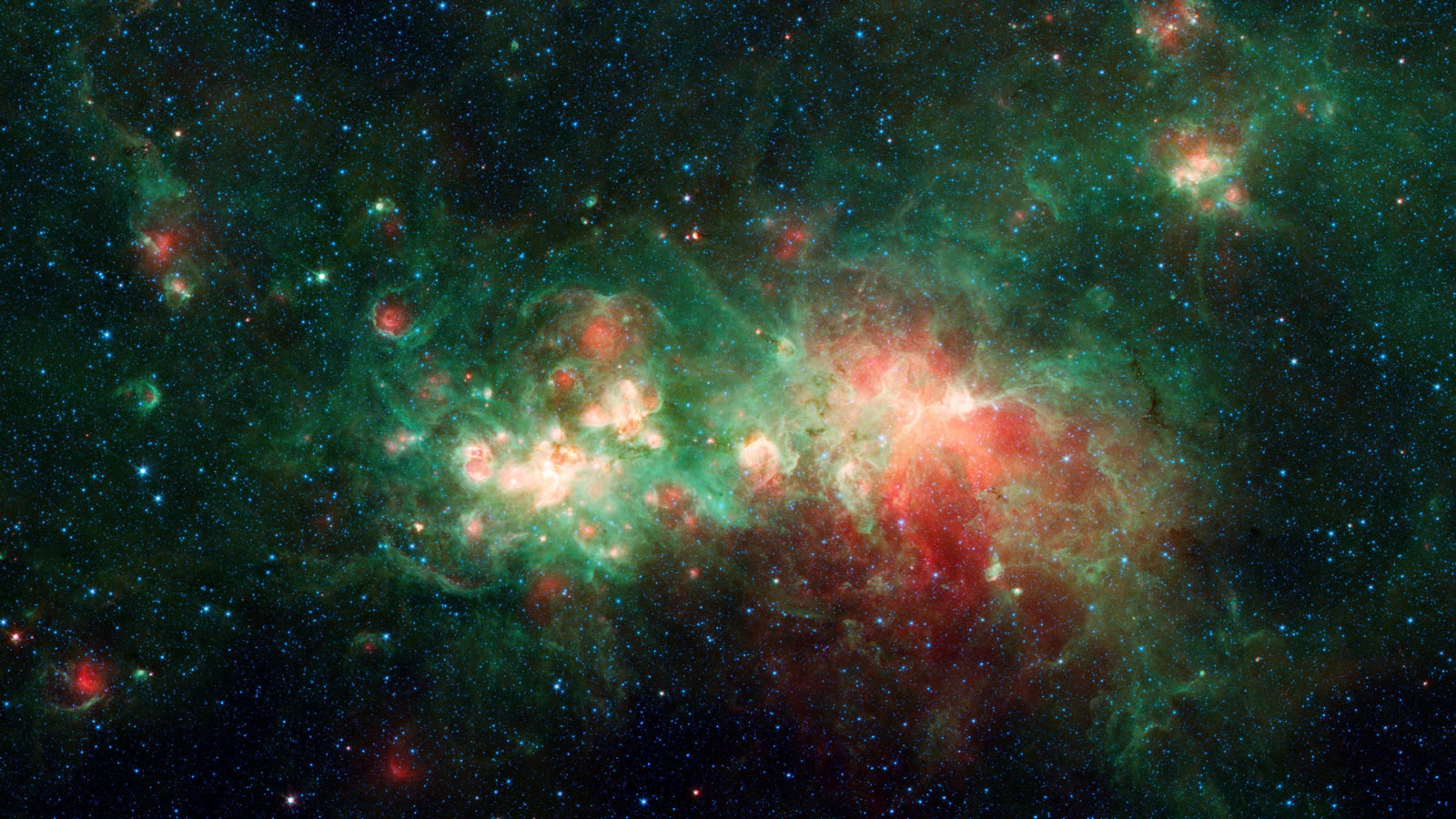

Astrophysicist Michael J. I. Brown is concerned with how difficult it’s become to separate real scientific breakthroughs from clickbait. Writing for Science Alert, he shares his three-step checklist for sorting the truth from the trash. Even with the checklist, it’s not always easy, even for a working scientist like him, to be confident of a study’s validity. But nobody likes to be fooled by fake or bad science.

Neatness Counts

This may seem superficial, but Brown asserts that it’s not. Given that carefully conducted research typically takes a long time, even years, a study that looks slapdash may well be. Typos and cruddy-looking graphics can be a clue that a lack of due diligence is at play.

Brown cites a recent, well-publicized paper by E.F. Borra and E. Trottier that claimed in its Comments section: “Signals probably from Extraterrestrial Intelligence.” Brown was curious, so he had a look. “An immediate red flag for me was some blurry graphs, and figures with captions that weren’t on the same page,” Brown writes. Looking further, he discovered that the authors’ conclusion was based on a liberal application of Fourier analysis, which Brown says is known to generate data artifacts that skew results. He also learned that Borra and Trottier chose to work with only a tiny subset of data. Brown feels that, together, these two factors render the study questionable at best.

He points out, though, that the neatness filter isn’t always a reliable test, since sometimes great science comes in a less-than-great presentation, as with the announcement of the discovery of the Higgs boson.

Obviousness

As Brown puts it, “‘That’s obvious, why didn’t someone think of that before?’ Well, perhaps someone did.” When a study announces something really big and basic, Brown suggests you do a search on that announcement; odds are that you’ll find the topic has been studied many times before. If that is the case, and no one else came to the same conclusion, you have a red flag that should have you carefully considering the new study’s methodology.

Brown’s example here is a recent study that asserted the universe isn’t expanding at an accelerating rate, contrary to earlier, well-regarded research. Brown found an edifying discussion by experts on Twitter (one of whom referred to their discussion as “headdesking”) that dismissed the study as being based on incorrect assumptions about the behavior of the supernovae it examined and as ignoring some key counter-evidence.

.@Cosmic_Horizons @ScienceAlert A glaring mistake is they assume that the properties of all the supernova have a gaussian distribution – no

— Brad Tucker (@btucker22) October 24, 2016

May I suggest reading the paper! CMB isn't going away, BAOs aren't going away, even if you accept the paper's analysis, which I do not...

— Brian Schmidt (@cosmicpinot) October 25, 2016

In general, before getting excited about claims that a scientific paradigm is overturned, check how hard experts are headdesking on Twitter.

— Katie Mack (@AstroKatie) October 25, 2016

Who Published It?

Checking the publisher of a study can sometimes help you ascertain its value. While Brown notes that a journal’s ranking isn’t a perfect indicator of its trustworthiness, it’s a start. He does note that some highly ranked journals, such as Science and Nature (an open-access subsidiary of which published the universe-expansion piece noted above), are sometimes, in his opinion, seduced by the lure of an attention-grabbing headline.

Brown points out that some journals accept papers without any peer review or other critiquing process, meaning they’re just taking the authors’ word on a study’s veracity. University of Colorado librarian Jeffrey Beall had been hosting a well-known list of predatory publishers that a skeptical reader could consult. It was mentioned in a recent New York Times article, though his site is offline as of this writing; maybe it will return.

Many of us are interested in keeping up with the latest developments in science, and we eagerly consume articles that feed our enthusiasm. Brown’s three tips can help us know how seriously to take what we’re reading, though it must be pointed out that his method is, in this case, an imperfect science of its own.