This Is Why Dark Energy Must Exist, Despite Recent Reports To The Contrary

An Oxford physicist tries to cast doubt on dark energy, but the data says otherwise.

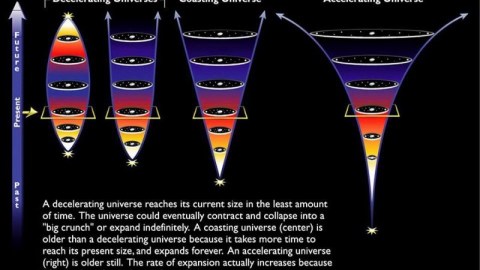

It was a mere 20 years ago that our picture of the Universe got a stunning revision. We all knew our Universe was expanding, that it was full of matter and radiation, and that most of the matter out there couldn’t be made of the same, normal stuff (atoms) that we were most familiar with. We were trying to determine, based on how the Universe was expanding, what our fate was: would we recollapse, expand forever, or be right on the border between the two?

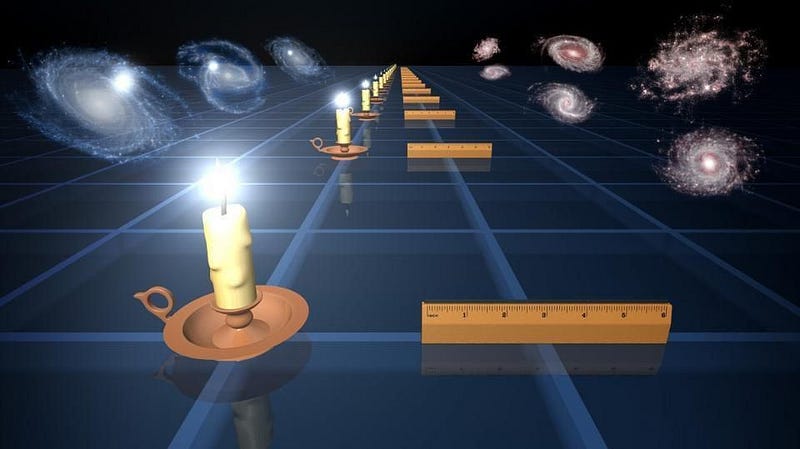

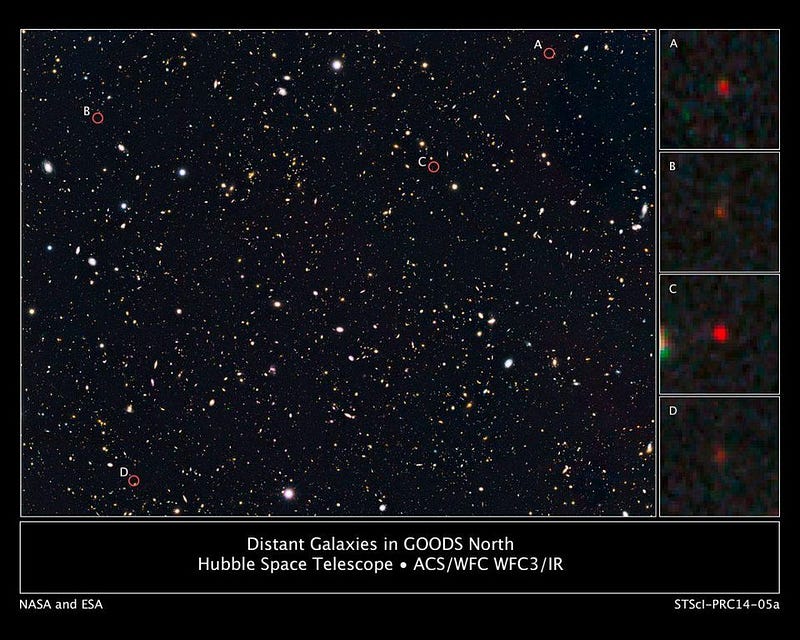

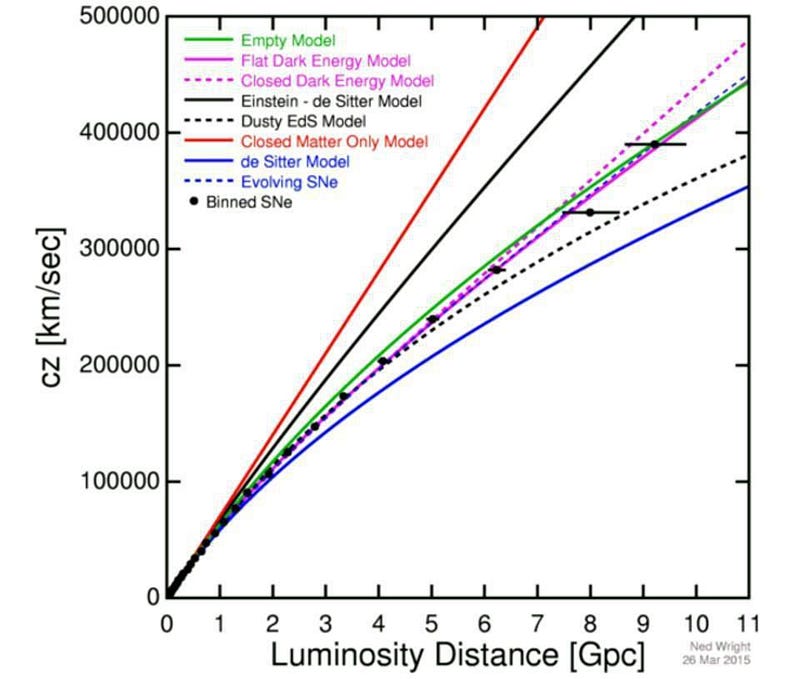

Distant supernovae of a specific type were the tool we would use to decide. In 1998, enough data had come in that two independent teams released the surprising results: the Universe would not only expand forever, but the expansion was accelerating.

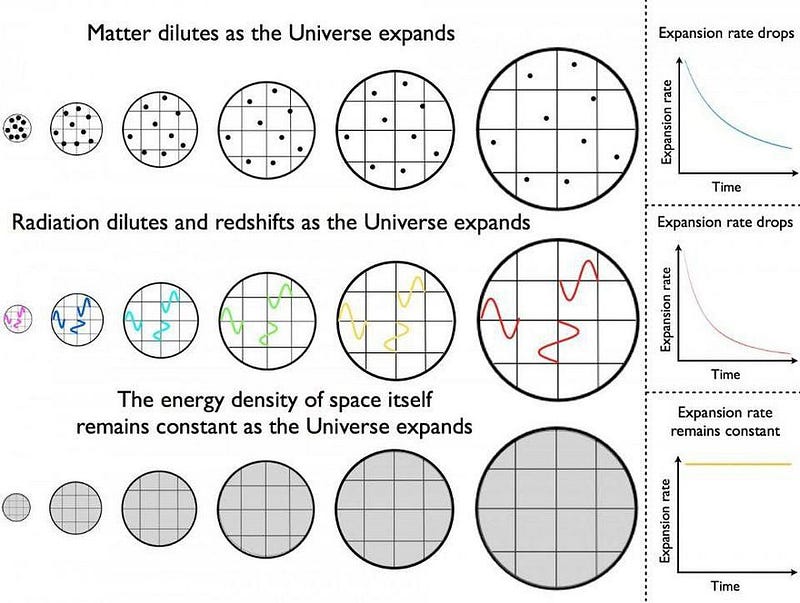

In order for this to be true, the Universe needed a new form of energy: dark energy. Whereas matter clumps and clusters together under the influence of gravity, dark energy would penetrate all of space equally, from the densest galaxy clusters to the deepest, emptiest cosmic void. Whereas matter gets less dense as the Universe expands, since the same number of particles occupy a larger volume, the density of dark energy remains constant over time.
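To make that contrast concrete, here is a minimal Python sketch. It assumes a present-day split of roughly 30% matter and 70% dark energy (round numbers chosen for illustration, not fitted values), treats dark energy as a cosmological constant, and ignores radiation. The scale factor `a` measures the size of the Universe relative to today.

```python
# Toy comparison of how matter and dark-energy densities scale as the Universe expands.
# Assumptions (illustrative only): ~30% matter and ~70% dark energy today,
# dark energy behaves as a cosmological constant, radiation is neglected.
OMEGA_M0 = 0.3   # matter fraction today (assumed)
OMEGA_DE0 = 0.7  # dark-energy fraction today (assumed)

def densities(a):
    """Return (matter, dark energy) densities in units of today's critical density.

    a is the scale factor: a = 1 today, a = 2 when the Universe is twice as large.
    """
    matter = OMEGA_M0 / a**3  # same particles spread over a volume that grows as a^3
    dark_energy = OMEGA_DE0   # constant energy per unit volume
    return matter, dark_energy

for a in (0.5, 1.0, 2.0, 4.0):
    m, de = densities(a)
    print(f"a = {a:3.1f}: matter = {m:7.4f}, dark energy = {de:.2f}, "
          f"dark-energy share = {de / (m + de):.1%}")
```

Running it shows the dark-energy share climbing as `a` grows, because the matter term falls off as 1/a³ while the dark-energy term stays put.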

It’s the total energy density of the Universe that governs what the expansion rate actually is. As time goes on and the matter density drops while the dark energy density doesn’t, dark energy becomes more and more important relative to everything else. A distant galaxy, therefore, will not just appear to move away from us: the more distant a galaxy is, the faster it will appear to recede from us, and that recession speed will increase as time goes on.

That last part, where the speed increases as time goes on, only occurs if there’s some form of dark energy in the Universe.
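One standard way to quantify “speeding up” is the deceleration parameter, q₀. For a Universe containing matter plus a cosmological constant (radiation neglected, an assumption), q₀ = Ω_m/2 − Ω_Λ, where Ω_m and Ω_Λ are today's matter and dark-energy fractions. Acceleration means q₀ < 0, which cannot happen with Ω_Λ = 0 as long as there is any matter at all. The sketch evaluates a few illustrative models; the numbers are round values, not fits.

```python
def deceleration_parameter(omega_m, omega_lambda):
    """Present-day deceleration parameter q0 for matter plus a cosmological constant.

    Radiation is neglected (assumption). q0 > 0 means the expansion is slowing;
    q0 < 0 means it is speeding up.
    """
    return omega_m / 2 - omega_lambda

# Illustrative (omega_m, omega_lambda) pairs, not fitted values.
models = [
    ("matter only", 1.0, 0.0),
    ("low matter, no dark energy", 0.3, 0.0),
    ("about 1/3 matter, 2/3 dark energy", 0.3, 0.7),
]

for label, om, ol in models:
    q0 = deceleration_parameter(om, ol)
    verdict = "accelerating" if q0 < 0 else "decelerating"
    print(f"{label:35s} q0 = {q0:+.2f} -> {verdict}")
```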

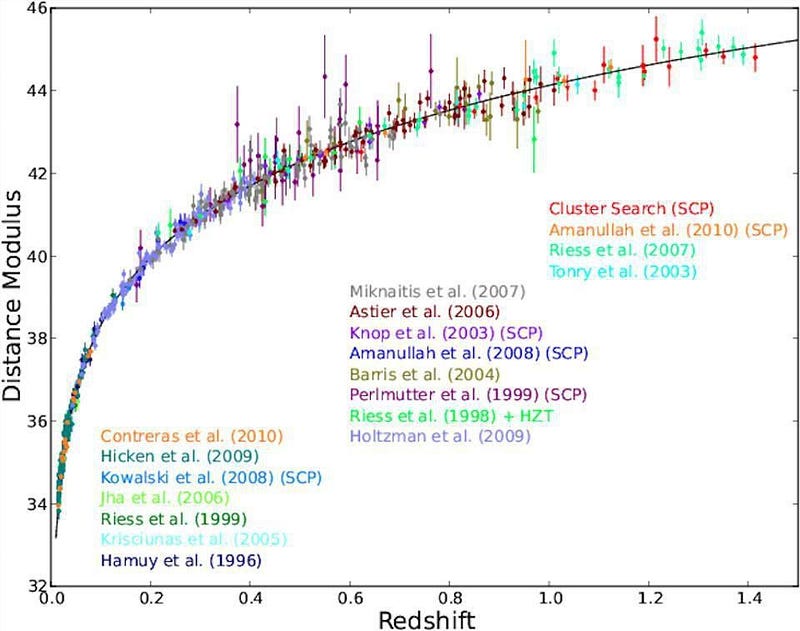

In the late 1990s, both the Supernova Cosmology Project and the High-z Supernova Search Team announced their results almost simultaneously, with both teams reaching the same conclusion: these distant supernovae are consistent with a Universe that’s dominated by dark energy, and inconsistent with a Universe that has no dark energy at all.

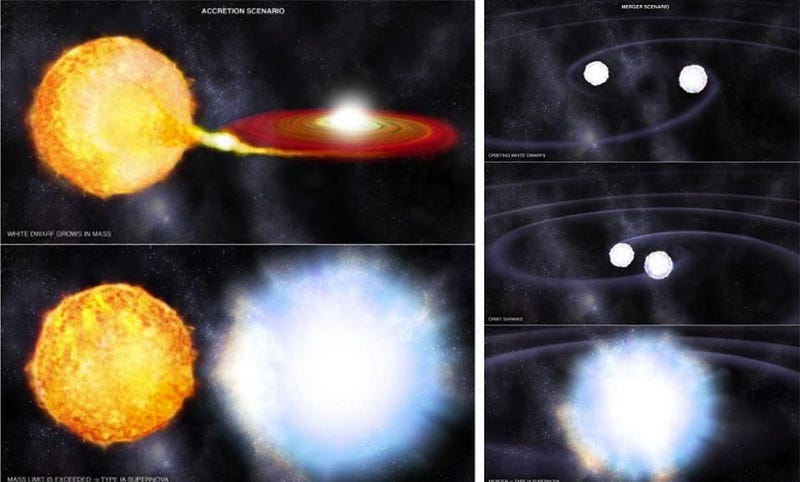

Now, 20 years later, we have more than 700 of these supernovae, and they remain among the best evidence we have for dark energy’s existence and properties. When a white dwarf — the corpse of a sun-like star — either accretes enough matter or merges with another white dwarf, it can trigger a Type Ia supernova, which is bright enough that we can observe these cosmic rarities from billions of light years away.
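Why do these supernovae reveal acceleration? Because they act as standard candles: if you know how bright one truly is, its apparent brightness tells you its distance. The sketch compares the luminosity distance, and the corresponding distance modulus m − M, for two spatially flat toy models: one with about 30% matter and 70% dark energy, and one made of matter alone. The Hubble constant of 70 km/s/Mpc and the restriction to flat models are simplifying assumptions; real analyses also fit curved models and standardize each supernova's brightness.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s
H0 = 70.0             # Hubble constant in km/s/Mpc (assumed round value)

def hubble_ratio(z, omega_m, omega_lambda):
    """E(z) = H(z)/H0 for a spatially flat model with matter and a cosmological constant."""
    return math.sqrt(omega_m * (1 + z) ** 3 + omega_lambda)

def luminosity_distance_mpc(z, omega_m, omega_lambda, steps=1000):
    """Luminosity distance in Mpc for a flat Universe, integrating 1/E(z) with Simpson's rule."""
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = z / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += weight / hubble_ratio(i * h, omega_m, omega_lambda)
    comoving = (C_KM_S / H0) * total * h / 3
    return (1 + z) * comoving

def distance_modulus(d_l_mpc):
    """m - M = 5 log10(d_L / 10 pc), with d_L given in Mpc."""
    return 5 * math.log10(d_l_mpc * 1e6 / 10)

for z in (0.1, 0.5, 1.0):
    d_lcdm = luminosity_distance_mpc(z, 0.3, 0.7)  # dark-energy model
    d_eds = luminosity_distance_mpc(z, 1.0, 0.0)   # matter-only model
    extra_dimming = distance_modulus(d_lcdm) - distance_modulus(d_eds)
    print(f"z = {z}: d_L = {d_lcdm:6.0f} Mpc vs {d_eds:6.0f} Mpc; "
          f"dark-energy model fainter by {extra_dimming:.2f} mag")
```

In the dark-energy model, a supernova at a given redshift sits farther away and therefore looks fainter than it would in a matter-only Universe; that extra dimming is the kind of signal the 1998 teams reported.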

By the middle of the first decade of the 2000s, all of the reasonable alternative explanations for this observed phenomenon had been ruled out, and dark energy was overwhelmingly accepted by the scientific community as part of our Universe. Three of the leaders of those two teams — Saul Perlmutter, Brian Schmidt, and Adam Riess — were awarded the 2011 Nobel Prize in Physics for this result.

And yet, not everyone is convinced. Two weeks ago, Subir Sarkar of Oxford, along with a couple of collaborators, put forth a paper claiming that even today, with 740 Type Ia supernovae to work from, the supernova evidence supports dark energy only at the 3-sigma confidence level: far short of the 5-sigma standard conventionally required to claim a discovery in physics. This is his second paper making this allegation, and the results have gotten quite a bit of news coverage.
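For readers outside physics, here is what those sigma levels mean as probabilities, assuming Gaussian statistics. The sketch computes the two-sided chance that a pure fluctuation lands at least n standard deviations away from expectation; particle physicists often quote the one-sided value for 5 sigma, which is half as large.

```python
import math

def two_sided_tail(n_sigma):
    """Probability that a Gaussian fluctuation lands beyond +/- n_sigma."""
    return math.erfc(n_sigma / math.sqrt(2))

for n in (1, 2, 3, 5):
    p = two_sided_tail(n)
    print(f"{n} sigma: chance of a fluke ~ {p:.2e} (about 1 in {1 / p:,.0f})")
```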

Unfortunately, Sarkar is not only wrong, he’s wrong in a very specific way. Whenever you work in a field that isn’t your own (he’s a particle physicist, not an astrophysicist), you have to understand the assumptions that field makes, how they differ from the ones made in your own field, and why. If you neglect those assumptions, you get the wrong answer, so you have to be careful about how you do your analysis.

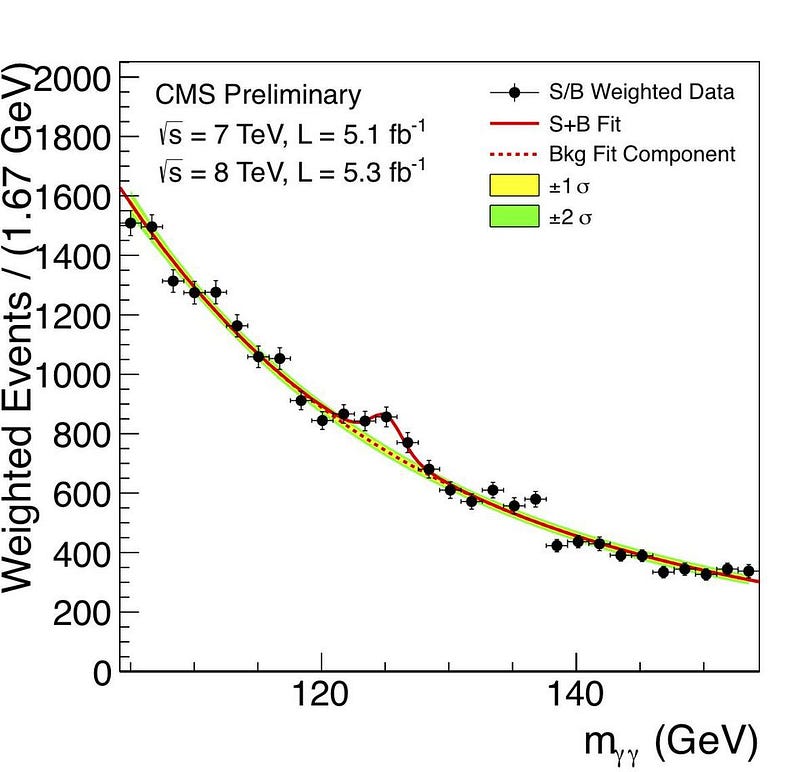

In particle physics, there are always assumptions you make about event rates, backgrounds, and what you expect to see. In order to make a new discovery, you have to subtract out the signal you anticipate from all other known sources, and then examine what remains. It’s how we’ve discovered every new particle for generations, including, most recently, the Higgs.

If you don’t make those assumptions, you won’t be able to tease the legitimate signal out of the noise; there will be too much going on, and your significance will be too low. In astronomy and astrophysics, we make assumptions too, in order to make our discoveries. Just as particle physicists rely on the particles they’ve already measured, and on their well-measured interactions, to discover new ones, we rely on what we already know about the Universe.
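A back-of-the-envelope version of this point uses the common approximation Z ≈ s/√(b + σ_b²) for an excess of s events over an expected background of b events that is known only to within ±σ_b. The event counts below are hypothetical, and this is a rough rule of thumb rather than a full likelihood analysis; the takeaway is only that the same excess becomes less significant as the background becomes less certain.

```python
import math

def approx_significance(signal, background, background_uncertainty=0.0):
    """Rough significance of an excess: s / sqrt(b + sigma_b^2).

    A common back-of-the-envelope approximation for large event counts;
    not a substitute for a full likelihood analysis.
    """
    return signal / math.sqrt(background + background_uncertainty ** 2)

s, b = 100.0, 400.0  # hypothetical event counts
for sigma_b in (0.0, 10.0, 40.0):
    z = approx_significance(s, b, sigma_b)
    print(f"background known to +/-{sigma_b:4.0f} events -> about {z:.1f} sigma")
```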

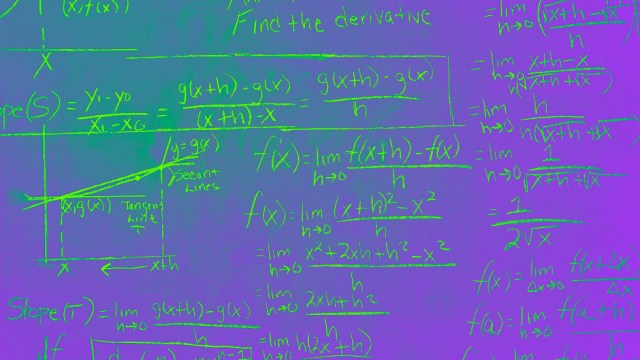

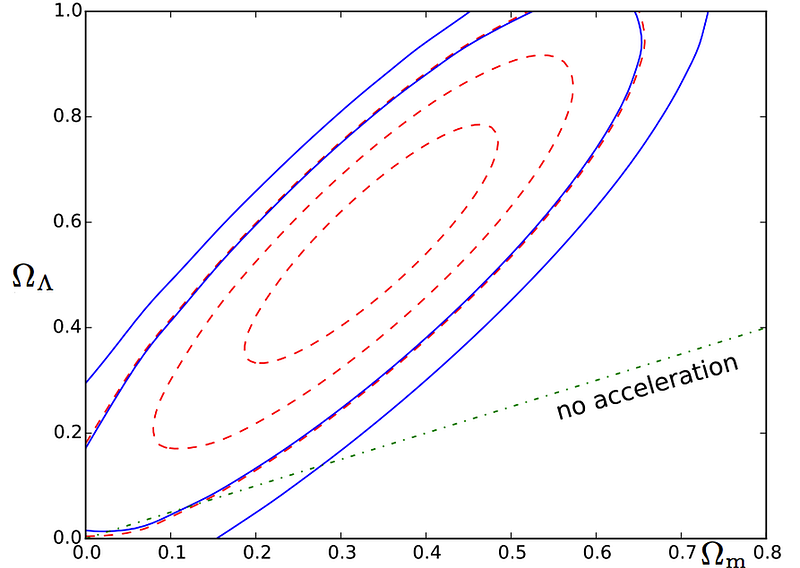

We assume that General Relativity is correct as our theory of gravity. We assume that the Universe is filled with matter and energy that’s roughly of the same density everywhere. We assume that Hubble’s Law is valid. And we assume that these supernovae are good distance indicators for how the Universe expands. Sarkar makes these assumptions as well, and here’s the graph he arrives at (from the 2016 paper) for the supernova data.

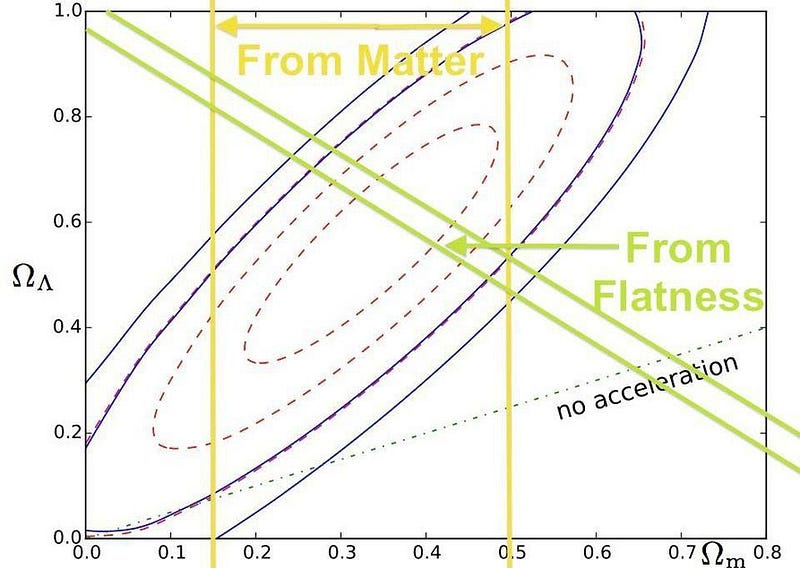

The y-axis indicates the percentage of the Universe that’s made of dark energy; the x-axis, the percentage that’s matter, normal and dark combined. The authors emphasize that while the best fit for the data does support the accepted model — a Universe that’s roughly 2/3 dark energy and 1/3 matter — the red contours, representing 1σ, 2σ, and 3σ confidence levels, aren’t overwhelmingly compelling. As Subir Sarkar says,

> We analysed the latest catalogue of 740 Type Ia supernovae — over 10 times bigger than the original samples on which the discovery claim was based — and found that the evidence for accelerated expansion is, at most, what physicists call ‘3 sigma’. This is far short of the ‘5 sigma’ standard required to claim a discovery of fundamental significance.
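Contours like the red ones in that plot are conventionally drawn where the fit's χ² rises by a fixed amount above its best-fit value. Assuming Gaussian errors and two fitted parameters (the matter and dark-energy fractions), those thresholds can be computed exactly with the standard library, since the χ² distribution with two degrees of freedom has CDF 1 − e^(−x/2). This is the textbook convention; the paper's own procedure may differ in detail.

```python
import math

def coverage(n_sigma):
    """Fraction of a 1D Gaussian within +/- n_sigma (e.g. about 68.27% for 1 sigma)."""
    return math.erf(n_sigma / math.sqrt(2))

def delta_chi2_two_params(n_sigma):
    """Delta chi^2 above the best fit that encloses the n-sigma region for 2 parameters.

    For 2 degrees of freedom the chi-squared CDF is 1 - exp(-x/2),
    so it can be inverted in closed form.
    """
    return -2 * math.log(1 - coverage(n_sigma))

for n in (1, 2, 3):
    print(f"{n} sigma contour: Delta chi^2 = {delta_chi2_two_params(n):.2f}")
```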

Sure, you get ‘3 sigma’ if you make only those assumptions. But what about the assumptions he didn’t make, that he really should have?

You know, like the fact that the Universe contains matter. Yes, any model with zero matter density (the left edge of the x-axis) is ruled out, because the Universe contains matter. In fact, we’ve measured how much matter the Universe has, and it’s around 30%. Even in 1998, that value was known to a certain precision: it couldn’t be less than about 14% or more than about 50%. So right away, we can place stronger constraints.

In addition, as soon as the first WMAP data on the Cosmic Microwave Background came back, we recognized that the Universe was almost perfectly spatially flat. That means that the two numbers (the one on the y-axis and the one on the x-axis) have to add up to 1. This information from WMAP first came to our attention in 2003, although earlier experiments like COBE, BOOMERanG and MAXIMA had hinted at it. If we add the flatness constraint in, the “wiggle room” goes way, way down.
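The flatness argument can be checked with simple arithmetic. Assuming the non-matter component is a cosmological constant and neglecting radiation, flatness sets Ω_Λ = 1 − Ω_m, so the deceleration parameter q₀ = Ω_m/2 − Ω_Λ becomes 3Ω_m/2 − 1, which is negative (accelerating) whenever Ω_m < 2/3. The sketch scans the 14% to 50% matter range quoted above along that flat line.

```python
def deceleration_parameter(omega_m, omega_lambda):
    """q0 for matter plus a cosmological constant, radiation neglected (assumption)."""
    return omega_m / 2 - omega_lambda

# Matter bounds quoted in the text as already known in 1998: roughly 14% to 50%.
OMEGA_M_MIN, OMEGA_M_MAX = 0.14, 0.50

# Flatness forces omega_m + omega_lambda = 1, so scan along that line only.
for i in range(8):
    om = OMEGA_M_MIN + i * (OMEGA_M_MAX - OMEGA_M_MIN) / 7
    ol = 1.0 - om
    q0 = deceleration_parameter(om, ol)
    print(f"Omega_m = {om:.2f}, Omega_Lambda = {ol:.2f}, q0 = {q0:+.2f}")
```

Every point in that range gives q₀ < 0, even before a single supernova is included.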

In fact, this crudely hand-drawn map I’ve made, overlaying the Sarkar analysis, matches almost exactly the modern joint analysis of the three major sources of data: supernovae, the cosmic microwave background, and baryon acoustic oscillations.

What this analysis actually shows is just how incredible our data is: even using none of our knowledge about the matter in the Universe or the flatness of space, we can still arrive at a better-than-3σ result supporting an accelerating Universe.

But it also underscores something else that’s far more important. Even if all of the supernova data were thrown out and ignored, we have more than enough evidence at present to be extremely confident that the Universe is accelerating, and made of about 2/3 dark energy.

(Note that the new, 2018 paper makes a slightly different argument based on sky direction and distance to argue that the supernova evidence is only at 3-sigma significance. It is no more compelling than the 2016 argument that has been debunked here.)

We do not do science in a vacuum, completely ignoring all the other pieces of evidence that our scientific foundation builds upon. We use the information we have and know about the Universe to draw the best, most robust conclusions we can. It is not important that your data meet a certain arbitrary standard on their own; what matters is that your data can demonstrate which conclusions are inescapable given our Universe as it actually is.

Our Universe contains matter, is at least close to spatially flat, and has supernovae that allow us to determine how it’s expanding. When we put that picture together, a dark energy-dominated Universe is inescapable. Just remember to look at the whole picture, or you might miss out on how amazing it truly is.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.