The Simplest Solution To The Expanding Universe’s Biggest Controversy

Different measurements of the Universe’s rate of expansion give inconsistent results. But this simple solution could fix everything.

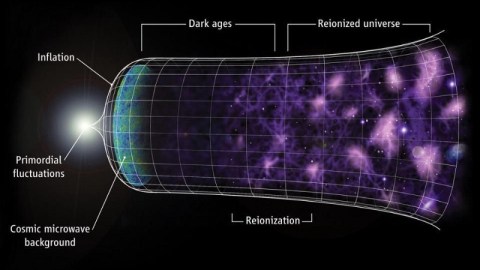

In 1915, Einstein’s theory of General Relativity gave us a brand new theory of gravity, based on the geometrical concept of curved spacetime. Matter and energy told space how to curve; curved space told matter and energy how to move. By 1922, scientists had discovered that if you fill the Universe uniformly with matter and energy, it won’t remain static, but will either expand or contract. By the end of the 1920s, led by the observations of Edwin Hubble, we had discovered our Universe was expanding, and had our first measurement of the expansion rate.

The journey to pin down exactly what that rate is has now hit a snag, with two different measurement techniques yielding inconsistent results. It could be an indicator of new physics. But there could be an even simpler solution, and nobody wants to talk about it.

First, some background: when we see a distant galaxy, we’re seeing it as it was in the past. But you can’t simply look at light that took a billion years to arrive and conclude that the galaxy is a billion light years away. Instead, the galaxy will actually be more distant than that.

Why’s that? Because the space that makes up our Universe itself is expanding. This prediction of Einstein’s General Relativity, first recognized in the 1920s and then observationally validated by Edwin Hubble several years later, has been one of the cornerstones of modern cosmology.
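To make this concrete, here is a minimal sketch comparing the distance light travels from a galaxy with how far away that galaxy is today. It assumes a flat Universe containing only matter and dark energy, uses the Planck values quoted later in this piece (67.4 km/s/Mpc, 31.5% matter, 68.5% dark energy), and ignores the tiny radiation component. The function names are illustrative only, not taken from any cosmology library.

```python
import math

C_KM_S = 299_792.458      # speed of light in km/s
MPC_TO_GLY = 3.26156e-3   # 1 Mpc is about 3.26 million light years (0.00326 billion)

def hubble(z, h0=67.4, omega_m=0.315, omega_l=0.685):
    """Expansion rate H(z) in km/s/Mpc for a flat matter + dark-energy model (radiation ignored)."""
    return h0 * math.sqrt(omega_m * (1 + z) ** 3 + omega_l)

def integrate(f, a, b, n=10_000):
    """Composite trapezoid rule; accurate enough for these smooth integrands."""
    step = (b - a) / n
    total = 0.5 * (f(a) + f(b)) + sum(f(a + i * step) for i in range(1, n))
    return total * step

def distances_gly(z):
    """Return (light-travel distance, present-day comoving distance) in billions of light years."""
    # Light-travel distance = c * lookback time = c * integral of dz / ((1 + z) H(z)).
    light_travel = integrate(lambda x: C_KM_S / ((1 + x) * hubble(x)), 0.0, z)
    # Present-day (comoving) distance = c * integral of dz / H(z); always the larger of the two.
    comoving = integrate(lambda x: C_KM_S / hubble(x), 0.0, z)
    return light_travel * MPC_TO_GLY, comoving * MPC_TO_GLY

for z in (0.1, 0.5, 1.0):
    travel, now = distances_gly(z)
    print(f"z = {z}: light traveled {travel:.2f} Gly, galaxy is now {now:.2f} Gly away")
```

The extra factor of 1 + z in the light-travel integral is the whole story: the space the light crossed kept stretching after the light passed through it, so the galaxy ends up farther away than the light-travel time alone suggests.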

The big question is how to measure that expansion. Every method relies on the same general recipe:

- you pick a point in the Universe’s past where you can make an observation,

- you measure the properties you can measure about that distant point,

- and you calculate how the Universe would have had to expand from then until now to reproduce what you see.

That distant point can be chosen in a wide variety of ways, ranging from objects in the nearby Universe to ones billions of light years away.

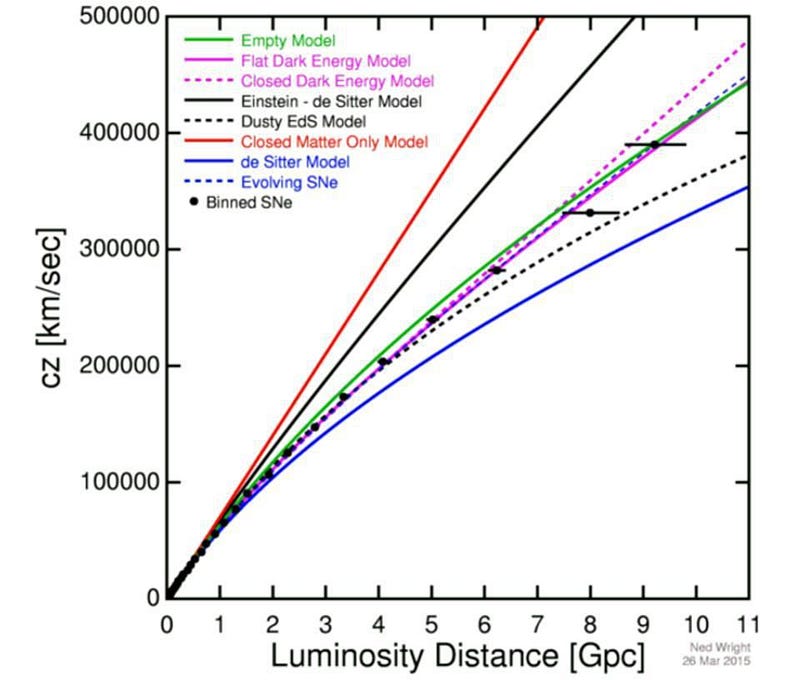

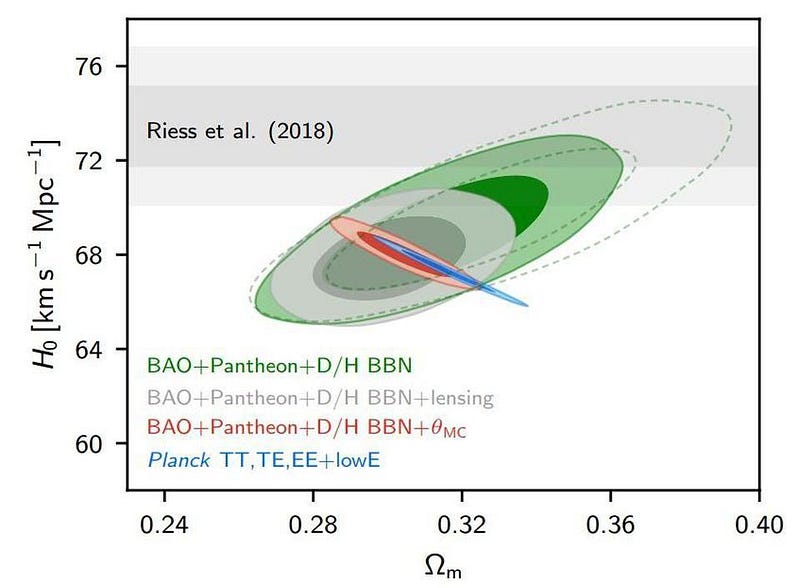

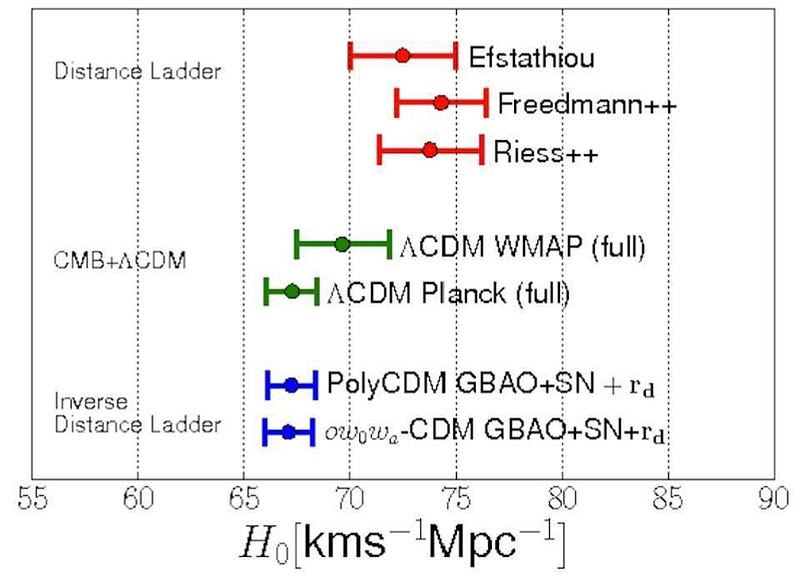

For many years now, a controversy has been brewing. Two different measurement methods, one using the cosmic distance ladder and one using the first observable light in the Universe, give mutually inconsistent results. The tension has enormous implications: it may mean that something is wrong with how we conceive of the Universe.

There is another explanation, however, that’s much simpler than the idea that something is wrong with the Universe or that new physics is required. It’s possible that one (or more) method suffers from a systematic error: an inherent, as-yet-unidentified flaw in the method that biases its results. Either method (or even both) could be at fault. Here’s the story of how.

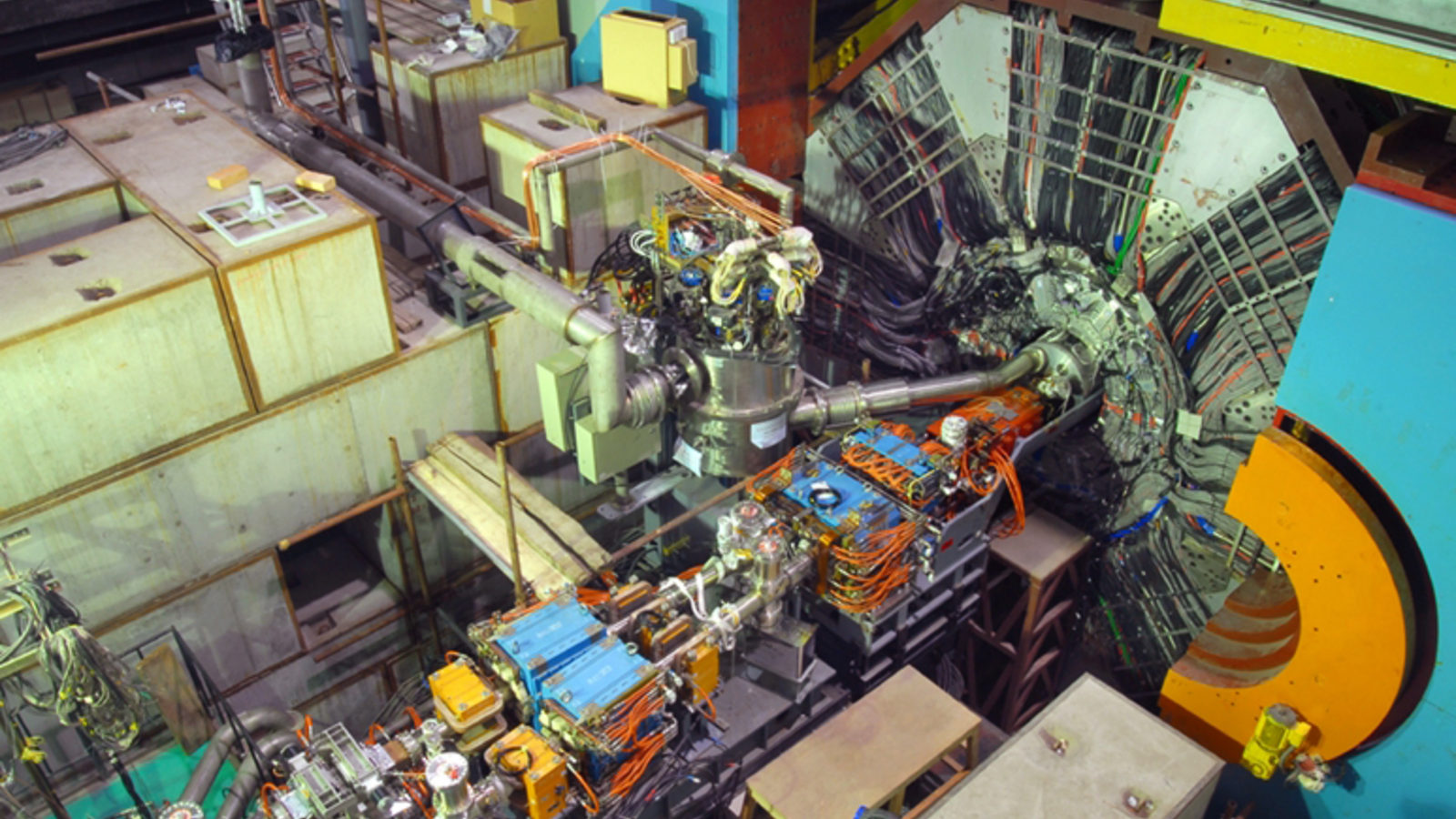

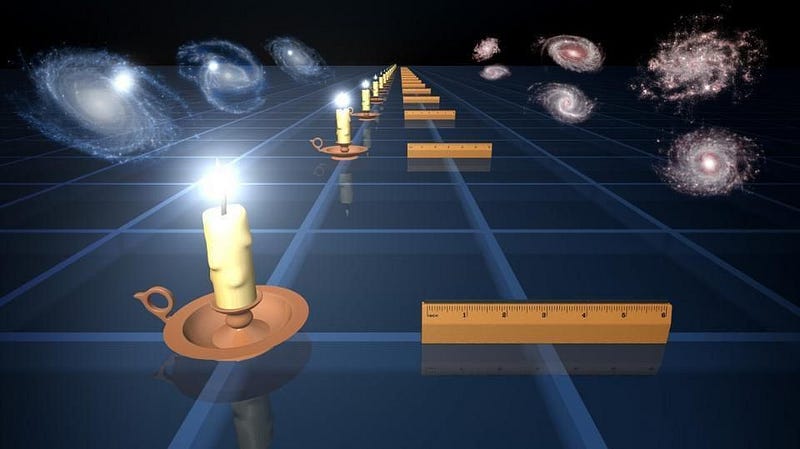

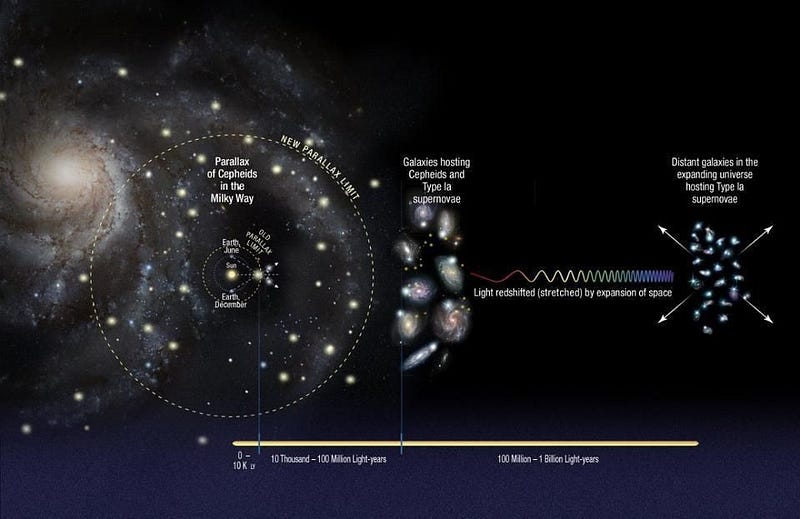

The cosmic distance ladder is the oldest method we have for computing the distances to faraway objects. You start by measuring something close by: the distance to the Sun, for example. Then you use parallax, the apparent shift in a nearby star’s position as the Earth moves around the Sun, to calculate the distances to nearby stars directly. Some of these nearby stars are variable stars like Cepheids, which can also be measured accurately in nearby and distant galaxies, and some of those galaxies will host events like type Ia supernovae, which are among the most distant objects of all.
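Here is a minimal sketch of how the first two rungs connect, using the standard parallax rule (distance in parsecs = 1 / parallax in arcseconds) and the distance modulus, m − M = 5 log₁₀(d / 10 pc). All of the numbers are hypothetical, and the sketch assumes that a distant Cepheid with the same pulsation period as the nearby one has the same intrinsic brightness, ignoring dust and every other real-world correction.

```python
import math

def parallax_distance_pc(parallax_arcsec):
    """Rung 1: distance in parsecs is the reciprocal of the parallax angle in arcseconds."""
    return 1.0 / parallax_arcsec

def absolute_magnitude(apparent_mag, distance_pc):
    """Calibrate a standard candle from its known distance: M = m - 5 log10(d / 10 pc)."""
    return apparent_mag - 5 * math.log10(distance_pc / 10.0)

def candle_distance_pc(apparent_mag, absolute_mag):
    """Next rung: invert the distance modulus, d = 10 pc * 10**((m - M) / 5)."""
    return 10.0 * 10 ** ((apparent_mag - absolute_mag) / 5)

# Hypothetical nearby Cepheid: parallax of 0.002 arcsec, apparent magnitude 6.0.
nearby_distance = parallax_distance_pc(0.002)
cepheid_abs_mag = absolute_magnitude(6.0, nearby_distance)

# Hypothetical Cepheid with the same period in another galaxy, seen at magnitude 22.0.
galaxy_distance = candle_distance_pc(22.0, cepheid_abs_mag)
print(f"nearby star: {nearby_distance:.0f} pc; other galaxy: {galaxy_distance / 1e6:.2f} Mpc")
```

Because the second rung borrows its calibration from the first, the 16-magnitude difference between the two stars becomes a factor of 10^3.2 (about 1,585) in distance, applied directly on top of the parallax distance.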

Make all of these measurements, and you can derive distances to galaxies many billions of light years away. Combine those distances with easily measured redshifts, and you’ll arrive at a measurement of the Universe’s expansion rate.
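For nearby galaxies, that last step reduces to Hubble’s law, v = H₀ × d, with recession velocity v ≈ c × z. The sketch below fits H₀ as the least-squares slope of a line through the origin. The galaxy sample is made up for illustration, and the v ≈ cz approximation only holds for redshifts much smaller than 1; real analyses use the full cosmological relation.

```python
C_KM_S = 299_792.458   # speed of light in km/s

def fit_hubble_constant(distances_mpc, redshifts):
    """Least-squares slope of v = H0 * d through the origin, with v = c * z (valid only for z << 1)."""
    velocities = [C_KM_S * z for z in redshifts]
    numerator = sum(v * d for v, d in zip(velocities, distances_mpc))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator

# Hypothetical galaxies: ladder distances (Mpc) and their measured redshifts.
distances = [20.0, 45.0, 80.0, 150.0]
redshifts = [0.0049, 0.0110, 0.0195, 0.0366]

h0 = fit_hubble_constant(distances, redshifts)
print(f"H0 is roughly {h0:.1f} km/s/Mpc")
```

Notice that H₀ comes out inversely proportional to the distances fed in: every rung of the ladder sits inside that `distances` list.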

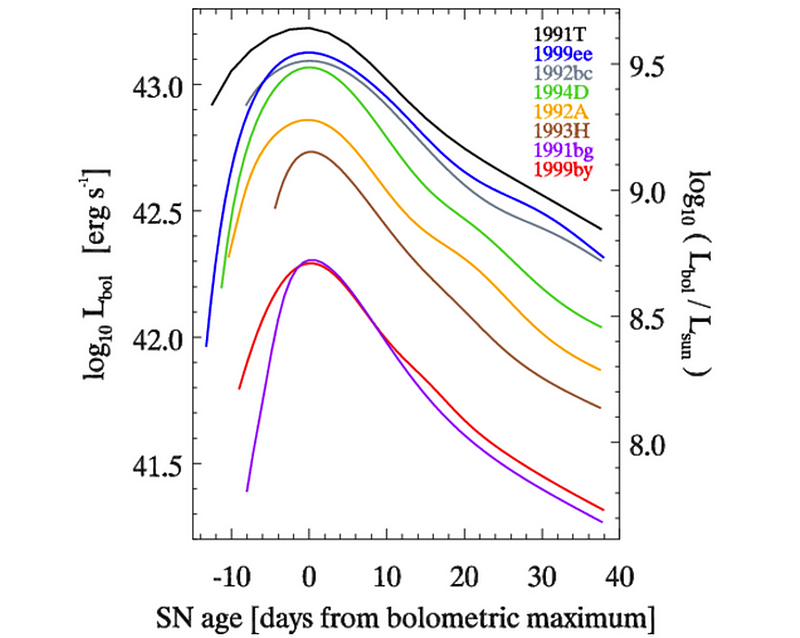

This is how dark energy was first discovered, and our best cosmic distance ladder measurements give an expansion rate of 73.2 km/s/Mpc, with an uncertainty of less than 3%.

However.

If there’s an error at any stage of this process, it propagates to every higher rung. We can be pretty confident that we’ve measured the Earth-Sun distance correctly, but parallax measurements are currently being revised by the Gaia mission, and substantial uncertainties remain. Cepheids may depend on additional, unaccounted-for variables that skew the results. And type Ia supernovae have recently been shown to vary by quite a bit, perhaps 5%, from what was previously thought. An undiscovered error is the most terrifying possibility for many scientists who work on the cosmic distance ladder.
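Random and systematic errors behave very differently on a ladder. In this minimal sketch, the per-rung uncertainties are hypothetical placeholders, not published values. Independent random errors add in quadrature, but a single systematic bias in the distance scale carries straight through to H₀, since H₀ = v / d.

```python
import math

# Hypothetical 1-sigma fractional uncertainties for each rung (illustrative, not published values).
rung_uncertainties = {
    "parallax anchors": 0.015,
    "Cepheid calibration": 0.012,
    "type Ia supernova standardization": 0.015,
}

def combined_fractional_uncertainty(uncertainties):
    """Independent random errors on the rungs add in quadrature."""
    return math.sqrt(sum(u * u for u in uncertainties.values()))

def corrected_h0(h0_measured, distance_underestimate):
    """If every ladder distance is too small by the fraction `distance_underestimate`,
    the true distances are d * (1 + f); since H0 = v / d, the true H0 is H0 / (1 + f)."""
    return h0_measured / (1 + distance_underestimate)

print(f"combined random error: {combined_fractional_uncertainty(rung_uncertainties):.1%}")
print(f"73.2 km/s/Mpc with distances 8.5% too small -> {corrected_h0(73.2, 0.085):.1f} km/s/Mpc")
```

The quoted error bar only reflects the first function; a bias like the one in the second never shows up in it, which is exactly why an unidentified systematic is so worrying.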

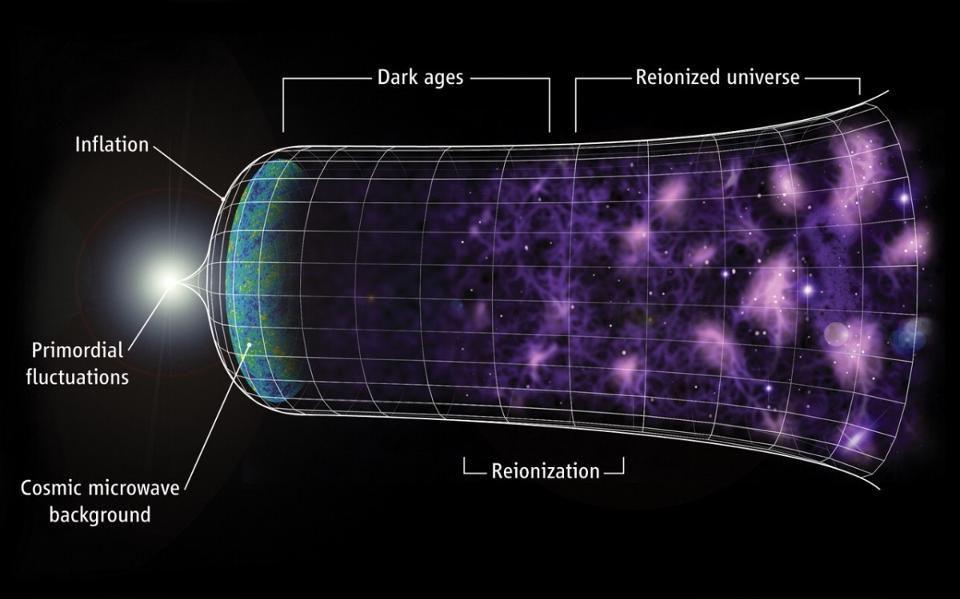

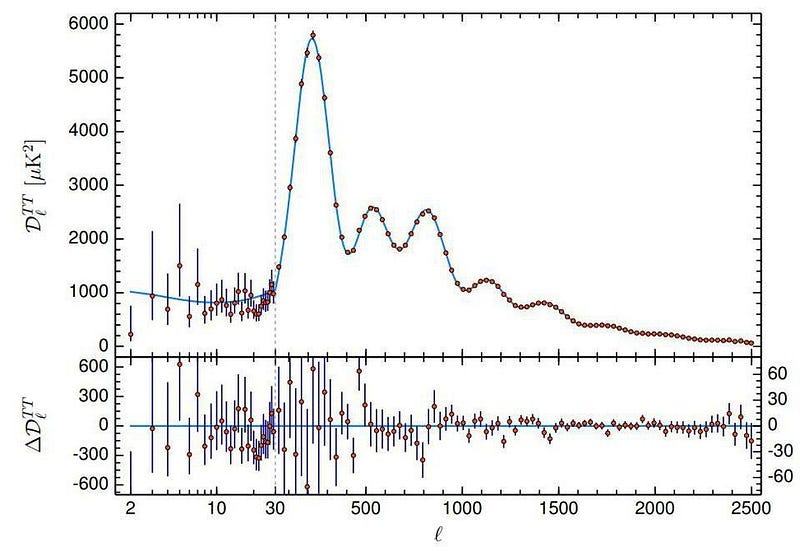

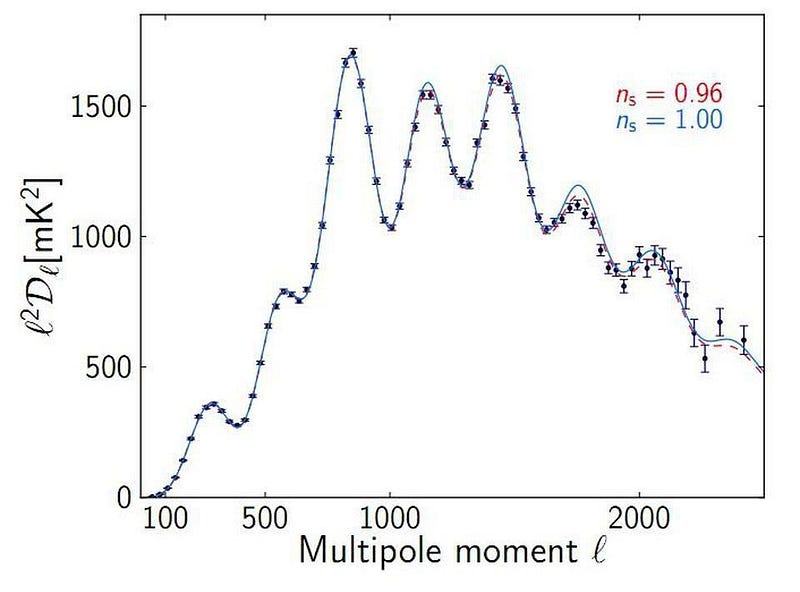

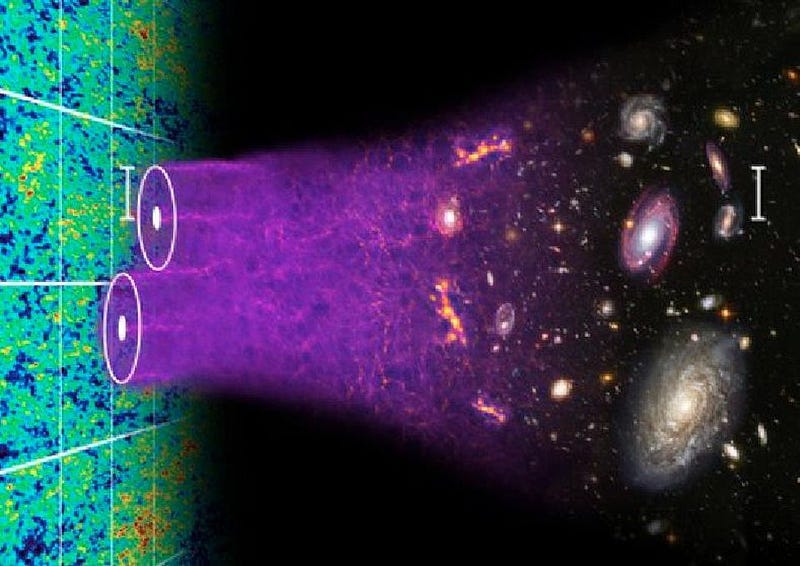

On the other hand, we have measurements of the Universe’s composition and expansion rate from the earliest available picture of it: the Cosmic Microwave Background. Its minuscule, 1-part-in-30,000 temperature fluctuations display a very specific pattern on all scales, from the largest all-sky ones down to 0.07° or so, where the resolution is limited by the fundamental astrophysics of the Universe itself.
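Those two numbers can be translated into more familiar units with a little arithmetic. The sketch assumes a mean CMB temperature of about 2.7255 K and the common rule of thumb that an angular scale θ corresponds to the multipole ℓ ≈ 180° / θ used in CMB power spectra.

```python
CMB_MEAN_TEMPERATURE_K = 2.7255   # measured mean temperature of the CMB, in kelvin

def fluctuation_microkelvin(fraction=1 / 30_000):
    """Typical temperature fluctuation for a given fractional amplitude, in microkelvin."""
    return CMB_MEAN_TEMPERATURE_K * fraction * 1e6

def multipole_for_angle(angle_deg):
    """Rule-of-thumb conversion from angular scale to multipole: ell ~ 180 deg / theta."""
    return 180.0 / angle_deg

print(f"{fluctuation_microkelvin():.0f} microkelvin, ell ~ {multipole_for_angle(0.07):.0f}")
```

So the fluctuations are a few tens of millionths of a degree, traced out to multipoles in the low thousands.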

Based on the full suite of data from Planck, we have exquisite measurements for what the Universe is made of and how it’s expanded over its history. The Universe is 31.5% matter (where 4.9% is normal matter and the rest is dark matter), 68.5% dark energy, and just 0.01% radiation. The Hubble expansion rate, today, is determined to be 67.4 km/s/Mpc, with an uncertainty of only around 1%. This creates an enormous tension with the cosmic distance ladder results.
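A rough way to quantify “enormous tension” is to express the gap in units of the combined uncertainty. This sketch treats the quoted percentages as 1σ Gaussian errors and assumes the two measurements are independent. Because the ladder error is only stated as “less than 3%”, plugging in 3% gives a lower bound on the significance; a tighter error pushes it higher.

```python
import math

def tension_sigma(value_a, frac_err_a, value_b, frac_err_b):
    """Gap between two measurements in units of their combined 1-sigma error,
    assuming independent, Gaussian uncertainties."""
    sigma_a = value_a * frac_err_a
    sigma_b = value_b * frac_err_b
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)

LADDER_H0, PLANCK_H0 = 73.2, 67.4   # km/s/Mpc, as quoted in the text

print(f"fractional gap: {LADDER_H0 / PLANCK_H0 - 1:.1%}")
for ladder_err in (0.03, 0.02):      # the stated 3% bound, then a hypothetical tighter value
    sigma = tension_sigma(LADDER_H0, ladder_err, PLANCK_H0, 0.01)
    print(f"ladder error {ladder_err:.0%}: about {sigma:.1f} sigma apart")
```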

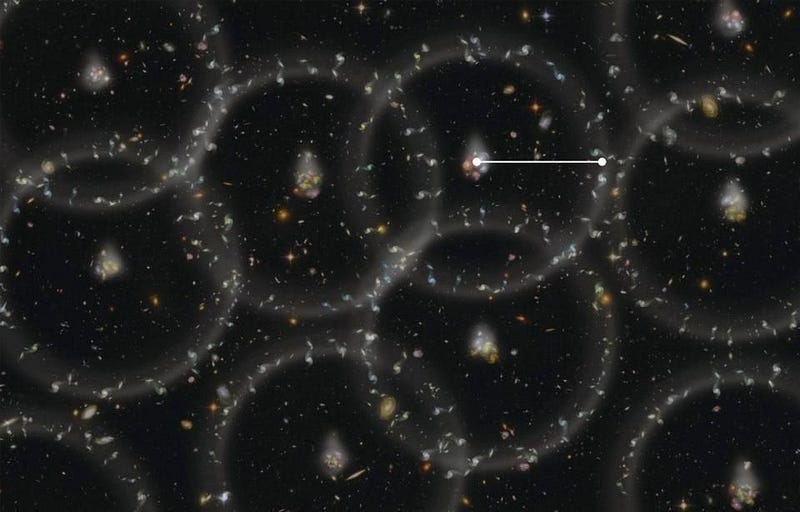

In addition, a third measurement comes from the distant Universe, based on the way that galaxies cluster together on large scales. Pick any galaxy, and you can ask a simple-sounding question: what is the probability of finding another galaxy a specific distance away?

Based on what we know about dark matter and normal matter, there’s an enhanced probability of finding a galaxy 500 million light years away from another, compared with 400 million or 600 million. That figure applies today; because the Universe was smaller in the past, the distance scale of this enhancement was smaller too, growing as the Universe expands. This method, known as the inverse distance ladder, provides a third way to measure the expanding Universe. It also gives an expansion rate of around 67 km/s/Mpc, again with a small uncertainty.
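The key relation is that lengths stretch with the expansion: at redshift z, the Universe was smaller by a factor of 1 + z. This minimal sketch takes the 500-million-light-year figure above as today’s scale and shows how large that preferred separation was when light at various redshifts was emitted. Comparing how this “standard ruler” appears at different redshifts is what lets the method reconstruct the expansion history.

```python
BAO_SCALE_TODAY_MLY = 500.0   # preferred galaxy separation today, in millions of light years (from the text)

def bao_scale_at_redshift(z, scale_today=BAO_SCALE_TODAY_MLY):
    """Physical size of the preferred separation when light at redshift z was emitted.
    Lengths scale with the expansion factor a = 1 / (1 + z)."""
    return scale_today / (1 + z)

for z in (0.0, 0.5, 1.0, 2.0):
    print(f"z = {z}: about {bao_scale_at_redshift(z):.0f} million light years")
```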

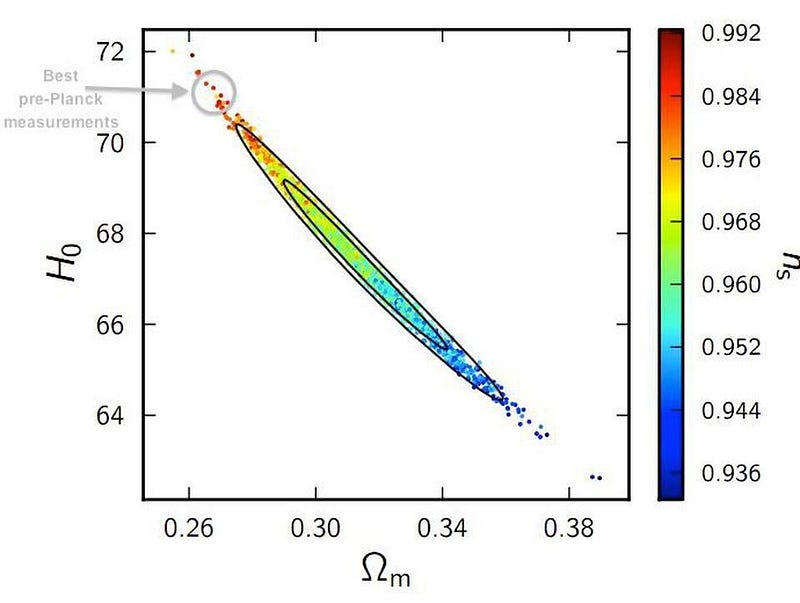

Now, it’s possible that both of these measurements have a flaw in them, too. In particular, many of the underlying parameters are correlated, meaning that if you try to increase one, you have to adjust others up or down to compensate. While the data from Planck indicate a Hubble expansion rate of 67.4 km/s/Mpc, that rate could be higher, like 72 km/s/Mpc. If it were, we would simply need a smaller amount of matter (26% instead of 31.5%), a larger amount of dark energy (74% instead of 68.5%), and a larger scalar spectral index (ns) to characterize the density fluctuations (0.99 instead of 0.96).
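The matter and dark energy trade-off can be roughly illustrated with an approximate rule of thumb: CMB data tightly pin down the combination Ω_m h³ (where h = H₀ / 100 km/s/Mpc), so raising H₀ forces the matter fraction Ω_m down. The sketch holds Ω_m h³ fixed at its 67.4 km/s/Mpc value and assumes a flat Universe (dark energy = 1 − Ω_m, radiation ignored). The exponent 3 is an approximation, and the spectral index ns is not modeled at all.

```python
def shift_along_degeneracy(h0_new, h0_ref=67.4, omega_m_ref=0.315, power=3):
    """Hold omega_m * h**power fixed (an approximate CMB degeneracy; power=3 is an assumption)
    and return (omega_m, omega_dark_energy) for a flat Universe, ignoring radiation."""
    h_ref, h_new = h0_ref / 100, h0_new / 100
    omega_m = omega_m_ref * (h_ref / h_new) ** power
    return omega_m, 1 - omega_m

omega_m, omega_de = shift_along_degeneracy(72.0)
print(f"H0 = 72: matter {omega_m:.1%}, dark energy {omega_de:.1%}")
```

This lands close to the 26% matter and 74% dark energy quoted above, which shows how far the other parameters must move to accommodate a higher expansion rate.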

This is deemed highly unlikely, but it illustrates how a single small, overlooked flaw could keep these independent measurements from aligning.

Many problems arise for cosmology if the teams measuring the Cosmic Microwave Background and the inverse distance ladder are wrong. Based on the measurements we have today, the Universe should not have the low dark matter density or the high scalar spectral index that a large Hubble constant would imply. If the value truly is closer to 73 km/s/Mpc, we may be headed for a cosmic revolution.

On the other hand, if the cosmic distance ladder team is wrong, owing to a fault in any rung on the distance ladder, the crisis is completely evaded. There was one overlooked systematic, and once it’s resolved, every piece of the cosmic puzzle falls perfectly into place. Perhaps the value of the Hubble expansion rate really is somewhere between 66.5 and 68 km/s/Mpc, and all we had to do was identify one astronomical flaw to get there.

The possibility of needing to overhaul many of the most compelling conclusions we’ve reached over the past two decades is fascinating, and worth investigating to the fullest. Both groups may be right, and there may be a physical reason why the nearby measurements are skewed relative to the more distant ones. Or both groups may have erred.

But this controversy could end with the astronomical equivalent of OPERA’s loose cable, the faulty connection that explained away that experiment’s apparently faster-than-light neutrinos. The distance ladder group could have a flaw, and our large-scale cosmological measurements could be as good as gold. That would be the simplest solution to this fascinating saga. But until the critical data come in, we simply don’t know. Meanwhile, our scientific curiosity demands that we investigate. No less than the entire Universe is at stake.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.