Scientists Still Don’t Know How Fast The Universe Is Expanding

A cosmic controversy is back, and at least one camp — perhaps both — is making an unidentified error.

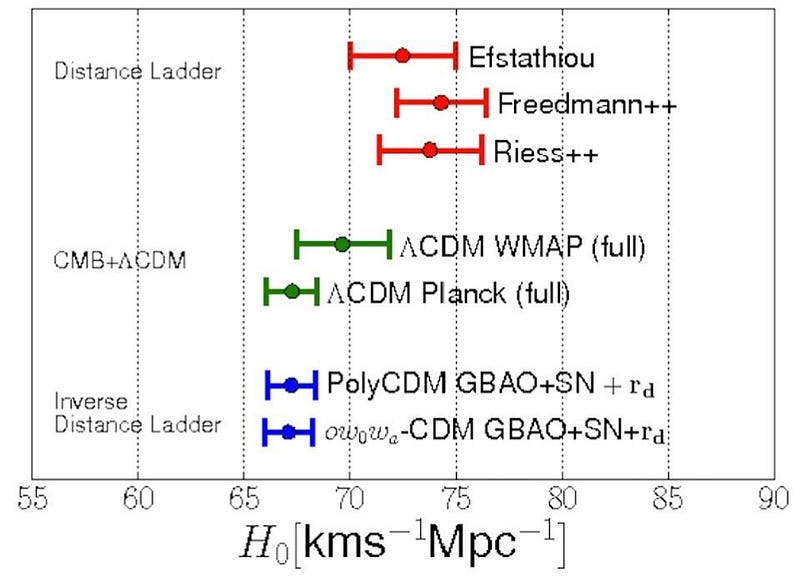

Ever since Hubble first discovered the relationship between a galaxy’s distance and its motion away from us, astrophysicists have raced to measure exactly how fast the Universe is expanding. As time moves forward, the fabric of space itself stretches and the distances between gravitationally unbound objects increase, which means everyone should see the Universe expanding at the same rate. What that rate is, however, is the subject of a great debate raging in cosmology today. If you measure that rate from the Big Bang’s afterglow, you get one value for Hubble’s constant: 67 km/s/Mpc. If you measure it from individual stars, galaxies, and supernovae, you get a different value: 74 km/s/Mpc. Who’s right, and who’s in error? It’s one of the biggest controversies in science today.

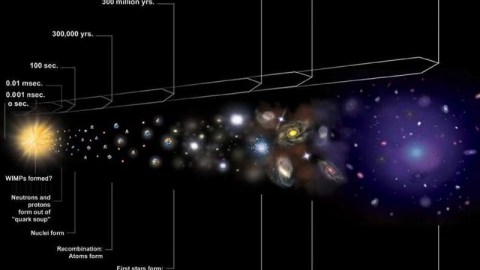

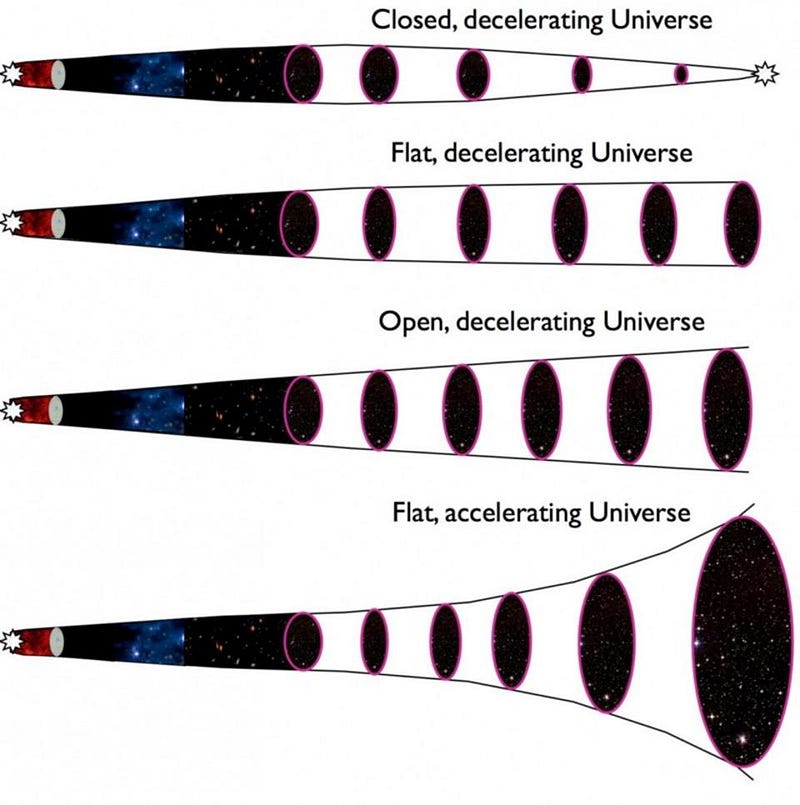

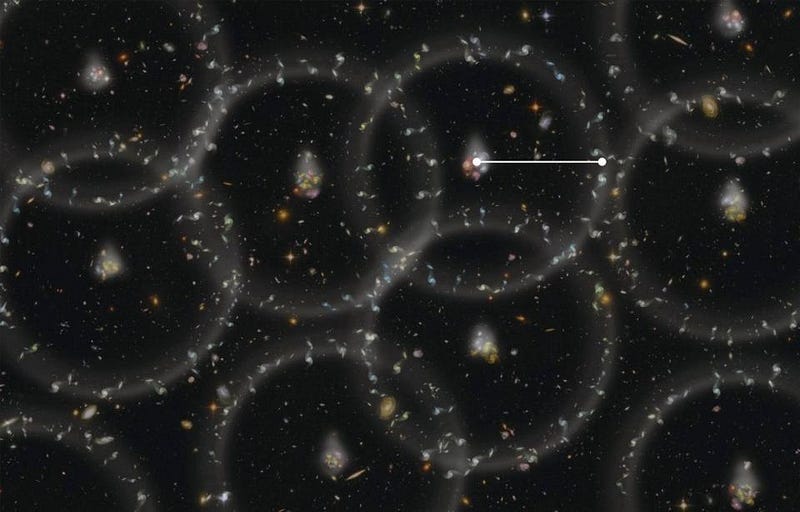

If the Universe is expanding today, that means it must have been more compact, denser, and even hotter in the distant past. The fact that things are getting farther apart, on a cosmic scale, implies that they were closer together a long time ago. If gravitation works to clump and cluster large masses together, then the galaxy-and-void-rich Universe we see today must have been more uniform billions of years ago. And if you can measure the rate of expansion today, as well as what’s in the Universe, you can learn:

- whether the Big Bang occurred (it did),

- how old our Universe is (13.8 billion years),

- and whether it will recollapse or expand forever (it will expand forever).

You can learn it all, if you can accurately measure the value of the Hubble constant.
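To see why the expansion rate is tied to the age question, it helps to look at the "Hubble time," 1/H0, which sets the rough timescale of the expansion. The sketch below is only a unit conversion, not an age calculation: the true age also depends on what's in the Universe (matter, radiation, dark energy), which this ignores. The function name `hubble_time_gyr` and the constants are choices made for this illustration.

```python
# Rough timescale of cosmic expansion: the Hubble time, 1/H0.
# This is NOT the age of the Universe; the real age also depends on
# the Universe's contents, which this simple conversion ignores.

KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in a billion Julian years


def hubble_time_gyr(h0_km_s_mpc):
    """Return 1/H0 in billions of years for H0 given in km/s/Mpc."""
    seconds = KM_PER_MPC / h0_km_s_mpc
    return seconds / SECONDS_PER_GYR


# The two values quoted in the article.
for h0 in (67.0, 74.0):
    print(f"H0 = {h0:.0f} km/s/Mpc -> 1/H0 = {hubble_time_gyr(h0):.1f} Gyr")
```

A higher expansion rate gives a shorter Hubble time, which is part of why the disagreement between 67 and 74 km/s/Mpc matters beyond the number itself.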

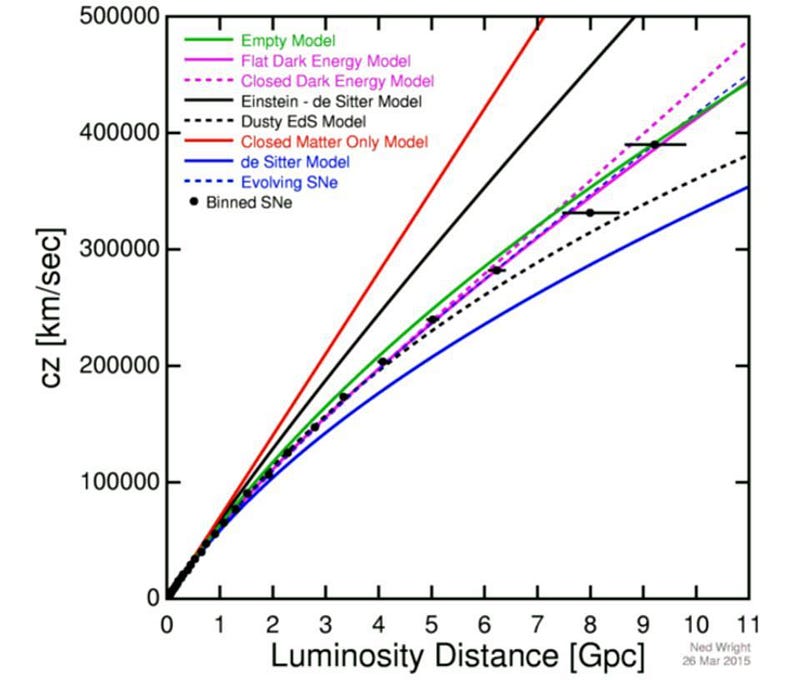

The Hubble constant seems to be a straightforward quantity to measure. If you can measure the distance to an object and the speed at which it appears to move away from you (from its redshift), that’s all it takes to derive the Hubble constant, which relates distance and recession speed. The problem arises because different methods of measuring the Hubble constant give different results. In fact, there are two major classes of methods, and the results from each class are incompatible with those from the other.
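The relationship described here is Hubble's law, v = H0 × d. As a minimal sketch, assuming the low-redshift approximation v ≈ cz (only reasonable for redshifts much smaller than 1) and ignoring peculiar velocities, you could estimate H0 as the slope of a straight line through the origin. The data below are synthetic: they are generated from an assumed H0 of 70 km/s/Mpc purely to show that the fit recovers the input, and are not real measurements. The function names are hypothetical.

```python
# Estimate the Hubble constant as the slope of v = H0 * d.
# Assumptions: v ~ c*z (valid only for z << 1), no peculiar velocities,
# and equal weight for every galaxy.

C_KM_S = 299_792.458  # speed of light in km/s


def recession_velocity(z):
    """Low-redshift approximation to the recession speed, in km/s."""
    return C_KM_S * z


def fit_hubble_constant(distances_mpc, redshifts):
    """Least-squares slope of a line through the origin, in km/s/Mpc."""
    velocities = [recession_velocity(z) for z in redshifts]
    numerator = sum(d * v for d, v in zip(distances_mpc, velocities))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator


# SYNTHETIC data: redshifts are generated from an assumed H0 so the
# fit has a known answer. These are not observations.
ASSUMED_H0 = 70.0
distances = [20.0, 50.0, 100.0, 200.0]  # Mpc
redshifts = [ASSUMED_H0 * d / C_KM_S for d in distances]

h0_fit = fit_hubble_constant(distances, redshifts)
print(f"Recovered H0 = {h0_fit:.2f} km/s/Mpc")
```

With real data, the hard part is not the fit but the distances, which is where the two classes of methods part ways.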

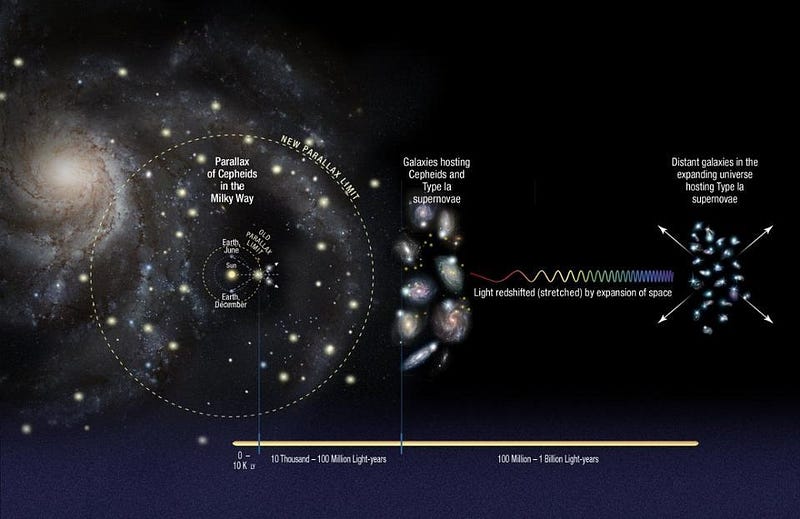

1.) The ‘distance ladder’ method. Look out at a distant galaxy. How far away is it? If you can measure the individual stars within it, and you know how stars work, you can infer a distance to that galaxy. If you can measure a supernova within it, and you know how supernovae work, same deal: you get a distance. We jump from parallax (within our own galaxy) to Cepheids (within our own galaxy and other nearby ones) to Type Ia supernovae (in all galaxies, from nearby to ultra-distant ones), and can measure cosmic distances. When we combine that with the redshift data, we consistently get expansion rates in the 72–75 km/s/Mpc range: a relatively high value for the Hubble constant.
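"Knowing how stars or supernovae work" means, in practice, knowing how intrinsically bright they are. Compare that to how bright they appear and you get a distance through the standard distance-modulus relation, m − M = 5 log10(d / 10 pc). Here is a minimal sketch of that one step. The peak absolute magnitude of about −19.3 for a Type Ia supernova is a commonly quoted approximate figure used here as an assumption, the apparent magnitude is invented for illustration, and the calculation ignores dust extinction and redshift corrections.

```python
# Standard-candle distance from the distance modulus:
#     m - M = 5 * log10(d / 10 pc)
# Assumptions: no dust extinction, no redshift (K-) corrections.

ASSUMED_SN_IA_ABS_MAG = -19.3  # approximate, illustrative value


def distance_mpc(apparent_mag, absolute_mag):
    """Return the distance in megaparsecs implied by m and M."""
    distance_modulus = apparent_mag - absolute_mag
    distance_pc = 10 ** ((distance_modulus + 5.0) / 5.0)
    return distance_pc / 1.0e6


# Hypothetical supernova observed to peak at apparent magnitude 15.7.
d = distance_mpc(15.7, ASSUMED_SN_IA_ABS_MAG)
print(f"Implied distance: {d:.1f} Mpc")
```

Because each rung of the ladder calibrates the next, a small error in an assumed absolute magnitude carries through to every distance built on top of it.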

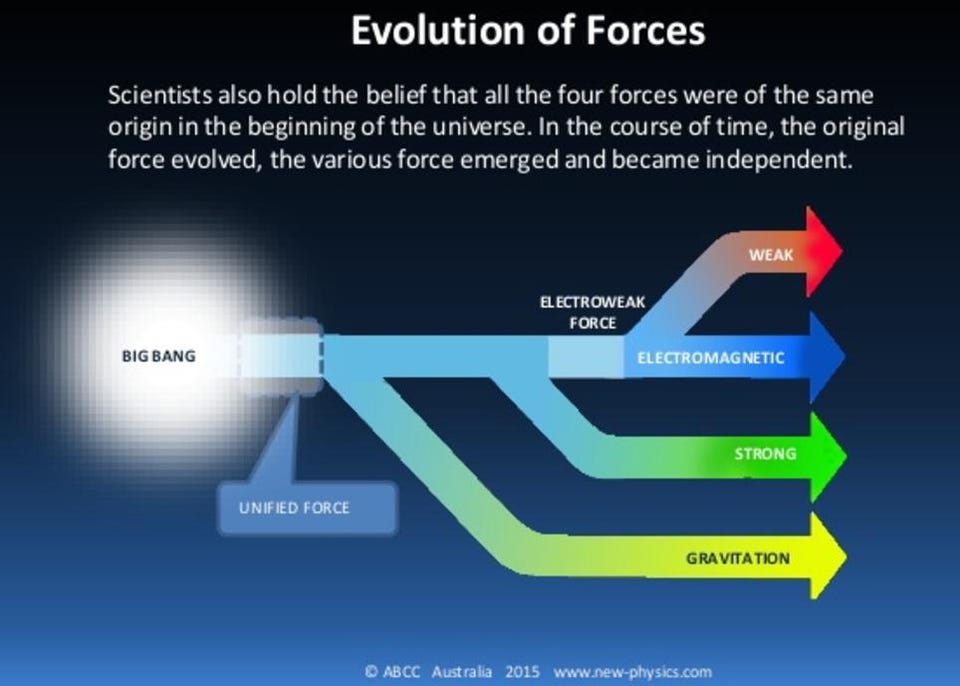

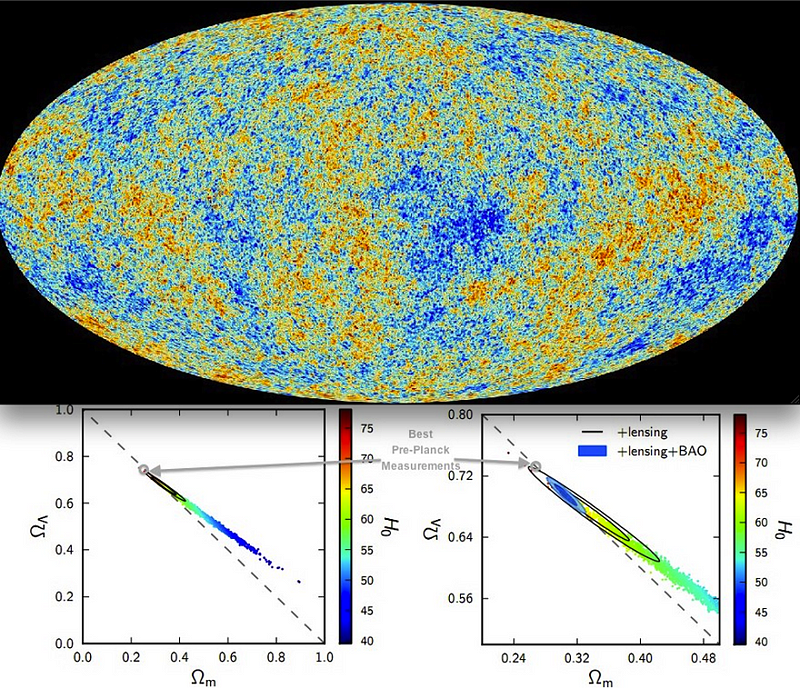

2.) The ‘leftover relic’ method. When the Big Bang occurred, our Universe came into existence with overdense and underdense regions. In the early stages, the three key ingredients are dark matter, normal matter, and radiation. Gravitation works to grow the overdense regions, where both normal matter and dark matter fall into them. Radiation works to push that excess matter out, but interacts differently with normal matter (which it scatters off of) than with dark matter (which it doesn’t scatter off of). This leaves a specific set of scale markers on the Universe, which grow as the Universe expands. By looking at the fluctuations in the cosmic microwave background or the correlations of large-scale structures due to baryon acoustic oscillations, we get expansion rates in the 66–68 km/s/Mpc range: a low value.

The uncertainties on these two methods are both pretty low, but the results they give are mutually incompatible. If the Universe has less matter and more dark energy than we presently think, the numbers on the ‘leftover relic’ method could increase to line up with the higher values. If there are errors at any stage in our distance measurements, whether from parallax, calibrations, supernova evolution, or Cepheid distances, the ‘distance ladder’ method could be artificially high. There’s also the possibility, favored by many, that the true value lies somewhere in between.
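One common way to put a number on "incompatible" is to ask how many standard deviations separate two measurements, assuming their errors are independent and Gaussian. The sketch below does that. The central values of 67 and 74 km/s/Mpc come from the article, but the uncertainties of ±1.0 and ±1.5 are hypothetical placeholders chosen only to make the arithmetic concrete; they are not the published error bars of any team.

```python
import math

# Separation between two measurements in units of their combined
# uncertainty, assuming independent Gaussian errors.


def tension_sigma(value_a, sigma_a, value_b, sigma_b):
    """Return |a - b| divided by the quadrature sum of the errors."""
    combined = math.sqrt(sigma_a ** 2 + sigma_b ** 2)
    return abs(value_a - value_b) / combined


# Central values from the article; uncertainties are HYPOTHETICAL.
relic_h0, relic_err = 67.0, 1.0
ladder_h0, ladder_err = 74.0, 1.5

tension = tension_sigma(relic_h0, relic_err, ladder_h0, ladder_err)
print(f"Tension: {tension:.1f} sigma")
```

The formula makes the problem plain: shrinking the error bars without moving the central values closer together makes the disagreement worse, not better.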

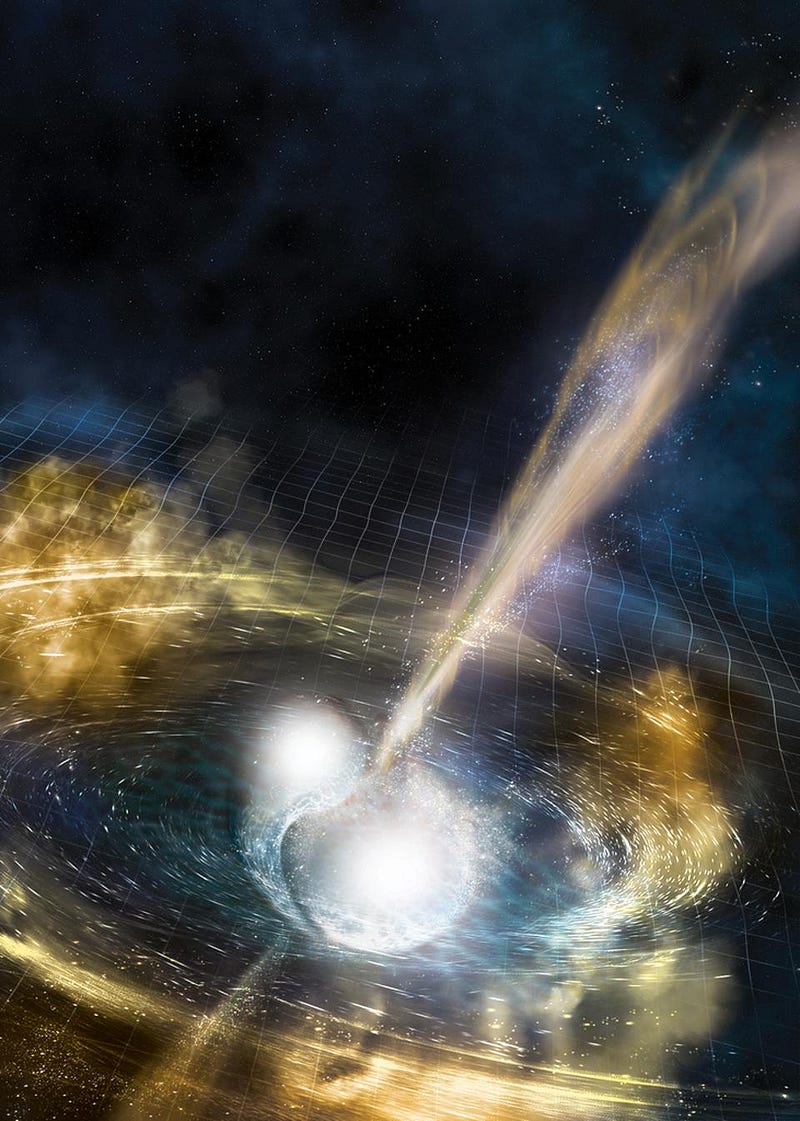

Recently, there’s been a lot of buzz that colliding neutron stars could settle the matter by providing a third, independent method. In principle, they could: the amplitude of the signal we receive is directly dependent on the distance of the merger. Observe enough of them, and (through electromagnetic follow-ups) get the redshift of the host galaxy, and you’ve got a measurement of the Hubble constant. But this third method, compelling though it is, has its own set of uncertainties, including:

- unknowns concerning the merger parameters of the neutron stars,

- peculiar velocities associated with the host galaxy,

- and local (nearby) voids and perturbations to the expansion rate.

Some of these uncertainties are the same ones that plague the ‘distance ladder’ method. If this ‘standard siren’ method, as it’s coming to be called, agrees with the higher figure of 72–75 km/s/Mpc after, say, 30 detections, that doesn’t necessarily mean the problem is solved. Instead, it’s possible that the systematic errors, or the errors inherent to the method you’re using, are biasing you towards an artificially higher value. It helps to have a third method when the first two give different results, but this third method isn’t entirely independent, and comes along with uncertainties all its own.
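The distinction between piling up detections and beating systematic errors can be sketched with a simple error budget. Random, per-event errors average down roughly as one over the square root of the number of detections; a shared systematic bias does not. Everything numeric below is a hypothetical assumption for illustration: a 15% uncertainty per event and a 2% systematic floor are invented values, not estimates for any real detector.

```python
import math

# Toy error budget: statistical errors shrink with more detections,
# a shared systematic error does not.


def total_uncertainty_percent(per_event_percent, systematic_percent, n_events):
    """Combine averaged-down statistical error with a fixed systematic."""
    statistical = per_event_percent / math.sqrt(n_events)
    return math.sqrt(statistical ** 2 + systematic_percent ** 2)


# HYPOTHETICAL inputs: 15% per event, 2% systematic floor.
for n in (1, 30, 1000):
    total = total_uncertainty_percent(15.0, 2.0, n)
    print(f"{n:5d} detections -> about {total:.1f}% uncertainty on H0")
```

However many events you add, the total never drops below the systematic term, and a systematic shared with the distance ladder would pull both methods the same way.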

Understanding exactly how quickly the Universe expands is a vital ingredient in the recipe for making sense of where everything came from, how it got to be this way, and where it’s headed. All involved teams have been incredibly careful and done fantastic work, and as our measurements have gotten more and more precise, the tensions have only increased. Yet the Universe must have a single, overall expansion rate, so there must be a mistake, error, or bias in there somewhere, perhaps in multiple places. Still, even with all the data we have, we must be careful. Having a third method isn’t necessarily going to be a tiebreaker; if we’re not careful, it may turn out to be a new way to fool ourselves. Misinterpreting the Universe doesn’t change what reality actually is. It’s up to us to make sure we get it right.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.