Le problème du chariot. El problema del carro. The trolley problem. A moral choice in another language is NOT the same.

A few thoughts on your lazy brain. But just a few because, well, you know, the brain likes things nice and easy.

The brain normally operates on what psychologists have come to call System One, a principally subconscious, faster, and more instinctive way of processing information and figuring things out. System One relies mostly on feelings and a toolkit of hidden mental shortcuts to help us sense our way through the choices we make, rather than thinking about each one methodically and consciously. System Two refers to the cognitive processes that kick in when we stop and think and purposefully pay attention.

But that takes time, and calories. We usually don’t have the time to think everything through carefully. The brain is, pound for pound, the most calorie-hungry organ in the body (it uses 20-25% of the calories we burn in an average day). Survival sometimes demands very fast decisions. And while the brain was evolving, our next meal was never guaranteed. So human cognition has developed to run mostly on the faster, easier, and more energy-efficient System One.

(If you want to learn more, this is all known as the Dual Process model of cognition, first proposed by philosopher and psychologist William James. Keith Stanovich and Richard West are credited with the “System One – System Two” labels that have been adopted as the lead characters in Daniel Kahneman’s masterwork, *Thinking, Fast and Slow*.)

Except when we deliberately override this default and stop to think, we don’t consciously choose which of these two components of cognition to use at any given moment or for any specific task. The task at hand subconsciously calls on one system or the other to help figure things out. (It’s actually not as simple as ‘either/or’. Cognition is almost always a combination of both ‘systems’.) But depending on which one is more active, we make either more instinctive and emotional choices (System One) or more coldly analytical ones (System Two). That has profound consequences, as an intriguing study by Albert Costa and colleagues illustrates by showing how it shapes the moral choices we make.

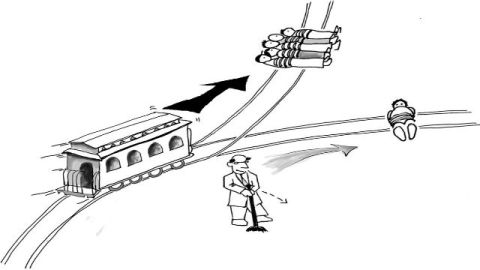

Costa posed the classic Trolley Problem to study subjects. In the first version, you’re asked: “A trolley is coming and is about to kill five people you see standing on the tracks. If you throw a switch, you can divert it onto a siding where it will kill only the one person you see standing there. What would you do?” Most people throw the switch. The second version gets stickier: “You’re standing on a bridge as a trolley approaches, about to kill five people you see on the tracks below. A fat man is standing next to you. If you push him off the bridge, he will be killed, but he will stop the trolley and save the five people. What would you do?” It’s obviously emotionally tougher to push a real, live person to his death than to kill someone by pulling a mechanical switch. Far fewer people push the fat man, even though numerically the trade-off is identical: one life for five.

All of Costa’s subjects were bilingual. Half read the question in their native language, and half read it in a second language they could speak and read well, though not fluently. (Subjects included native speakers of English, Korean, Spanish, French, and Hebrew.) Of the people who faced the Trolley Problem in their native language, 20%, or one person in five, said they’d push the fat man to his death. But more of those who got the challenge in their non-native language, 33%, or one in three, said they’d push the fat guy off the bridge.

Remember, the choices are numerically identical: kill one to save five. So pourquoi la différence, por qué la diferencia, 왜 차이, מדוע ההבדל: why the difference? Dr. Costa speculates that the subjects reading a foreign language had to translate it, which engaged the more analytical System Two, while those reading the challenge in their native tongue could stay in the more instinctive, emotion-based default System One mode. The System One readers made the choice based more on their feelings, while those relying more on analytical System Two could see more clearly that the choices were numerically the same.

This is fascinating, and scary, because this is what’s going on in your brain and mine all the time, not just when we face moral choices but at every moment our brains are interpreting information to make sense of the world. From stimuli as simple as what we see, hear, smell, or taste to choices as complex as those about relationships, personal safety, or where we stand on questions of values, the brain is sorting things out and shaping our perceptions of the world along with our choices, judgments, feelings, and behaviors. It does this through processes that are either more emotional and instinctive or more analytical and ‘rational’, and we have little say (limited free will, really) over which of these cognitive systems is in control.

We can stop and think about things carefully, and our decisions will be wiser and healthier if we do. But mostly we don’t. As Ambrose Bierce suggested in *The Devil’s Dictionary*, the brain is only the organ with which we think we think.

Think about THAT!

(By the way, if you don’t want to worry about being pushed in front of a trolley to save others, East Asia is the place to be. None of the native or bilingual Korean speakers pushed the fat man off the bridge, a response that Costa et al. report is generally true of East Asians in these sorts of moral tests.)