Will social media’s impact on the elections be different this time?


- The effective subversion of social media during the 2016 U.S. presidential election was unprecedented and highlighted the major role social media plays in politics.

- Today, it’s harder to repurpose private social data than it was four years ago, but paid and organic audience microtargeting continues.

- Fake news and disinformation still spread freely. Networks of fake accounts are being taken down, but there’s no way to know what percentage of them continue to operate. Meanwhile, the same principles still power news feed algorithms, surfacing partisan content and reaffirming audience biases.

Social media has emerged as a political intermediary used to assert influence and achieve political goals. The effective subversion of social media during the 2016 U.S. presidential election was unprecedented and highlighted the major role social media plays in politics.

With the continual growth of online communities, Americans have gained greater access to the political landscape and election news. On the other hand, social media can also be used to spread misinformation and engender bias among voters.

With a new election just around the corner, it’s worth revisiting the dynamics of social media and American politics in order to better anticipate what we can expect on November 3, 2020.

It didn’t take long for pundits to recognize how much manipulative electioneering had changed with the rise of social media. Soon after the results were announced, it became clear that the 2016 election was a watershed moment for how targeted propaganda can be disseminated using advanced computational techniques.

Consulting firm Cambridge Analytica, for example, bypassed Facebook’s rules by way of an app that required users to log in with their Facebook accounts and then mined vast amounts of personal information about those users and their friends. This information was then shared with Cambridge Analytica’s network of contacts, despite Facebook’s ban on downloading private data from its ecosystem and sharing it with third parties.


The firm then leveraged the data to build microtargeted political ad campaigns, according to former Cambridge Analytica staffer and whistleblower Christopher Wylie. Facebook suspended Cambridge Analytica, but it still won’t stop microtargeted campaigns on its platform. To make things worse, former Cambridge Analytica analysts are already back at work.

One trend to watch in microtargeting is the rise of nanoinfluencers. These small-time influencers have far fewer followers but reach a highly tailored audience. Political marketers will leverage nanoinfluencers in tandem with other forms of social media manipulation to digitally knock on the doors of those most likely to be swayed by their canvassing. But knocking on the right doors requires data.

The continued availability of such data and the enduring popularity of psychographic segmentation mean that unethical use of user information will likely still play a role in the 2020 elections.

Dividing voters into narrow segments and then whispering targeted messages into their ears was also central to the Russian trolls’ strategy of spreading disinformation via social media to influence the outcome of the 2016 election. An estimated 126 million American Facebook users were reached by Russian content over the course of that subversive campaign.

Aside from fake news, hackers also skewed the election by illegally obtaining private information and documents and then releasing them amid considerable hype. The WikiLeaks scandal gave hackers the opportunity to discredit Clinton and the DNC leadership by leaking emails days before the party’s convention. Likewise, the full circumstances surrounding FBI Director James Comey’s October 28 letter to Congress, discussion of which dominated social media news feeds for weeks, will likely never be revealed.

Social media big hitters have told Congress that they are taking active steps to prevent the spread of disinformation and guard against foreign influence, but stopping deceptive networks is a constant battle. In 2019, Facebook removed 50 networks of foreign origin, including networks tied to Iran and Russia, that were actively spreading fake information, and from January to June of this year it removed another 18. Just this month, Facebook removed dozens of troll accounts based in Romania for coordinated inauthentic behavior.

Both Twitter and Facebook have begun flagging posts from public figures that contain disinformation, although the two platforms’ flags look considerably different.


Facebook, which eventually instituted new ad transparency policies, has also banned ads from state-controlled media outlets, such as those in Russia or China. This won’t stop governments from turning to more illicit means of spreading propaganda on Facebook and into the minds of America’s voters; identifying proxies can be tricky. Besides, they largely got away with it last time, even convincing mainstream media outlets to pick up some of their fake stories.

With election season well under way, the spread of disinformation, possibly with foreign involvement, has already begun. And it isn’t limited to the election: the same tactics are being used to spread lies about the coronavirus pandemic and the racial justice protests in order to incite division and unrest. Even with constant vigilance, countries inclined toward information warfare, with the technical know-how and the will to do harm, could well influence the upcoming election, and that should concern us all.

After the Cambridge Analytica scandal, social platforms made efforts to change their algorithms and policies to prevent manipulation. But those efforts just aren’t enough.

On Twitter, complete anonymity and the proliferation of automated bots and fake accounts were integral to the 2016 campaign, and they continue to outpace any efforts the platform makes to curb disinformation. Just last month, a hack of verified “blue check” Twitter accounts, including those of Obama and Biden, showed that this year’s election is still at risk.

Hackers have a rapt audience if they manage to get in. Close to 70 percent of American adults are on Facebook and millions are on Twitter, most of them using the platforms every day. As part of the 2016 campaign, foreign agents published more than 131,000 tweets and uploaded over 1,100 videos to YouTube. Now, with the global rise of TikTok, there are even more ways to target voters.

Digital propaganda has only improved with time, and despite the valiant efforts of the Dr. Frankensteins who created it, the social media monster is not easily subdued. This month, YouTube banned thousands of accounts involved in a coordinated influence campaign and Facebook shut down even more; Facebook has also implemented additional encryption and privacy policies since 2016. On Snapchat, Reddit, Instagram and elsewhere, malicious manipulation is just a click away, and there is only so much that community standards and terms of service agreements can do to stop it.

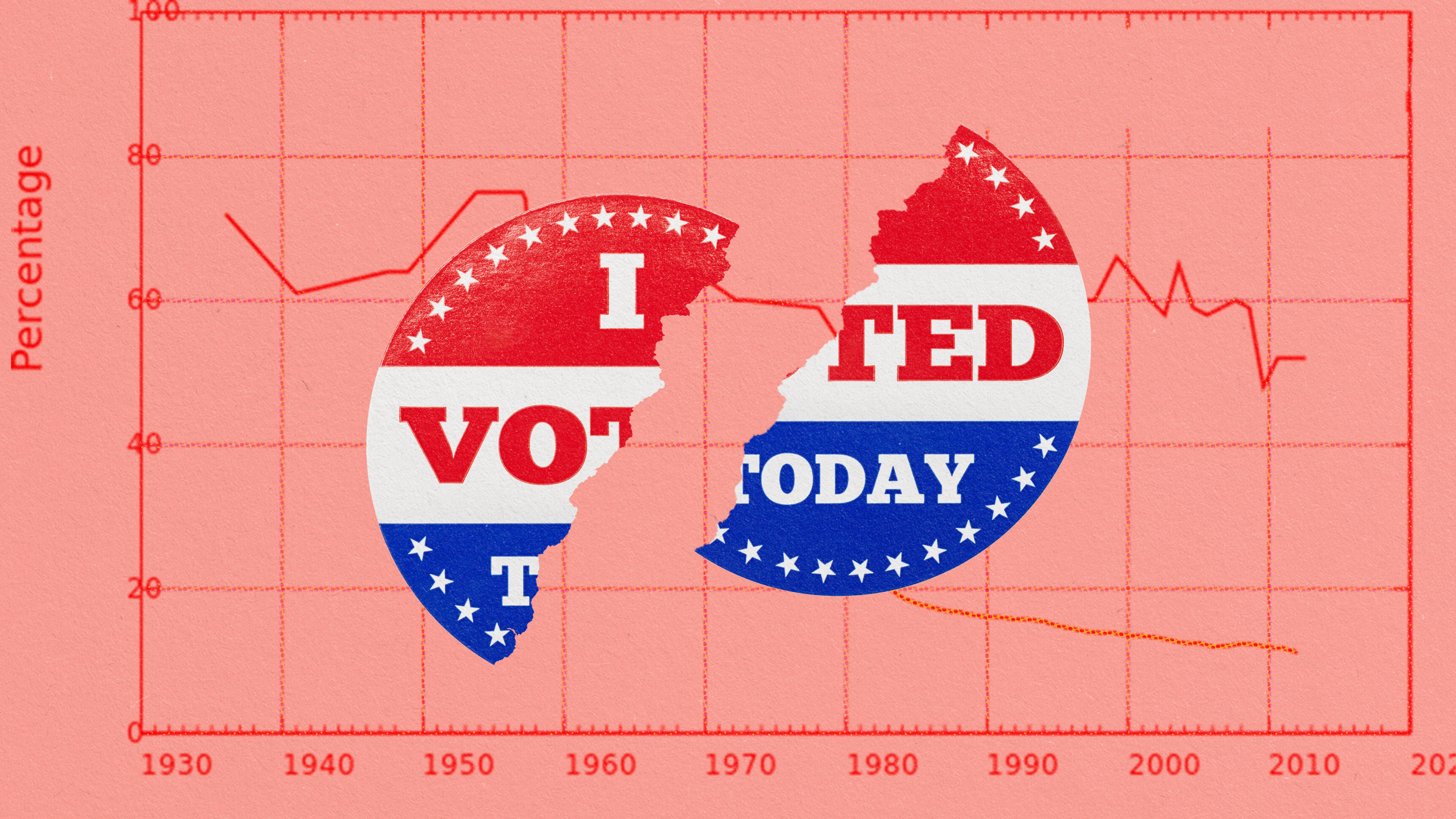

Since 2016, more Americans than ever have come to mistrust mainstream news and get their facts from social media, making misinformation and deliberate disinformation a concern in 2020. By 2017, only half of Americans often watched TV for news, while the share who often got their news online had reached 43 percent, just 7 percentage points lower.

The problem isn’t that people are getting their news from the internet; it’s that the internet is the perfect forum for spreading fake news. And a rapidly growing number of Americans take at least some of this fake news as fact. Furthermore, believing misinformation is linked to a decreased likelihood of being receptive to accurate information.


The proliferation of online echo chambers amplifies the spread of partisan messaging. Americans who engage with partisan content are likely choosing to do so because the story confirms their existing ideologies. In turn, social media algorithms exacerbate this tendency by prioritizing content similar to what we already engage with. This algorithmic amplification of confirmation bias screens out dissenting opinions and reinforces the most marginal viewpoints.

Extreme online groups exploit this tendency to market to homogeneous networks. Recent research demonstrates that social networking sites such as Facebook and Twitter can facilitate self-selection into homogeneous networks, increasing polarization and solidifying misinformed beliefs. The fundamental principles behind these algorithms haven’t changed since 2016, and we’re already seeing them at play in 2020, fomenting polarization as civil unrest and the pandemic deepen divisions.

In 2016, subversive actors used social media to manipulate the American political process, both from within and from without. There is little reason to expect that things will be much different this year. If anything, the risks are greater than before. There is, however, a glimmer of hope from a somewhat ironic place: TikTok.

Flipping the script on Twitter-happy Trump, activists on TikTok waged a weeks-long campaign to artificially inflate the number of people registered to attend his June campaign rally in Tulsa. The prank worked: campaign staff bragged that anticipated turnout was going through the roof, yet only 31 percent of the arena’s seats ended up occupied by Trump supporters.

Anecdotal as it is, this episode shows that while social media can be a tool for the halls of power to manipulate the masses, it can also be a tool for grassroots mobilization against them.