Trusting a Robot with Your Life: Can Self-Driving Cars Earn the Public’s Trust?

We should never lose sight of how much trust we have come to place in technology. Of course, the reason we give tech-based services our trust is that, well, they’ve earned it: they’re fast; they’re efficient; they’re generally reliable; they work, to our eyes, as if by magic. We trust them even when we don’t trust them: however dubious people generally are about giving up their personal information to tech companies, that dubiousness doesn’t appear to be enough to alter anybody’s habits. A company’s ability to provide a service efficiently overwhelms the tertiary consideration of whether it does so responsibly. We don’t like the idea of a corporation manhandling our data, but that concern ultimately remains in the realm of ideas: too abstract, or at least not powerful enough, to sway anyone’s behavior.

In other words, it seems as if we’ll trust tech to do anything for us, as long as it does it fast and well and cheap. But what happens when tech moves into fields where the stakes are higher than keeping in touch with friends, looking up shawarma places, or delivering stuff to our doorsteps? Will we be willing to trust Google, Apple, Tesla, or brands yet to be created with our lives? That’s not a thought experiment. In developing self-driving cars, tech companies are worming their way into the automotive industry, where the result of a software bug won’t be a website outage or an app crash, but crumpled steel, distant sirens, a crowd of onlookers, a cry for help…

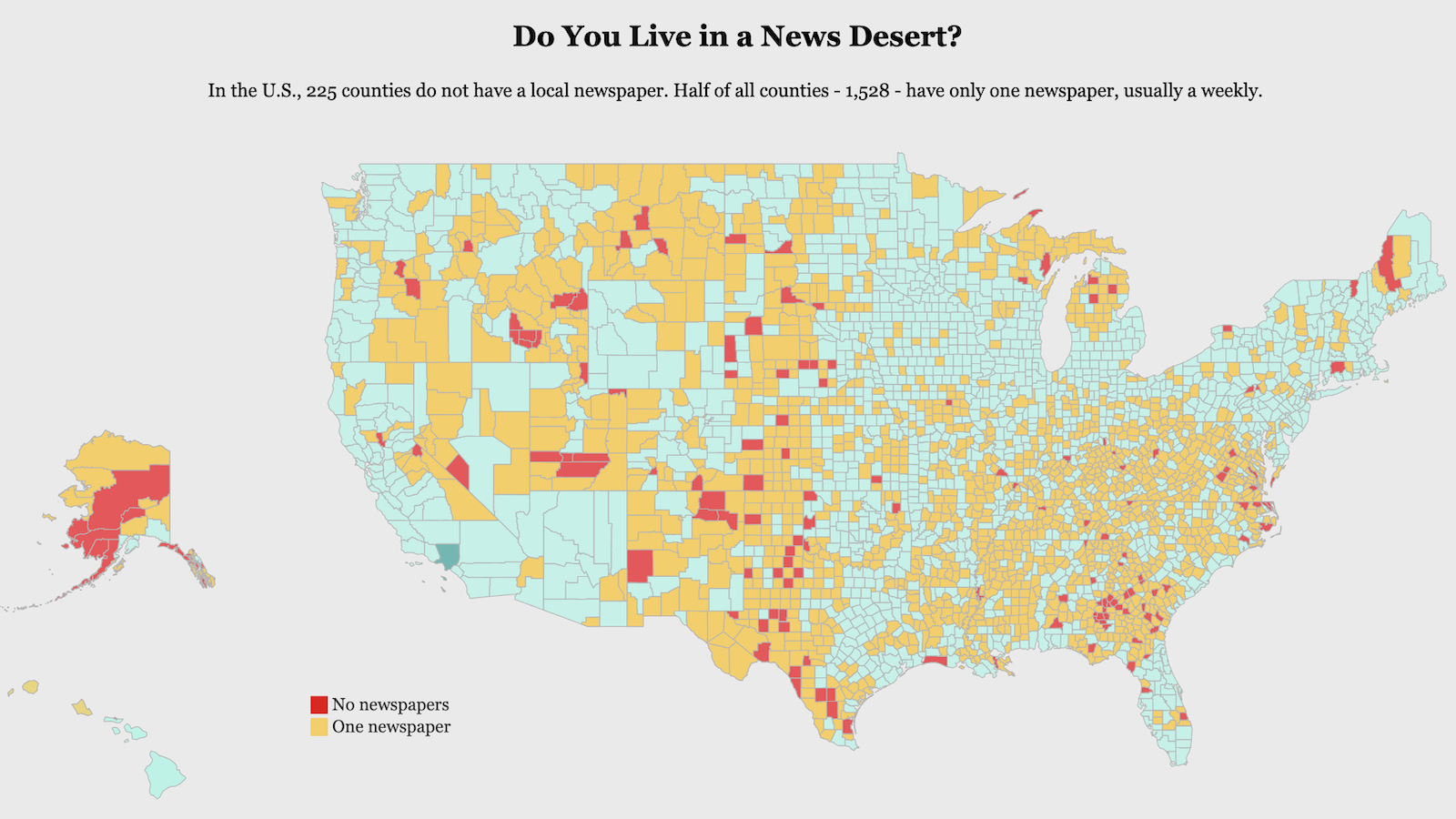

Today, only about 26% of consumers say they would purchase a self-driving car, and the main barrier seems to be trust. Most people don’t believe an autonomous car can keep them safe, never mind that nearly all car accidents are the result of human error. Trust, in this case, has far more to do with instinct than with reason or practicality. For example, people who take test rides in self-driving cars tend to be made nervous by the narrow berth the robotic driver gives parked vehicles as it passes them. The fact that self-driving cars are more precise than humans has the ironic effect of reducing our trust in them. On the other hand, if a consumer is presented with a hypothetical dilemma faced by an autonomous system, such as being forced to choose between the life of the vehicle’s passenger and the life of a pedestrian, the consumer may refuse to consider the idea, period. She will say that as long as such a dilemma is within the realm of possibility, the technology simply should not be deployed. In other words, it has to be perfect. But demanding such perfection is, of course, like trying to contemplate infinity.

Ultimately, the developers of autonomous vehicles will have to meet a public threshold of trust far above what we expect from tech companies or humans today. Even technology leaders in aerospace, such as Boeing, Airbus and others, still keep a person in the left seat, even if that pilot spends most of the flight watching the system operate and giving everyone behind them a warm feeling that “someone” is in control. Note, too, that Hollywood is still making movies about human heroes making decisions in a pinch, such as Sully (2016). This trust gap, as it were, is an opening that could very well be exploited by any brand willing to make the leap into the automotive industry. The company that comes to dominate this burgeoning industry could just as well be one that nobody’s talking about right now (Amazon? Verizon? Microsoft?) as Google or Tesla. The question will be which company can best leverage its image to convince customers to entrust it with their lives.

For years, tech has operated on the ethos of disruption. Nothing makes Silicon Valley happier than upending a whole industry. But disruptive may not be what you want to be when you’re in the business of human lives. Moving too hastily — pushing for the implementation of autonomous technologies before they’re ready — could lead to disaster. If the rollout of self-driving cars leads to negative headlines questioning their reliability, then their developers will find themselves struggling to make up a yawning trust deficit, something that could delay the wide-scale adoption of autonomous vehicles for years.

The smartest way for tech companies to move forward with autonomous cars might be to work closely with the government. Sometimes government moves sluggishly because it is inefficient, yes; but often, the pace of government merely reflects the gravity of the duties to which it has been assigned, and the accompanying need to act prudently. Policymaking can act as a circuit breaker when society may not yet be ready for dramatic change. Tech will have to develop something of a conservative streak and a willingness to work closely with regulators if it wants to survive in the auto industry.

In 1900, the driverless elevator was invented, to which you might respond, “Why would an elevator need a driver?” Riding an automatic elevator is second nature to us today, but it was outright feared when it was introduced. People who stepped into an automatic elevator were apt to turn around and walk right back out of it. It took over fifty years, an elevator operators’ strike, and a coordinated industry ad campaign for driverless elevators to finally be accepted, a cautionary tale for those of us who hope for big things from autonomous vehicles in the near future. Is fifty years the timeline we ought to expect for people to grow fully comfortable with a computer taking the wheel? Or will the practical benefits of self-driving cars quickly overwhelm people’s concerns? So far, at least, tech companies have always been able to bank on that.

MIT AgeLab’s Adam Felts contributed to this article.