People who see God as a white man tend to prefer white men for leadership positions

GODONG/Corbis via Getty Images

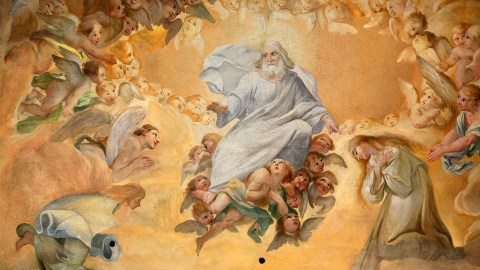

When you picture God, who do you see: a young black woman, or an old white man? Chances are it’s the latter, and a new study in the Journal of Personality and Social Psychology suggests that this image has consequences.

Across a series of seven studies, a team led by Steven O Roberts at Stanford University found that the way we perceive God, and in particular our beliefs about God’s race, may influence our decisions about who should be in positions of leadership more generally.

First, the team examined how 444 American Christians — a mixture of men and women, some black and some white — pictured God. In their “indirect” measure, the researchers asked participants to view 12 pairs of faces that differed either in age (young vs old), race (white vs black), or gender (man vs woman), and pick the photo of each pair they thought looked more like God. Participants were also asked to explicitly rate God on each of these characteristics (e.g. whether they thought God was more likely white or black).

On both measures, participants were more likely to see God as old than young, and male rather than female. But participants’ view of God’s race depended on their own race: white participants tended to see God as white, while black participants tended to see God as black.

So people clearly conceptualise God in a specific way, but how does this relate to decisions they make in their everyday lives? For Christians, God is the ultimate leader, so perhaps they look for the characteristics they ascribe to God in other leaders too. In a second study, the team asked more than 1,000 participants to complete the same direct and indirect measures as before, as well as a new task in which they imagined working for a company that was looking for a new supervisor. They saw 32 faces that varied in gender and race and had to rate how well each person would fit the position.

The team found that when participants saw God as white, they tended to give white candidates a higher rating compared to black candidates. The reverse was true too: participants who saw God as black tended to rate black candidates as more suited than white ones. People who saw God as male also rated males higher than females. A subsequent study found that even children aged 4 to 12 generally perceived God as male and white, and those who conceptualised God as white also viewed white people as more boss-like than black people.

The results suggest that the extent to which people see God as white and male predicts how much they will prefer white men for leadership roles. Interestingly, these effects held even after controlling for measures of participants’ racial prejudice, sexism and political attitudes, suggesting that the effects couldn’t simply be explained by these kinds of biases. Of course, when people saw God as black, these effects were reversed, with participants preferring black candidates. But the fact is that in America, the idea that God is white is a “deeply rooted intuition”, the authors write, and so this conceptualisation could potentially reinforce existing hierarchies that disadvantage black people.

However, there’s a big limitation here: these studies were all based on correlations between beliefs about God and beliefs about who should be leaders. That is, it wasn’t clear whether perceptions of God’s race actually cause people to prefer certain leaders or whether there’s something else going on that could explain the link between the two.

To address this question of causality, the team turned to made-up scenarios in which participants had to judge who would make good rulers based on the characteristics of a deity. Participants read about a planet inhabited by different kinds of aliens, “Hibbles” or “Glerks”, who all worshipped a Creator. Participants’ judgements about whether Hibbles or Glerks should rule the planet tended to depend on whether the Creator itself was a Hibble or a Glerk.

These final studies provide some evidence that the ways in which people picture God, or at least an abstract God-like being, do indeed filter down to actively influence beliefs and decisions in other areas of their lives. The authors suggest that future work should look at how to prevent people making these kinds of inferences.

Of course, the research focuses on a specific group: all participants lived in America, and in most studies they were Christian (some of the later studies also included atheists). It remains to be seen whether similar patterns exist amongst adherents of other religions, or in countries with different demographics. It would also be important to figure out whether perceptions of God influence decisions in the real world, and not just in the lab.

Still, as the authors write, the results “provide robust support for a profound conclusion: beliefs about who rules in heaven predict beliefs about who rules on earth.”

Matthew Warren (@MattbWarren) is Editor of BPS Research Digest

Reprinted with permission of The British Psychological Society. Read the original article.