Can Crowdsourcing Teach AI to Do the Right Thing?

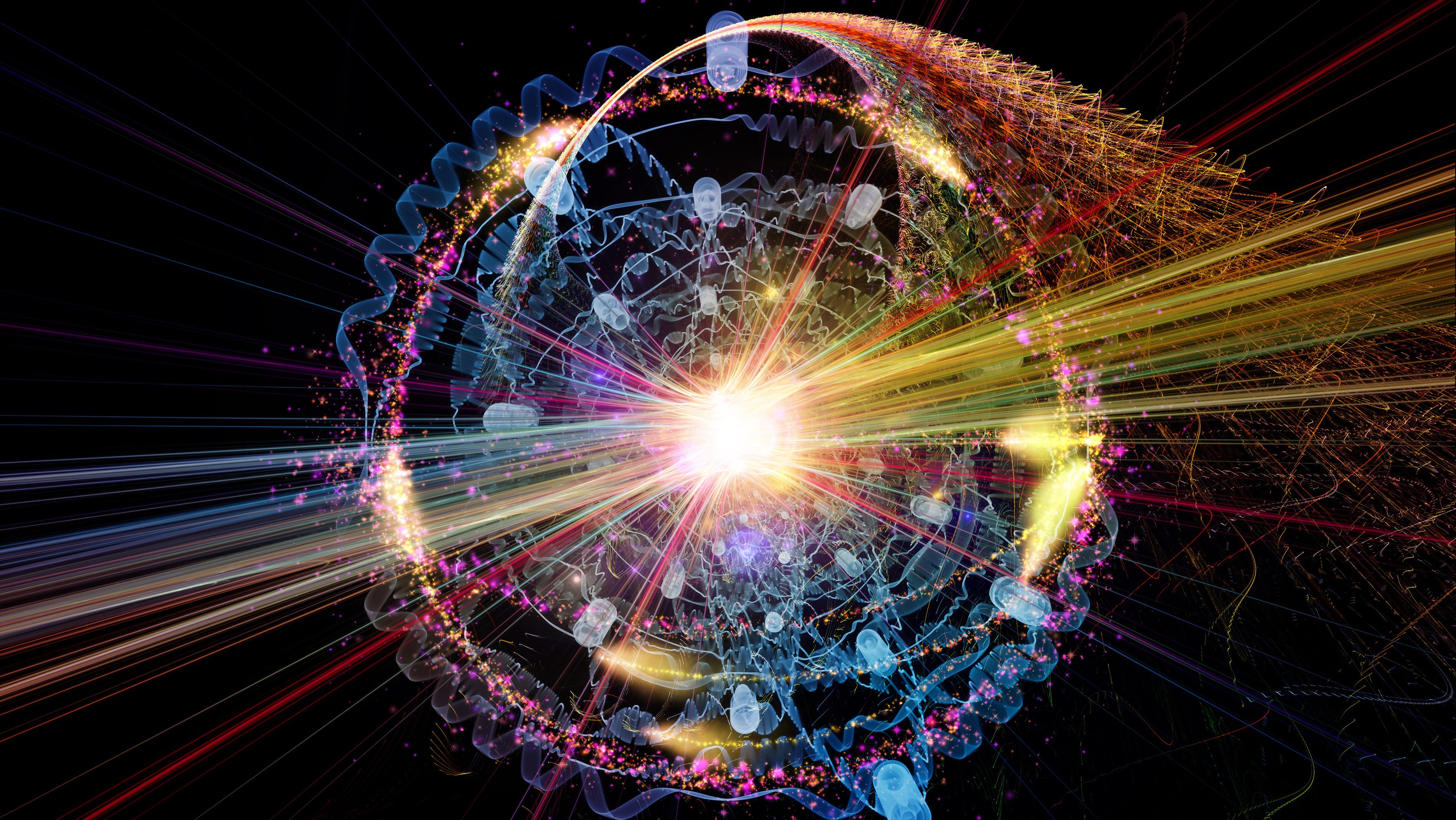

As we cede more and more control to artificial intelligence, it’s inevitable that those machines will need to make choices based, hopefully, on human morality. But where are AI’s ethics going to come from? AI’s own logic? Rules written by programmers? Company executives? Could they be crowdsourced, essentially voted on by everyone?

Alphabet’s DeepMind division now has a unit working on AI ethics, and in June 2017 Germany became the first nation to officially begin addressing the question, with a report issued by its Ethics Commission on Automated and Connected Driving. For anyone worried about machines taking over, getting AI’s ethics right is central to our survival. As Stephen Hawking put it, “The development of artificial intelligence could spell the end of the human race.”

The problem’s already here in the form of autonomous vehicles. It’s just the first case, and undoubtedly not the last. And it’s challenging from the get-go.


Researchers at MIT recently developed Moral Machine, a website built around the trolley problem, to test how crowdsourcing could work. It presents the ethical choices confronting autonomous vehicles as a game in which players can award Likes to other people’s choices and see how their own opinions fare.

Researchers from Carnegie Mellon took the data from Moral Machine and turned it into an AI algorithm for self-driving cars, as detailed in a report published in September 2017. They began by constructing a model of each Moral Machine player’s decisions that would allow the researchers to predict his or her choice when faced with “all possible alternatives.” The next step was to “Combine the individual models into a single model, which approximately captures the collective preferences of all voters over all possible alternatives.”
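For readers who want to see the shape of that two-step idea, here is a minimal Python sketch. It is not the researchers’ actual method: the two features (people spared, passengers spared), the perceptron-style fitting, and the simple averaging of models are all assumptions made purely for illustration, and every name in it is hypothetical.

```python
from typing import List, Tuple

Vector = List[float]
# One recorded decision: (features of the option chosen, features of the option rejected).
# Hypothetical features for illustration: [people spared, passengers spared].
Comparison = Tuple[Vector, Vector]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fit_voter(comparisons: List[Comparison], n_features: int,
              epochs: int = 50, lr: float = 0.1) -> Vector:
    """Step 1: learn one weight vector per voter (perceptron-style, an assumption).

    Whenever the option the voter chose does not score higher than the one
    they rejected, nudge the weights toward the chosen option.
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            if dot(w, chosen) <= dot(w, rejected):
                w = [wi + lr * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w


def combine(models: List[Vector]) -> Vector:
    """Step 2: merge individual models into one by averaging weights (an assumption)."""
    n = len(models)
    return [sum(column) / n for column in zip(*models)]


def choose(w: Vector, option_a: Vector, option_b: Vector) -> int:
    """Return 0 if the model prefers option_a, 1 if it prefers option_b."""
    return 0 if dot(w, option_a) >= dot(w, option_b) else 1


# Three made-up voters, each with a single recorded decision.
voters: List[List[Comparison]] = [
    [([3.0, 0.0], [1.0, 0.0])],  # prefers sparing more people
    [([2.0, 1.0], [1.0, 1.0])],  # same preference, different scenario
    [([0.0, 1.0], [2.0, 0.0])],  # prefers sparing the passenger
]

models = [fit_voter(decisions, n_features=2) for decisions in voters]
collective = combine(models)

# Ask the combined model about a scenario none of the voters saw.
decision = choose(collective, [3.0, 0.0], [0.0, 1.0])
```

Even in this toy version, the combined model has to decide a scenario that no individual voter was ever asked about, which is the point of building a model rather than simply tallying votes.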

Critics point out a few ways crowdsourcing morality could go wrong. For one thing, it involves people. Just because an ethical choice wins a poll doesn’t mean it’s truly ethical, only popular. As Cornell Law professor James Grimmelmann tells The Outline, “It makes the AI ethical or unethical in the same way that large numbers of people are ethical or unethical.” We’ve all been caught on the wrong side of a vote, still sure we’re the ones who are right.

There’s also the matter of who the crowd is. The results can only reflect the opinions of the people who took part, so there’s a good chance of sample bias.

And finally, there’s potential bias in the people who convert raw crowdsourced data into decision-making algorithms, with the clear potential for different analysts and programmers to arrive at different results from the same data.

One of the authors of the study, Carnegie Mellon computer scientist Ariel Procaccia, tells The Outline, “We are not saying that the system is ready for deployment. But it is a proof of concept, showing that democracy can help address the grand challenge of ethical decision making in AI.” Maybe.